v1.0

Showing

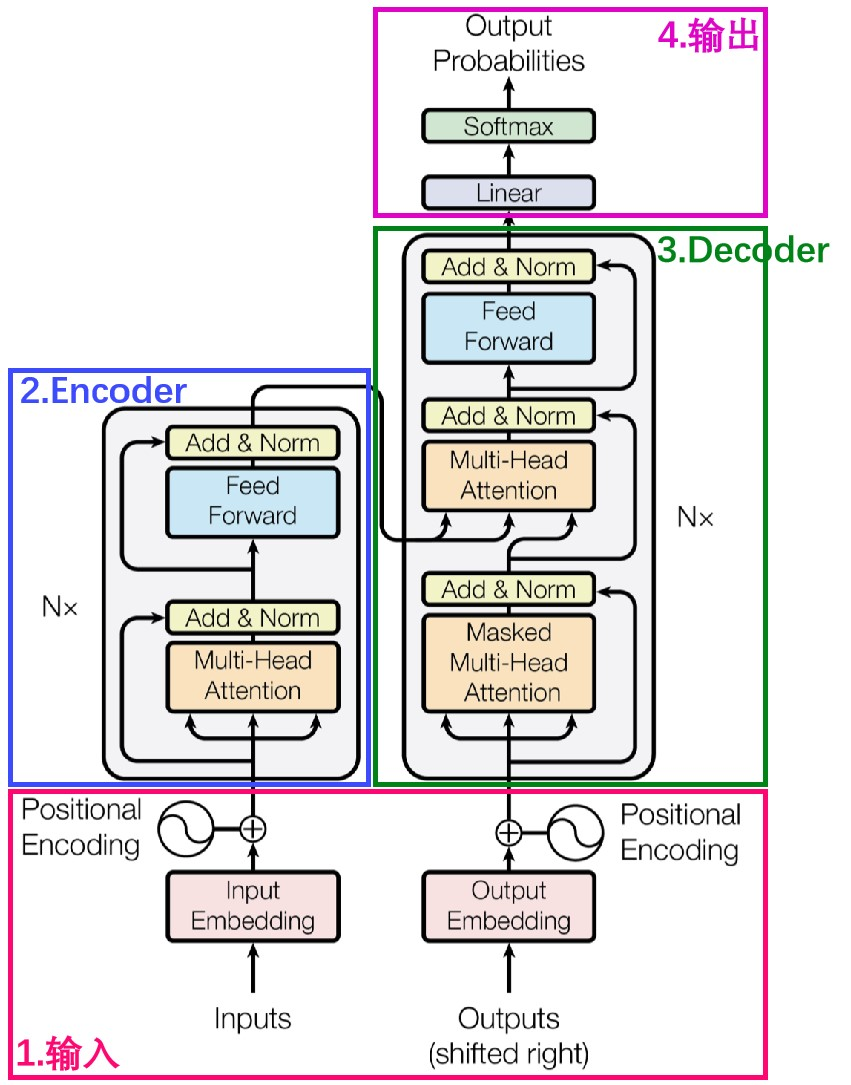

doc/transformer.png

0 → 100644

366 KB

docker/Dockerfile

0 → 100644

docker/requirements.txt

0 → 100644

docker_start.sh

0 → 100644

environment-cpu.yml

0 → 100644

environment-cuda.yml

0 → 100644

infer.py

0 → 100644

minimal_example.py

0 → 100644

model.properties

0 → 100644

nbs/.gitignore

0 → 100644

nbs/_quarto.yml

0 → 100644

nbs/common.base_auto.ipynb

0 → 100644

This diff is collapsed.

nbs/common.base_model.ipynb

0 → 100644

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

nbs/common.modules.ipynb

0 → 100644

This diff is collapsed.