lettucedetect

Showing

model.properties

0 → 100644

pyproject.toml

0 → 100644

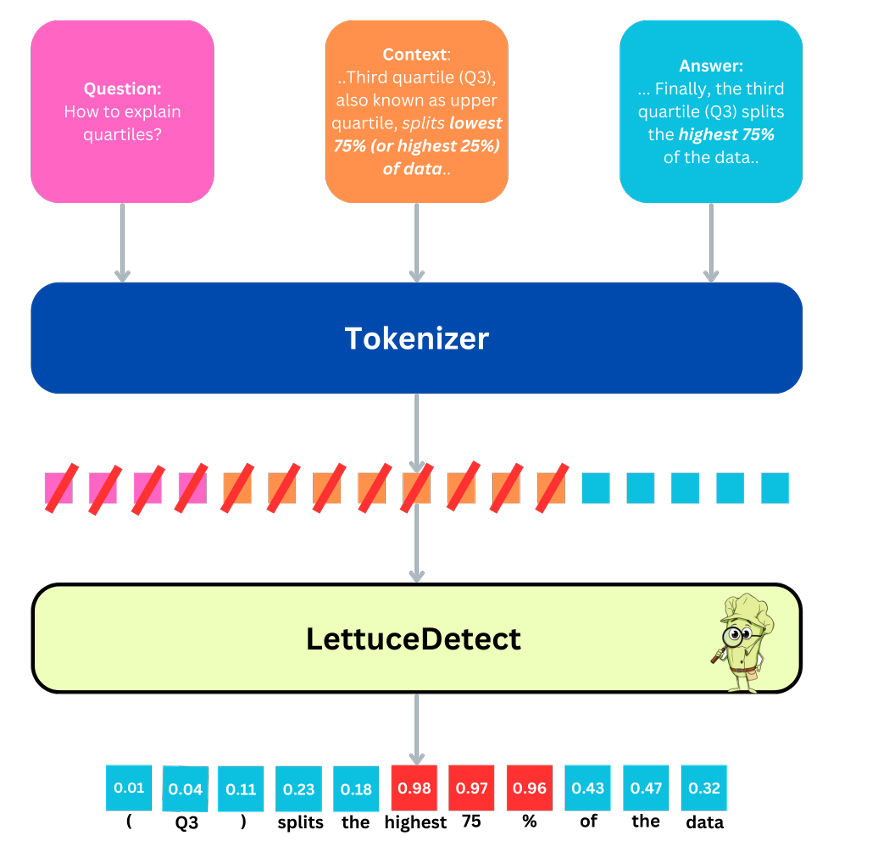

readme_imgs/arch.png

0 → 100644

123 KB

scripts/analyze_tokens.py

0 → 100644

scripts/evaluate.py

0 → 100644

scripts/train.py

0 → 100644

scripts/upload.py

0 → 100644

tests/__init__.py

0 → 100644

tests/conftest.py

0 → 100644

tests/pytest.ini

0 → 100644

tests/run_pytest.py

0 → 100644

translate/translate.py

0 → 100644