InstructBLIP

Too many changes to show.

To preserve performance only 1000 of 1000+ files are displayed.

app/vqa.py

0 → 100644

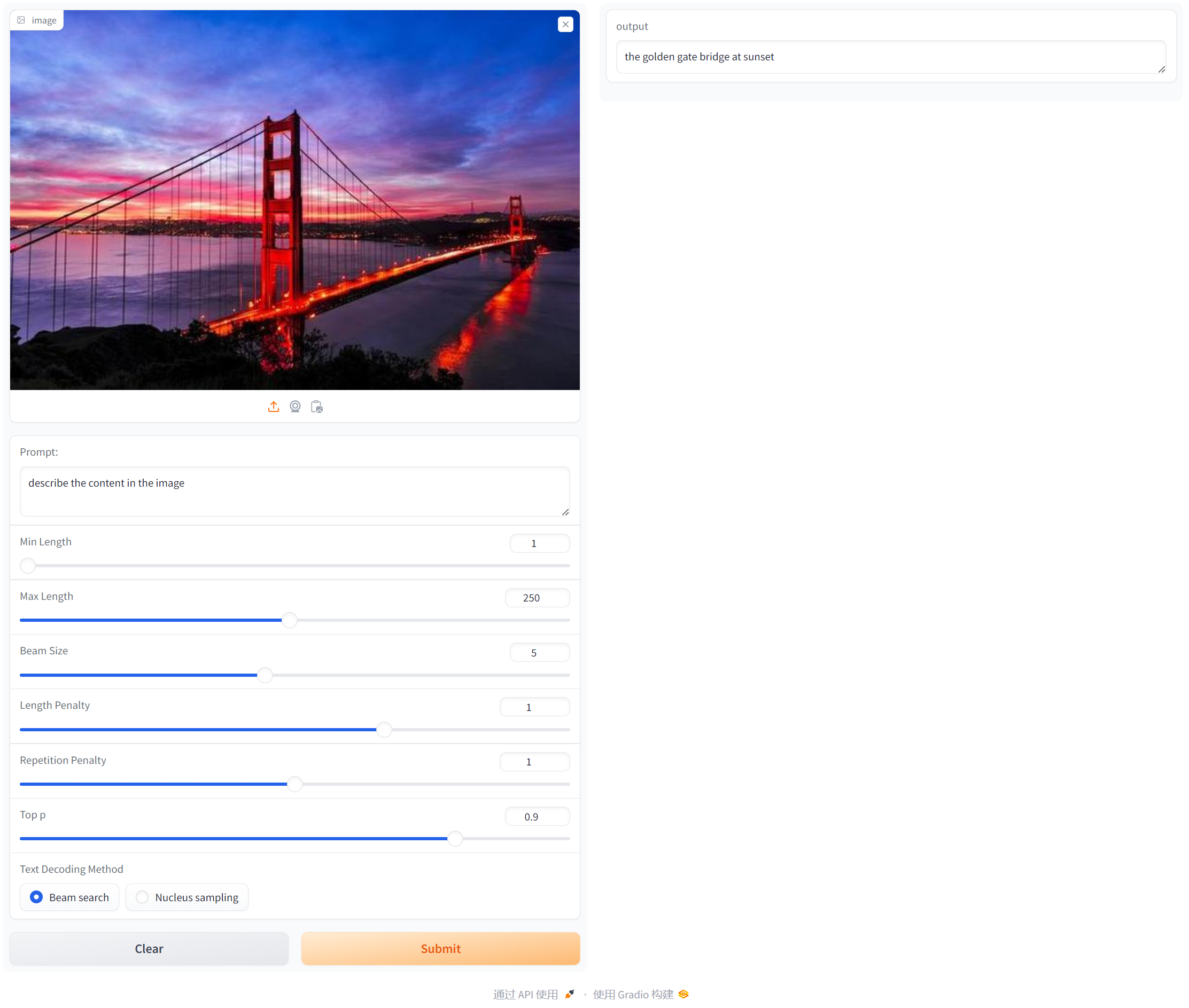

assets/BLIP.PNG

0 → 100644

480 KB

assets/BLIP2.PNG

0 → 100644

216 KB

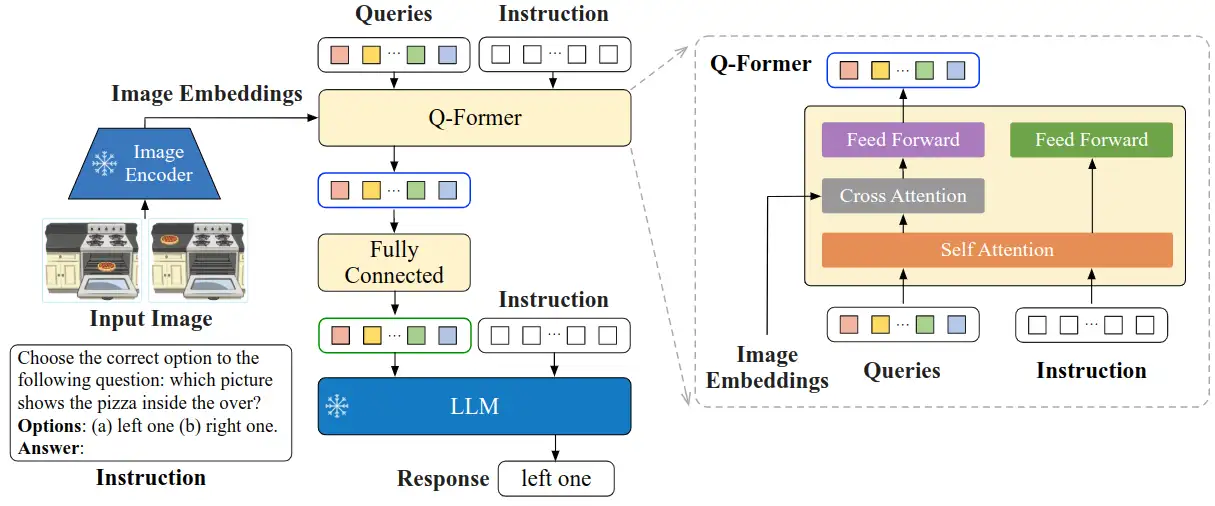

assets/InstructBLIP.png

0 → 100644

319 KB

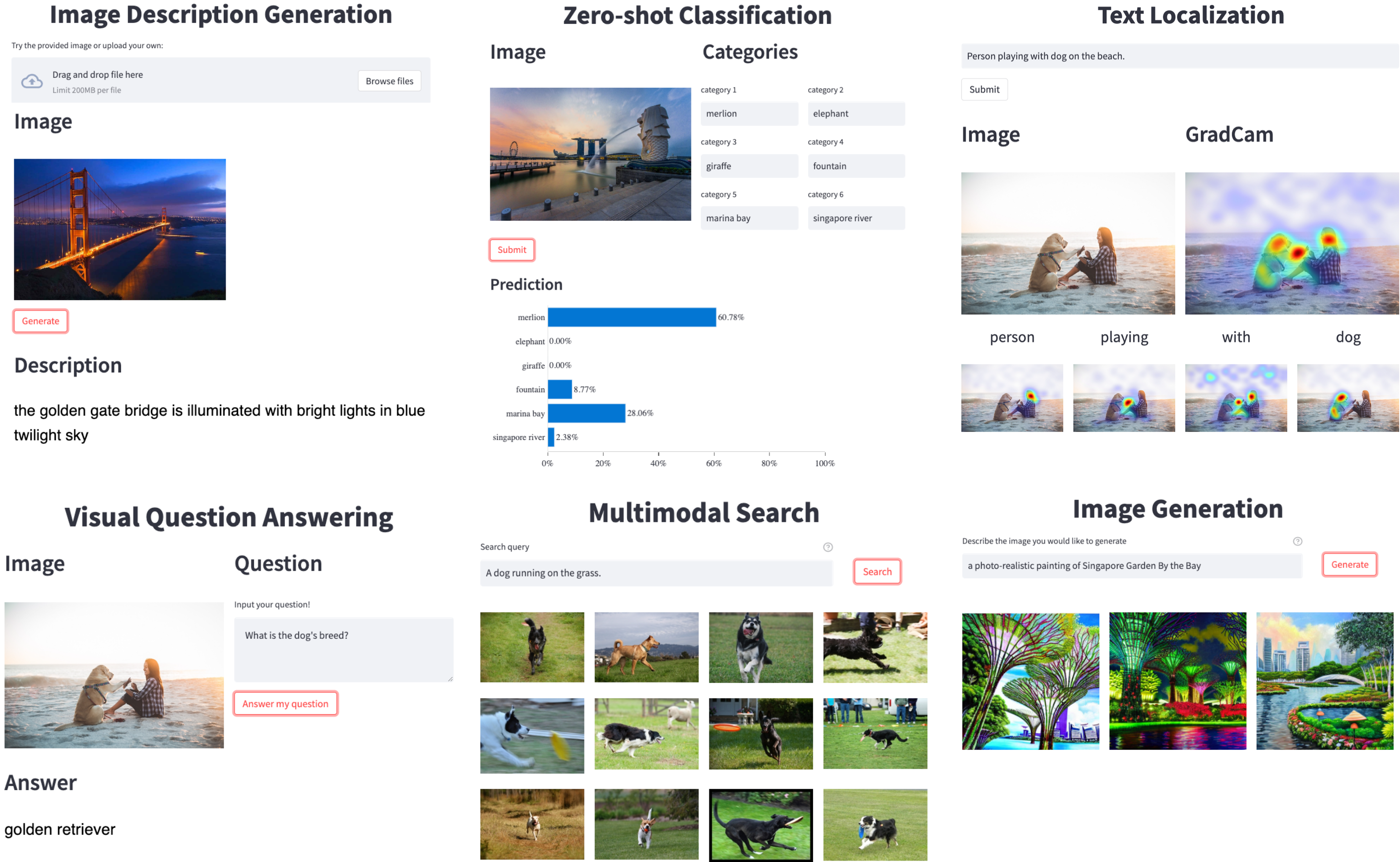

assets/demo-6.png

0 → 100644

2.98 MB

assets/demo.png

0 → 100644

860 KB

dataset_card/coco_caption.md

0 → 100644

dataset_card/gqa.md

0 → 100644

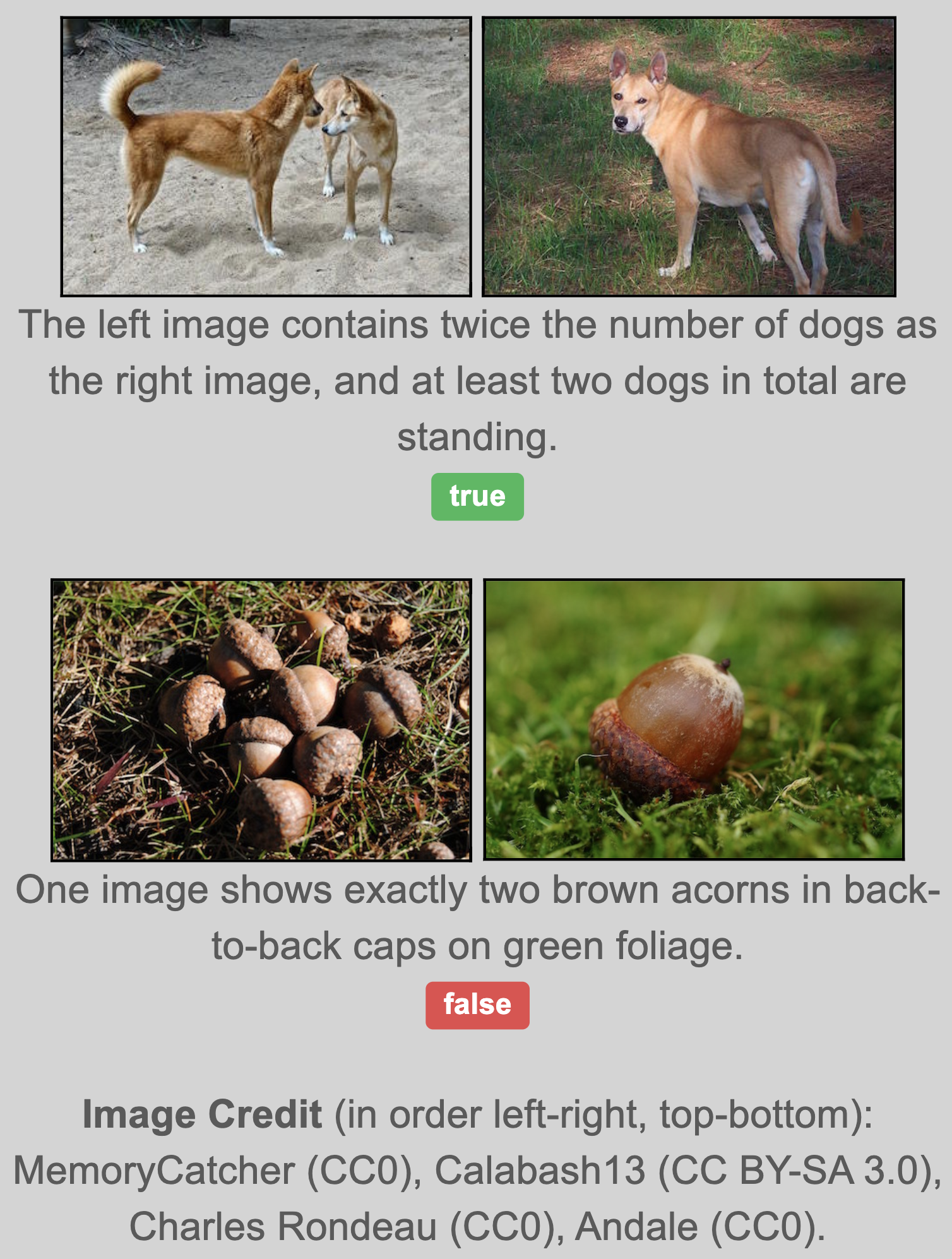

dataset_card/imgs/NLVR2.png

0 → 100644

2.37 MB

1.49 MB

2.34 MB

1.76 MB

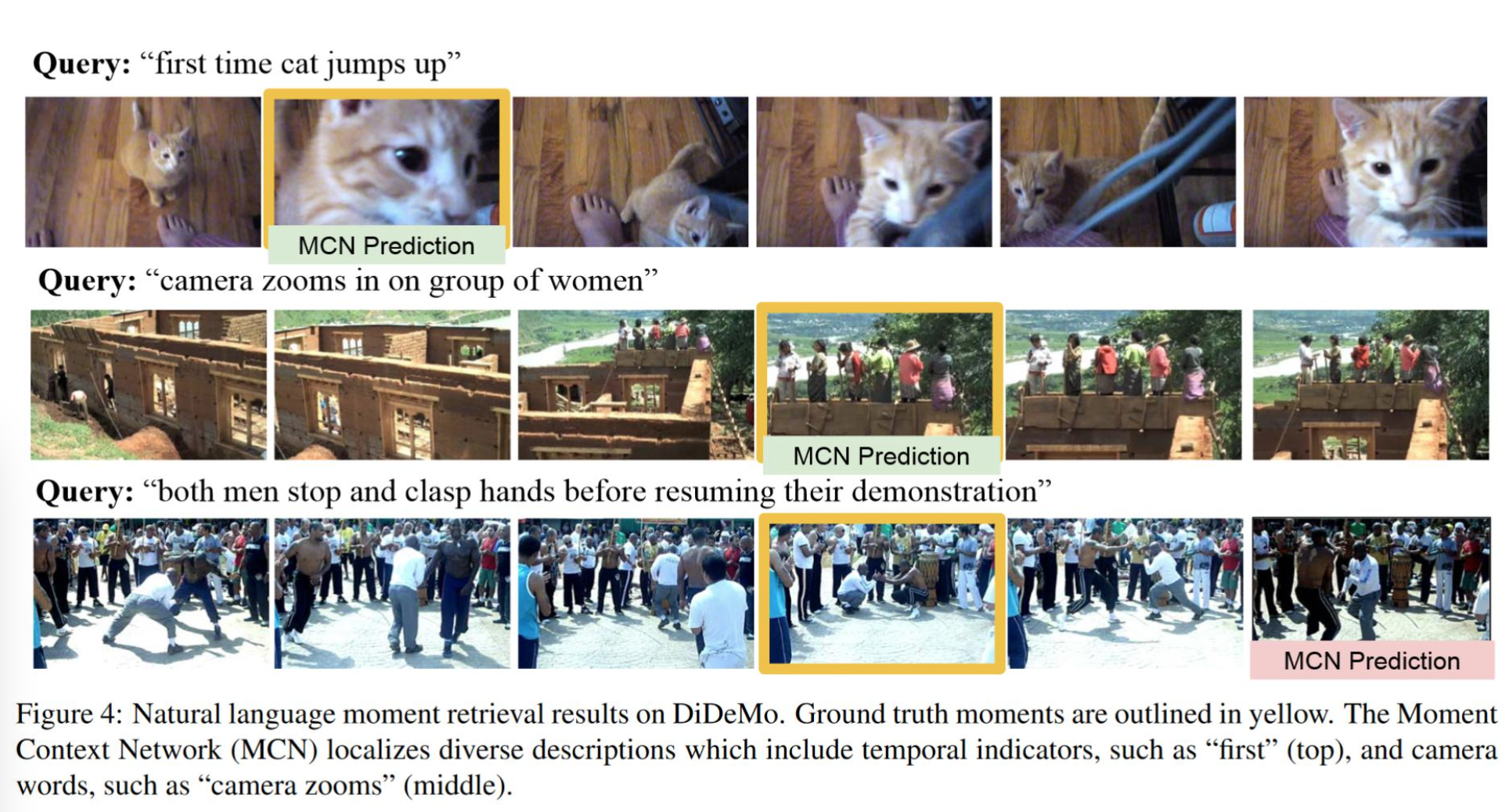

dataset_card/imgs/didemo.png

0 → 100644

2.51 MB

1.37 MB