Update code

data/train.json

0 → 100644

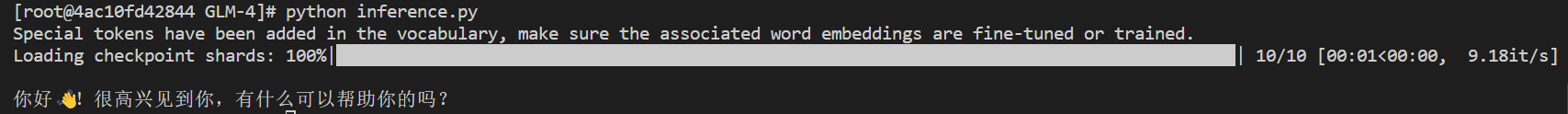

doc/result.png

0 → 100644

25 KB

finetune_demo/train.sh

0 → 100644

finetune_demo/train_dp.sh

0 → 100644

gen_messages_data.py

0 → 100644