bert-large training

evaluate-v1.1.py (new file, mode 100644)

extract_features.py (new file, mode 100644)

file_utils.py (new file, mode 100644)

icon.png (new file, mode 100644, 53.8 KB)

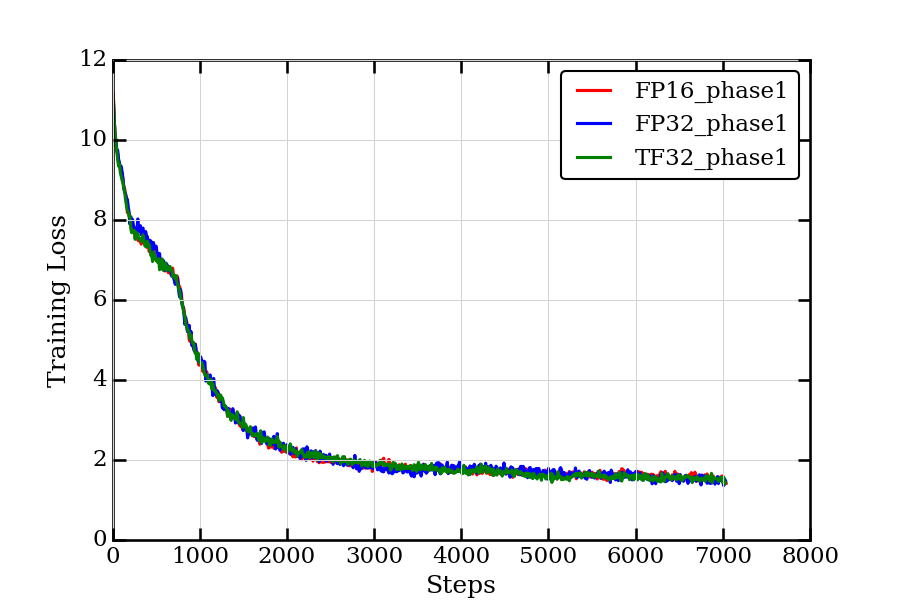

images/loss_curves.png (new file, mode 100644, 50.2 KB)

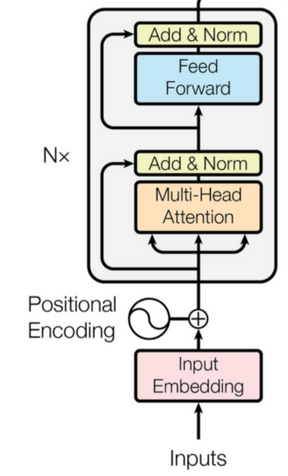

images/model.png (new file, mode 100644, 56.5 KB)

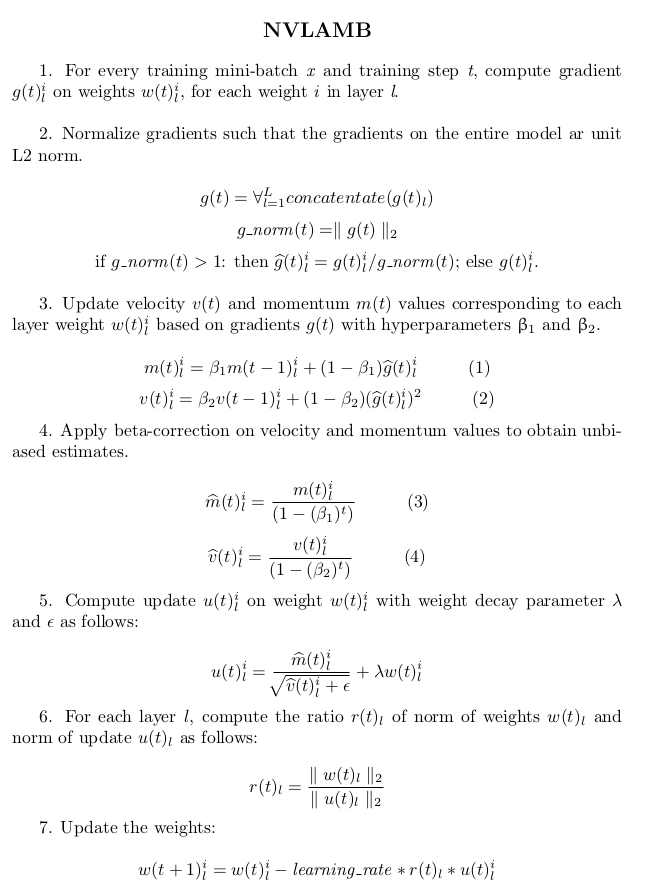

images/nvlamb.png (new file, mode 100644, 86.1 KB)

inference.py (new file, mode 100644)

log/results-squad-fp16.json (new file, mode 100644)

log/results.json (new file, mode 100644)

model.properties (new file, mode 100644)

modeling.py (new file, mode 100644)

optimization.py (new file, mode 100644)

output/dllogger.json (new file, mode 100644)

processors/__init__.py (new file, mode 100644)

processors/glue.py (new file, mode 100644)

requirements.txt (new file, mode 100644)