Merge pull request #199 from microsoft/master

merge master

Showing

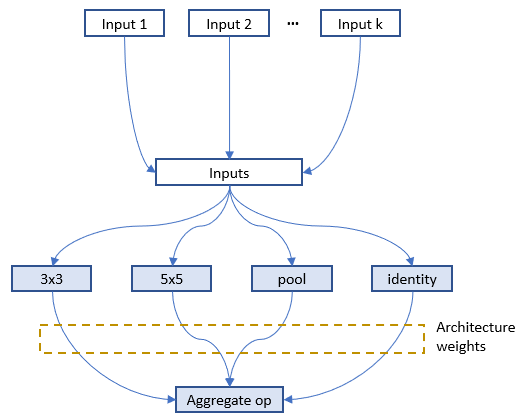

docs/img/darts_mode.png (new file, mode 0 → 100644, 13.5 KB)

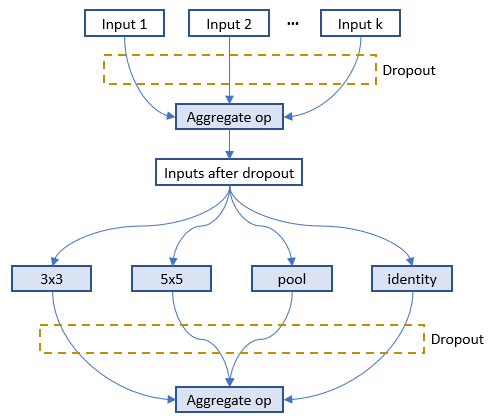

docs/img/oneshot_mode.png (new file, mode 0 → 100644, 14.3 KB)