[major] add flux.1-redux; update the flux.1-tools demos

app/flux.1/fill/README.md

0 → 100644

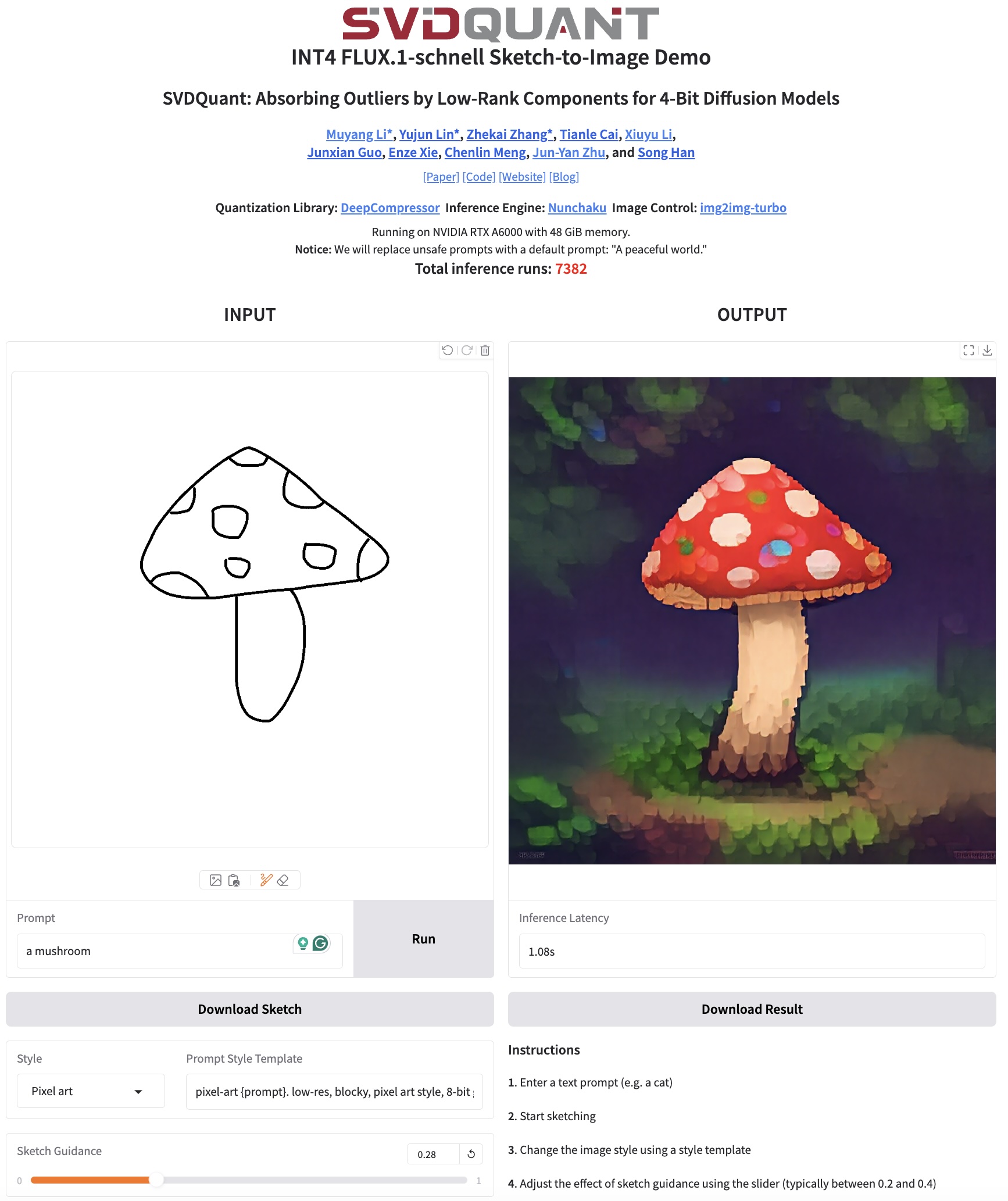

app/flux.1/sketch/README.md

0 → 100644

File moved