

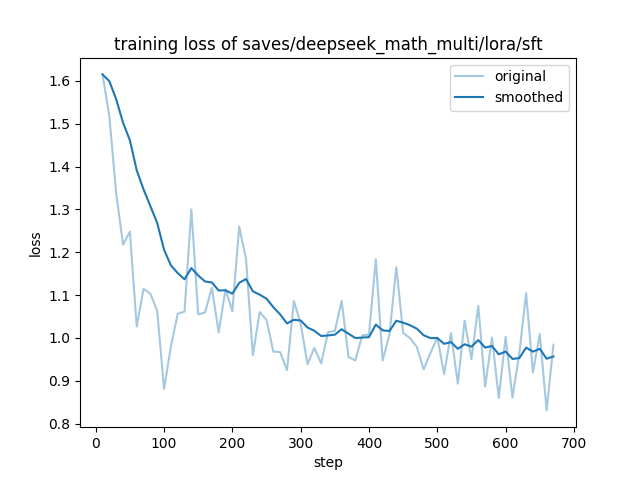

Update inference/7B_single_dcu.py, result/all_results.json, result/training_loss.png, result/train_results.json files

Changed files (all newly added, file mode 0 → 100644):

- inference/7B_single_dcu.py
- result/all_results.json
- result/train_results.json
- result/training_loss.png (48.1 KB)