Merging megatron with ICT

Showing

.gitlab-ci.yml

0 → 100644

This diff is collapsed.

File moved

images/cases.png

deleted

100644 → 0

11.5 KB

images/cases_jan2021.png

0 → 100644

150 KB

13.1 KB

22.3 KB

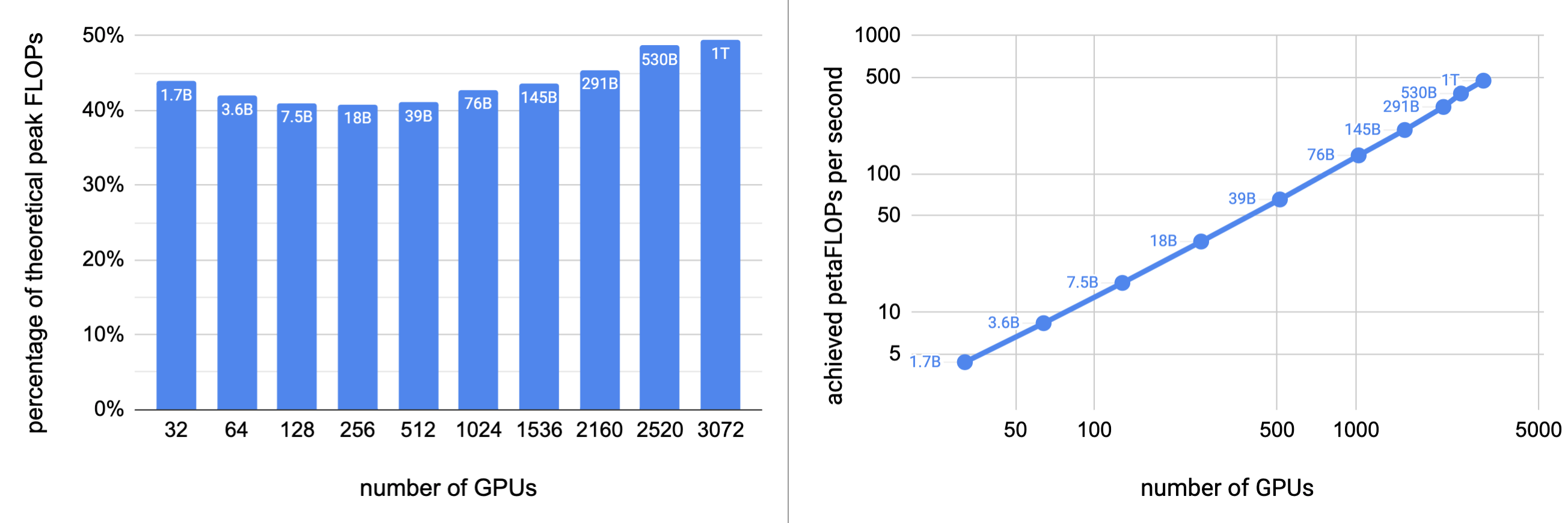

images/scaling.png

0 → 100644

280 KB

This diff is collapsed.