Merge remote-tracking branch 'upstream/dygraph' into dy3

doc/doc_en/inference_en.md

100644 → 100755

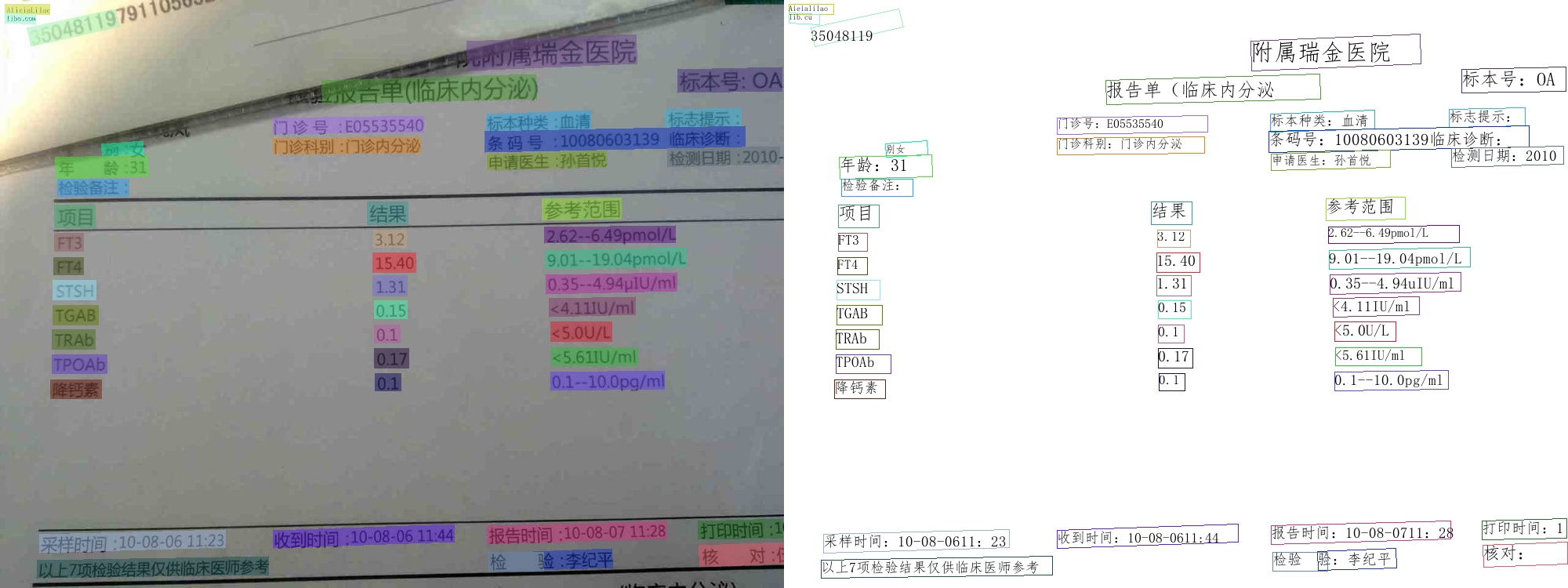
