Merge branch 'dygraph' of https://github.com/PaddlePaddle/PaddleOCR into dygraph

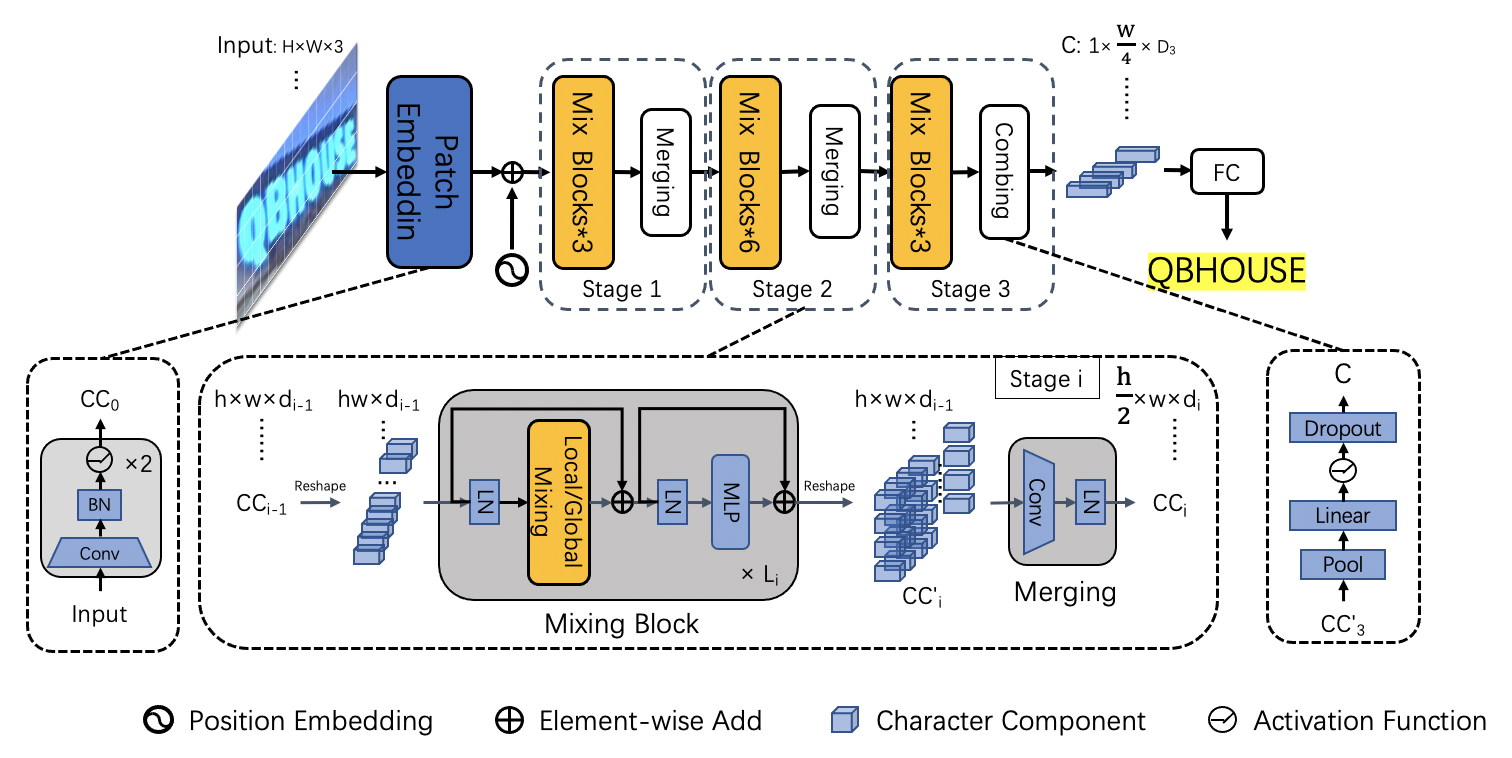

doc/ppocr_v3/svtr_g4.png

0 → 100644

550 KB

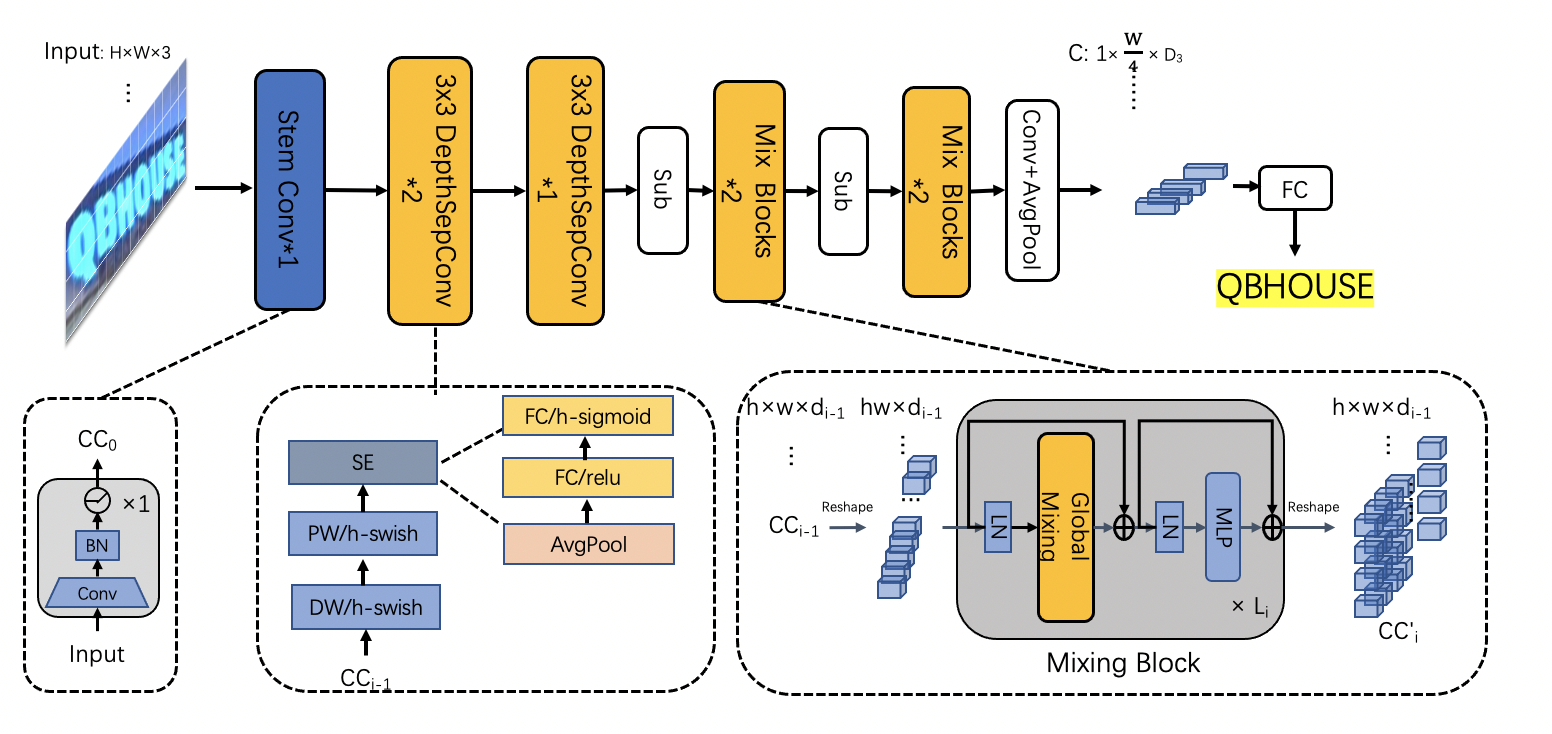

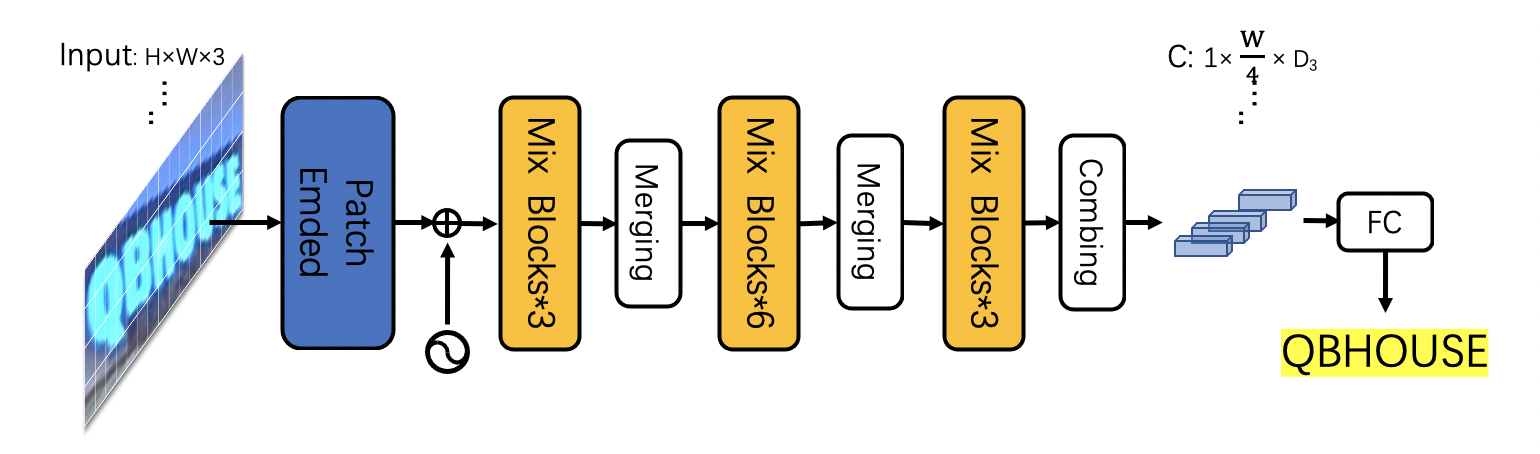

doc/ppocr_v3/svtr_tiny.jpg

0 → 100644

324 KB

doc/ppocr_v3/svtr_tiny.png

0 → 100644

586 KB

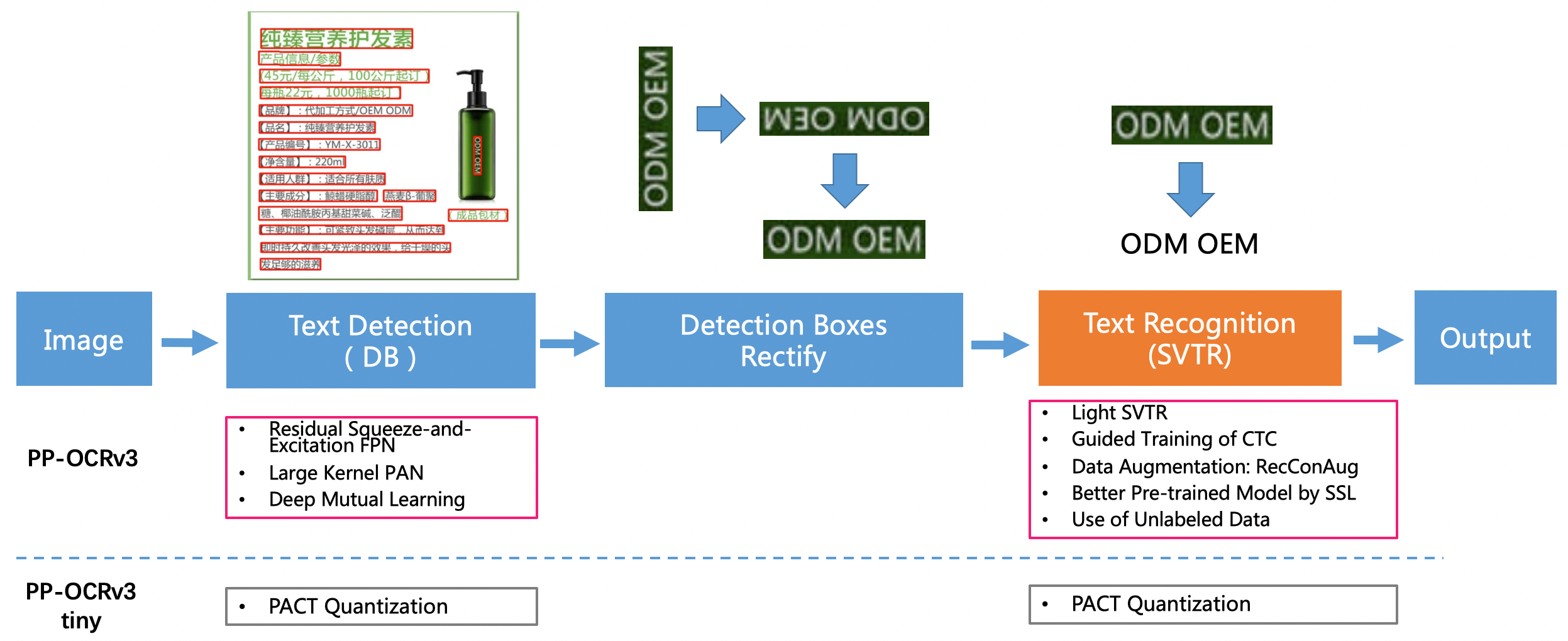

doc/ppocrv3_framework.png

0 → 100644

957 KB