Merge branch 'dev' into multi_gpu_v2

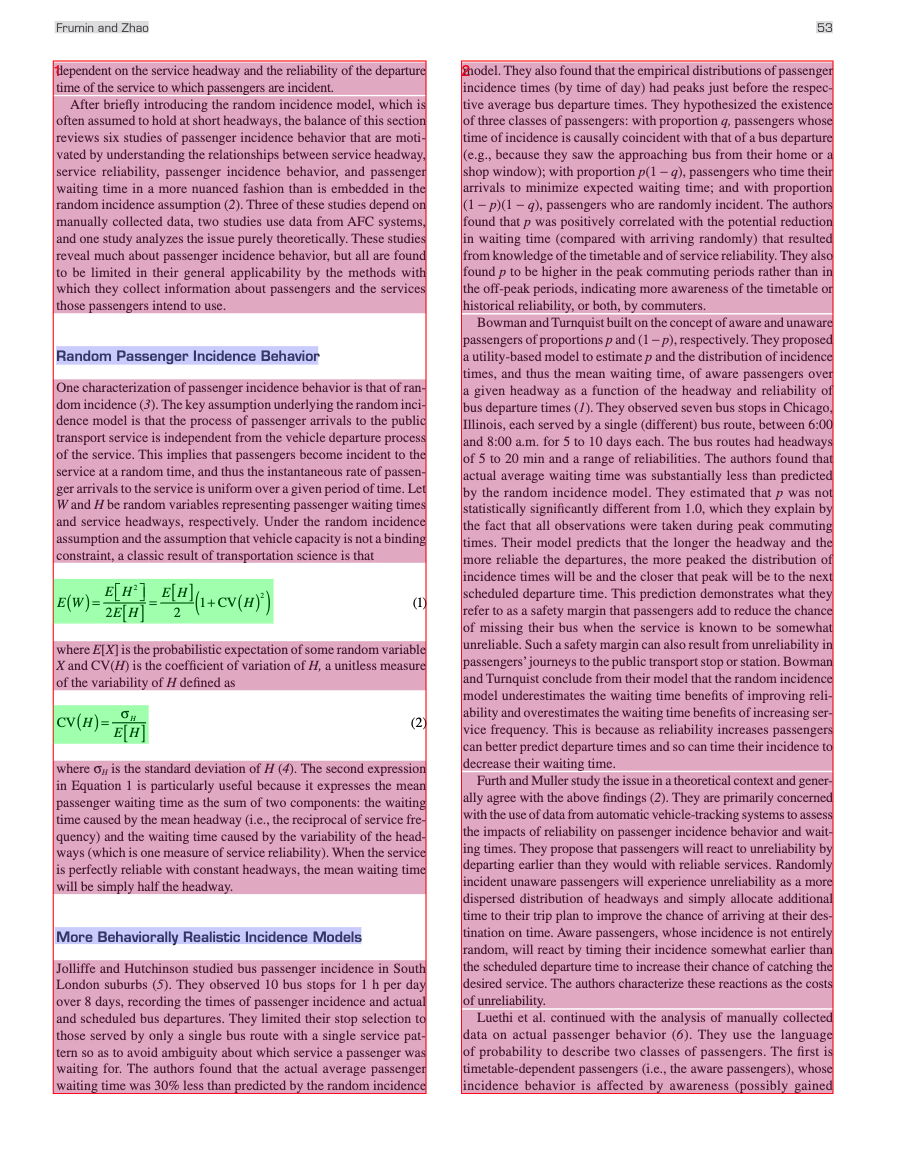

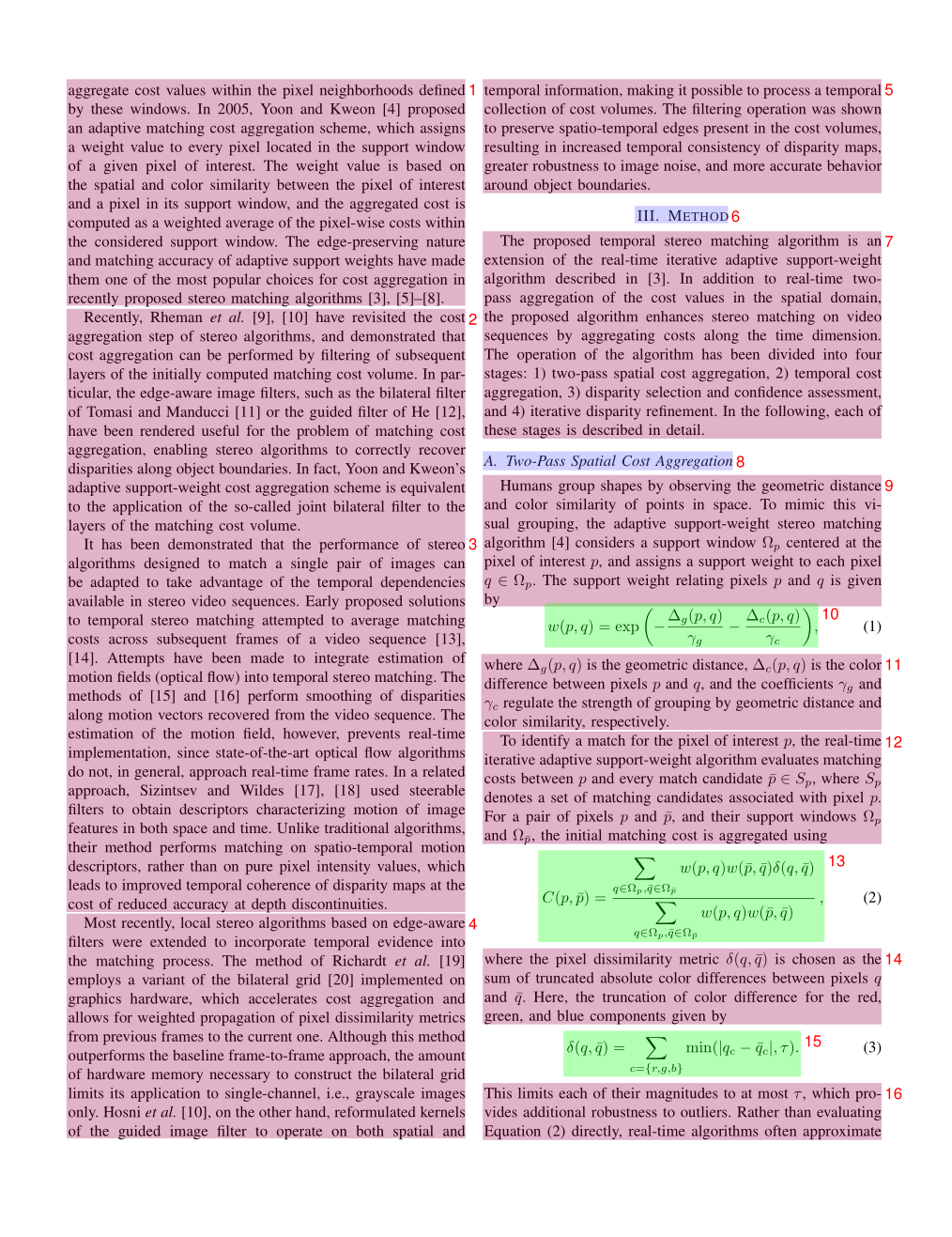

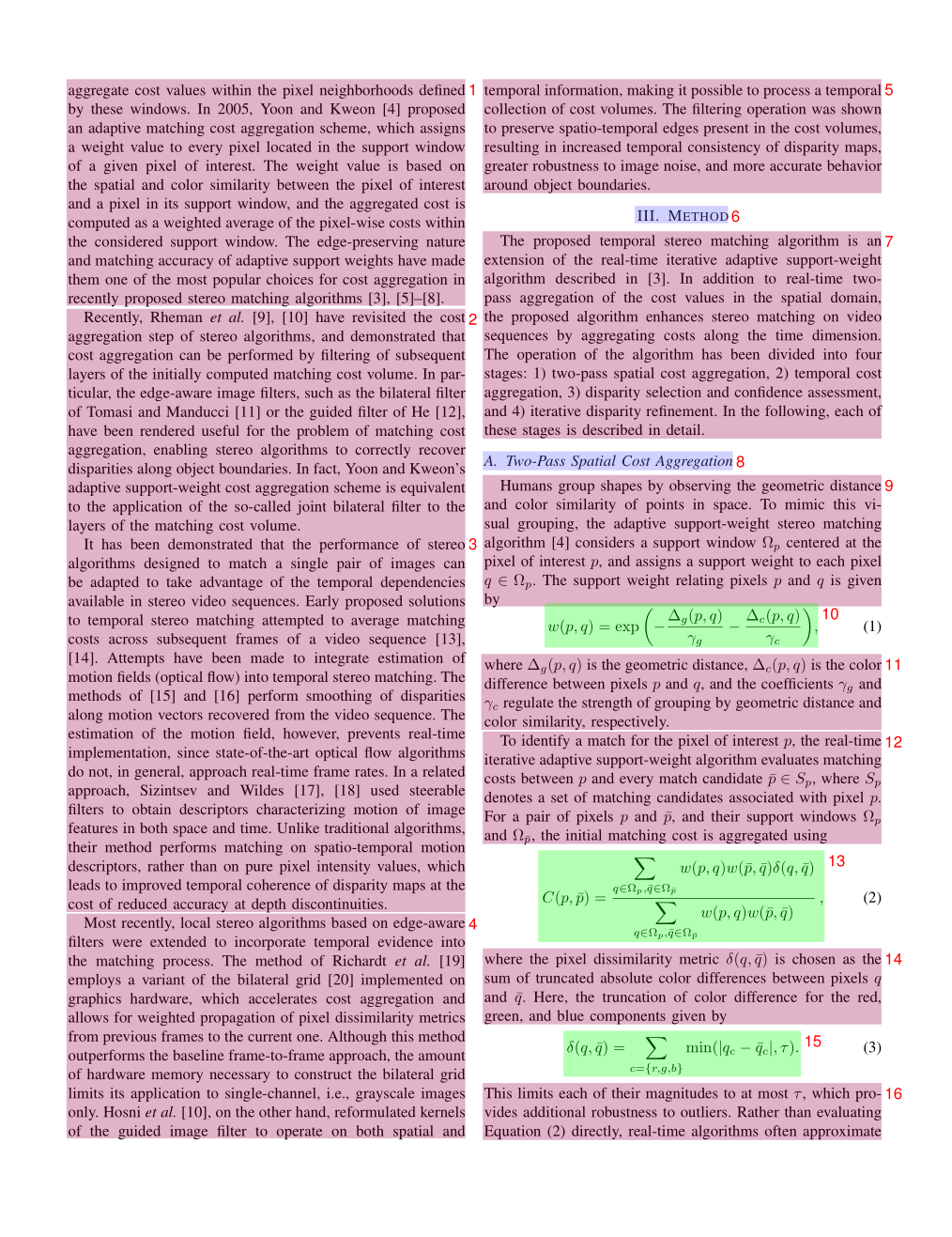

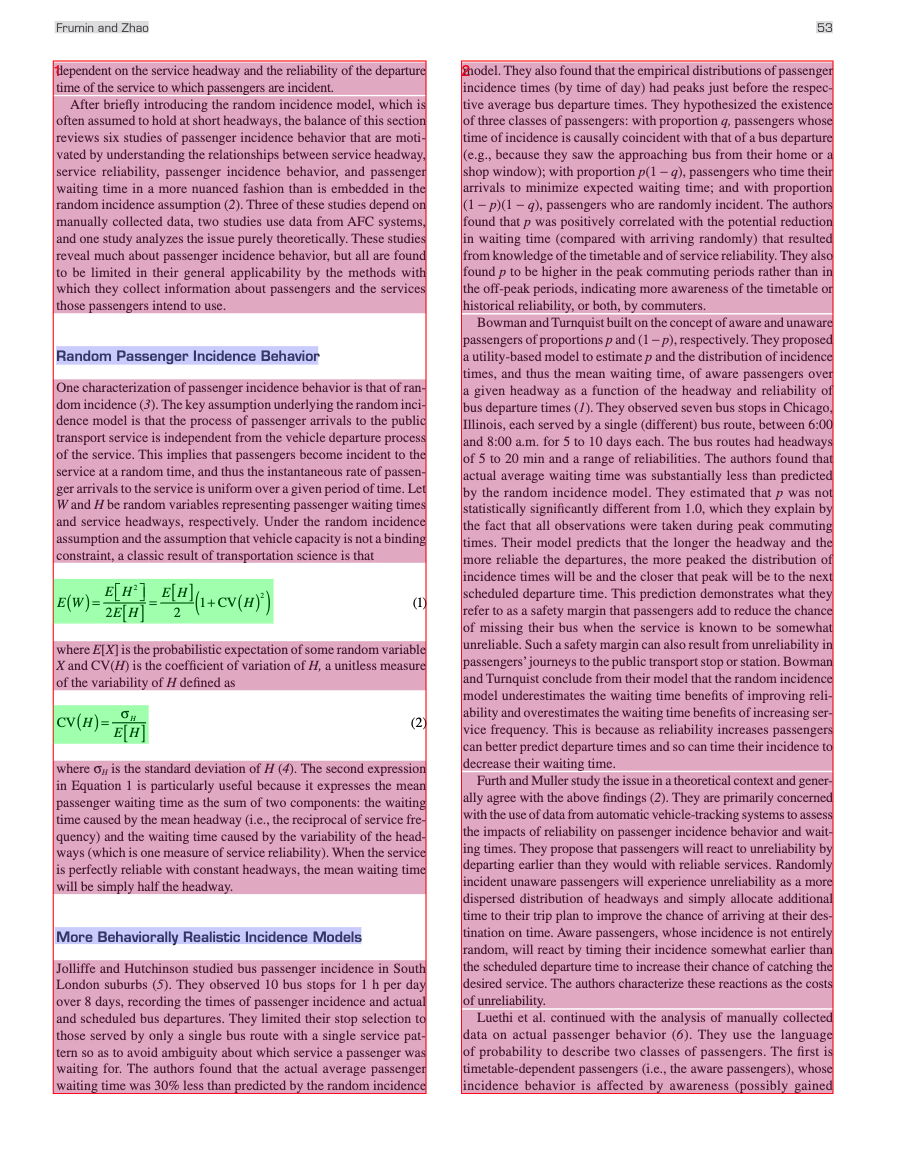

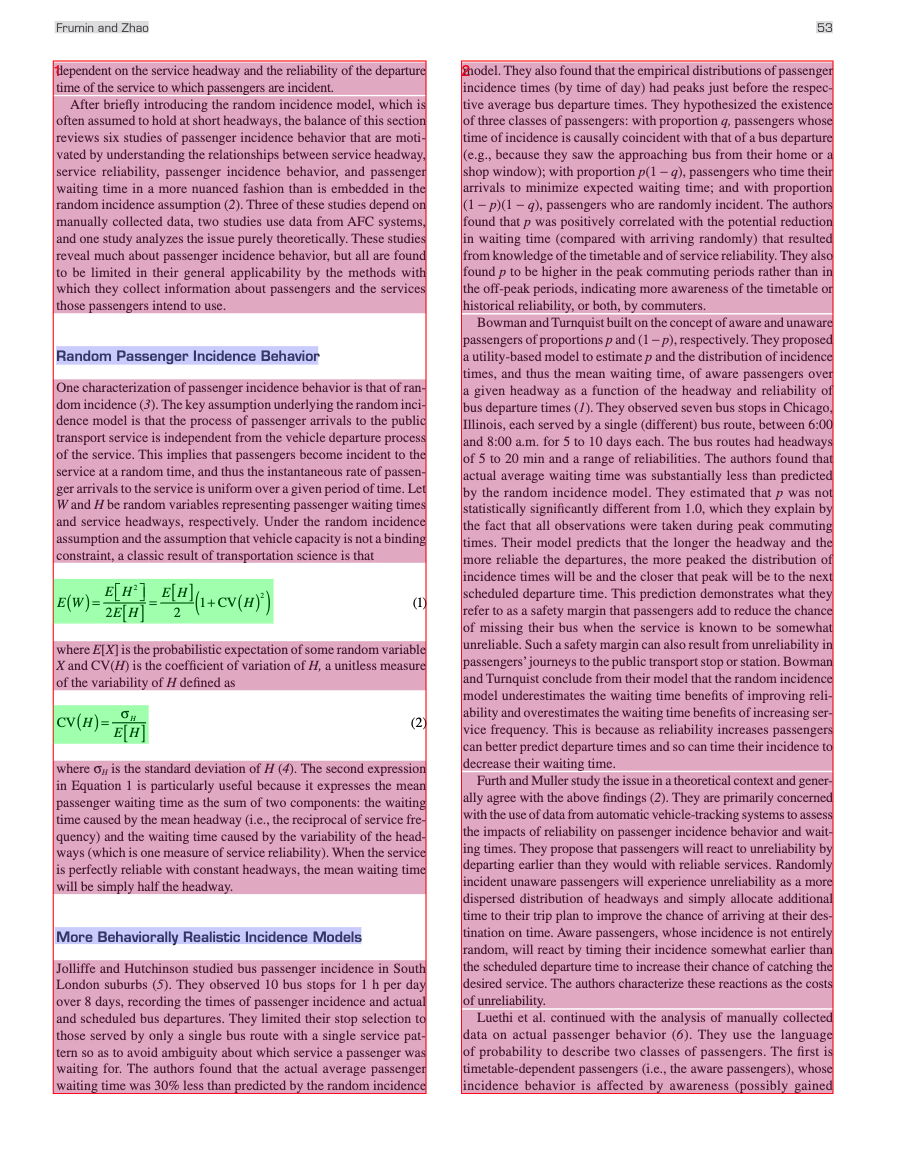

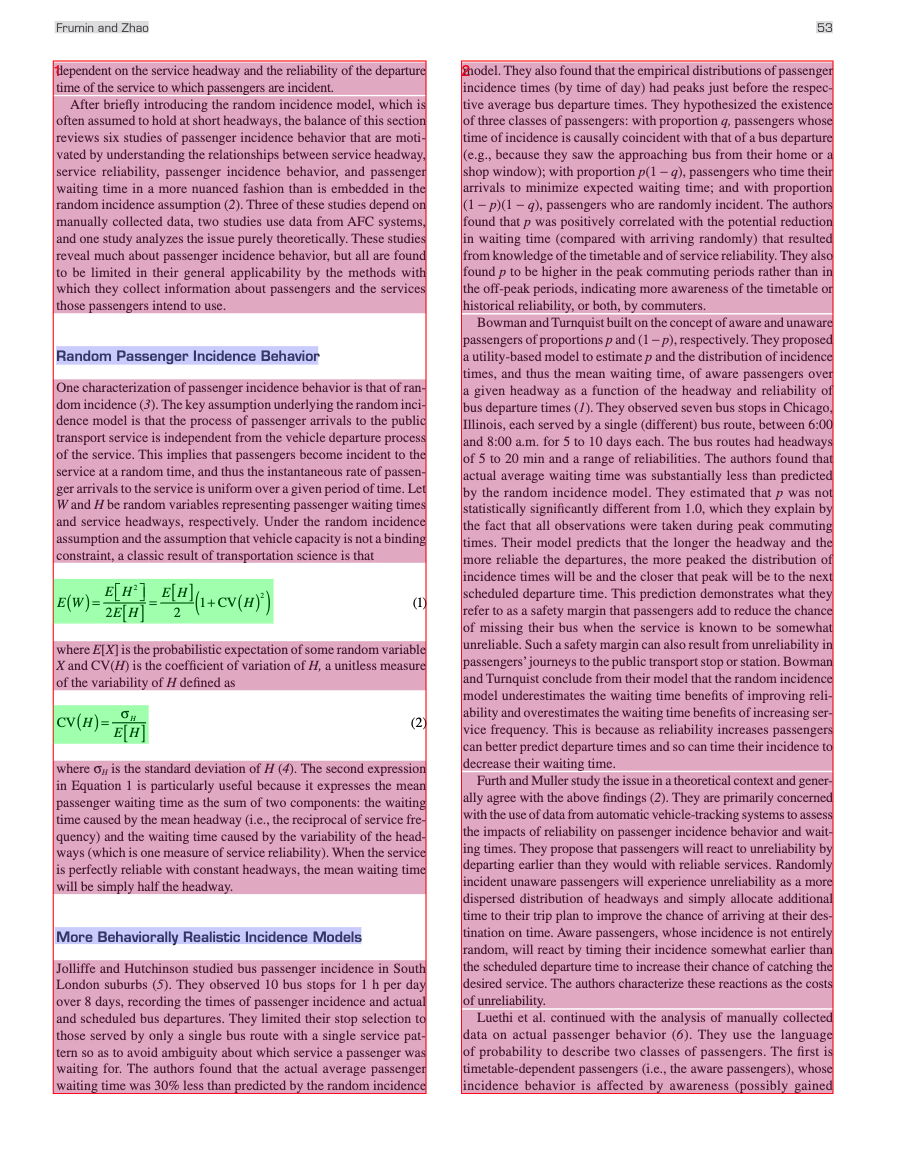

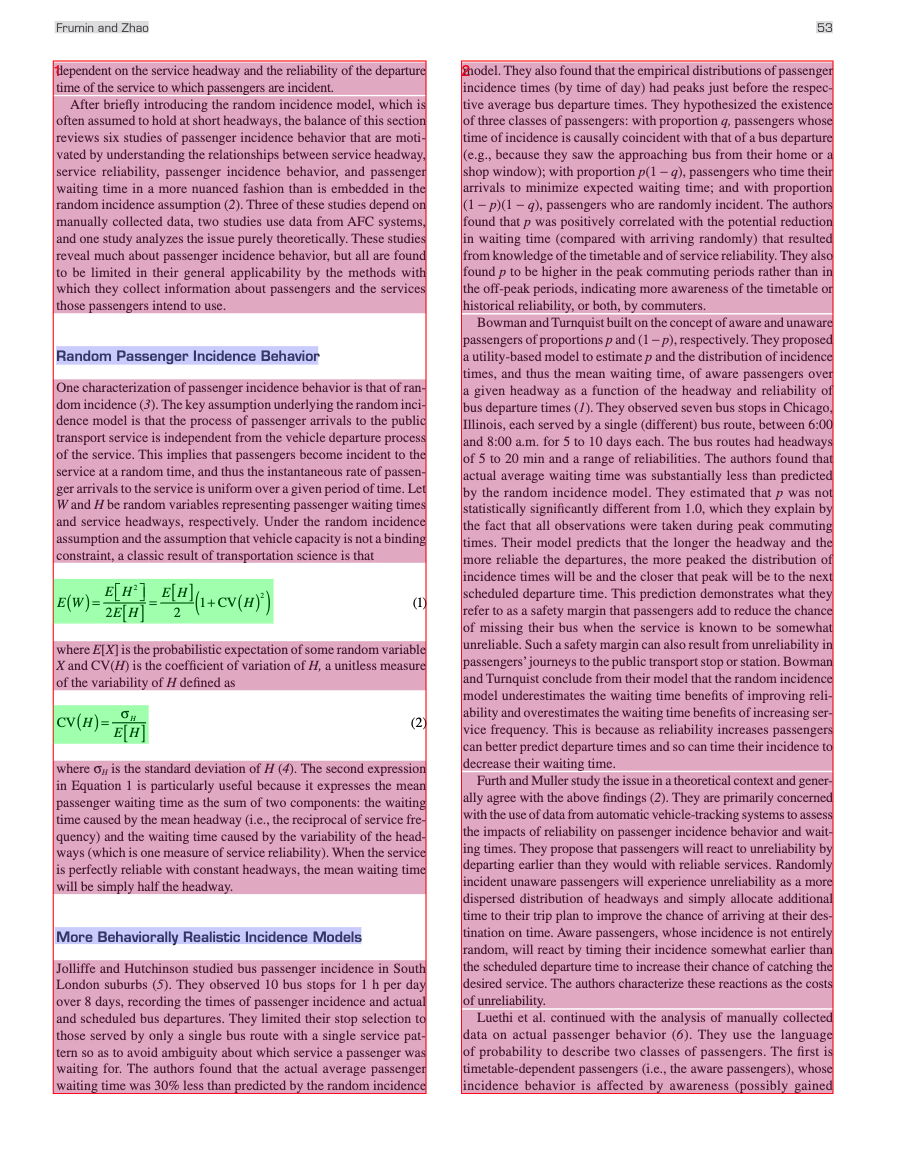

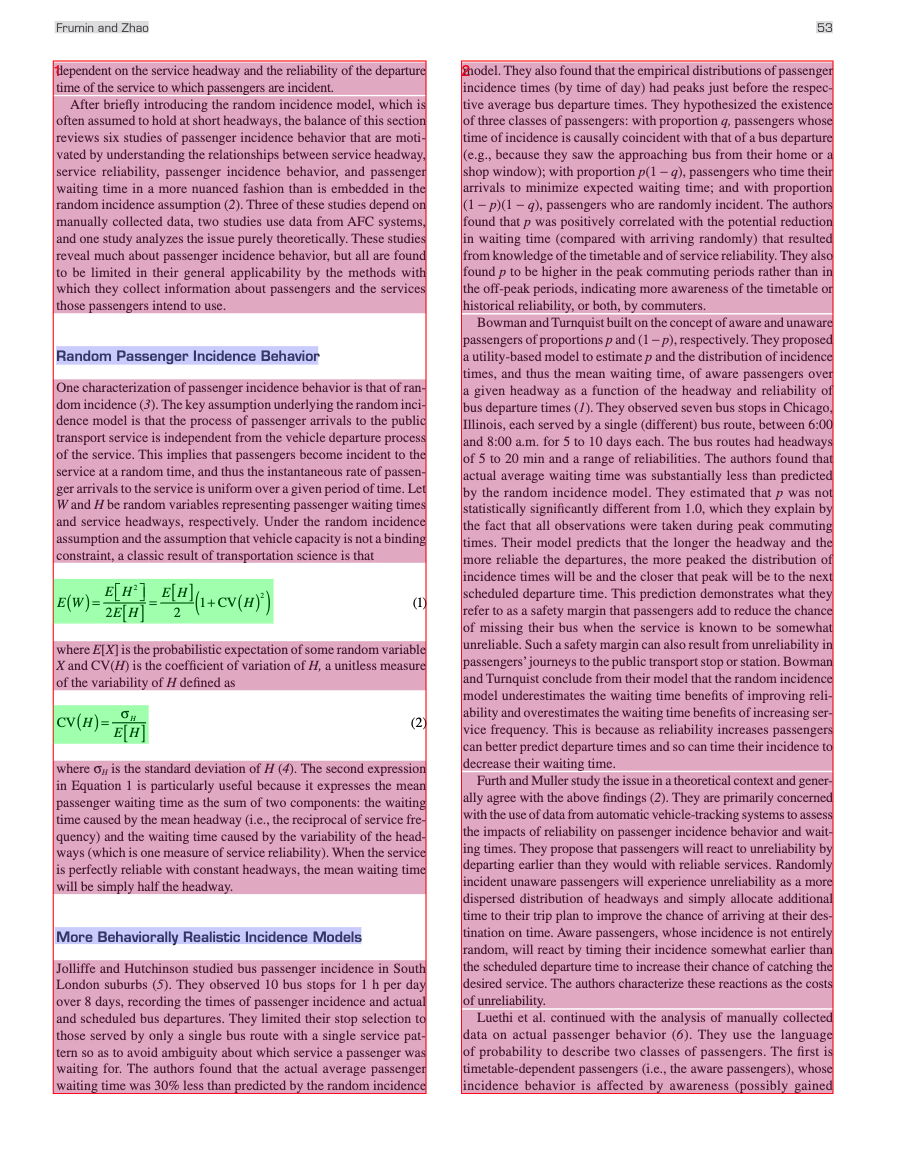
