Add results images and infer_hf.py

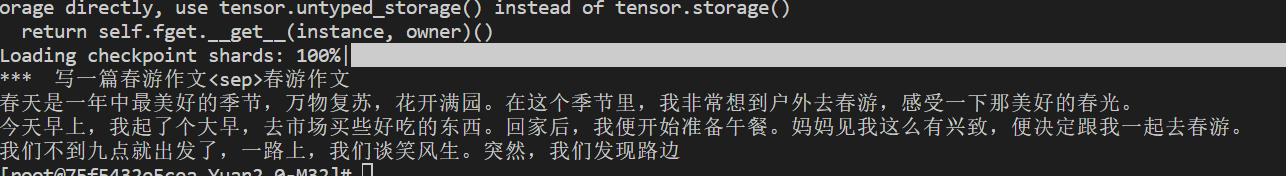

doc/result.png

0 → 100644

61.4 KB

infer_hf.py

0 → 100644

requirements.txt

0 → 100644

+accelerate
\ No newline at end of file
