first init

model/yolov8n.pt

0 → 100644

File added

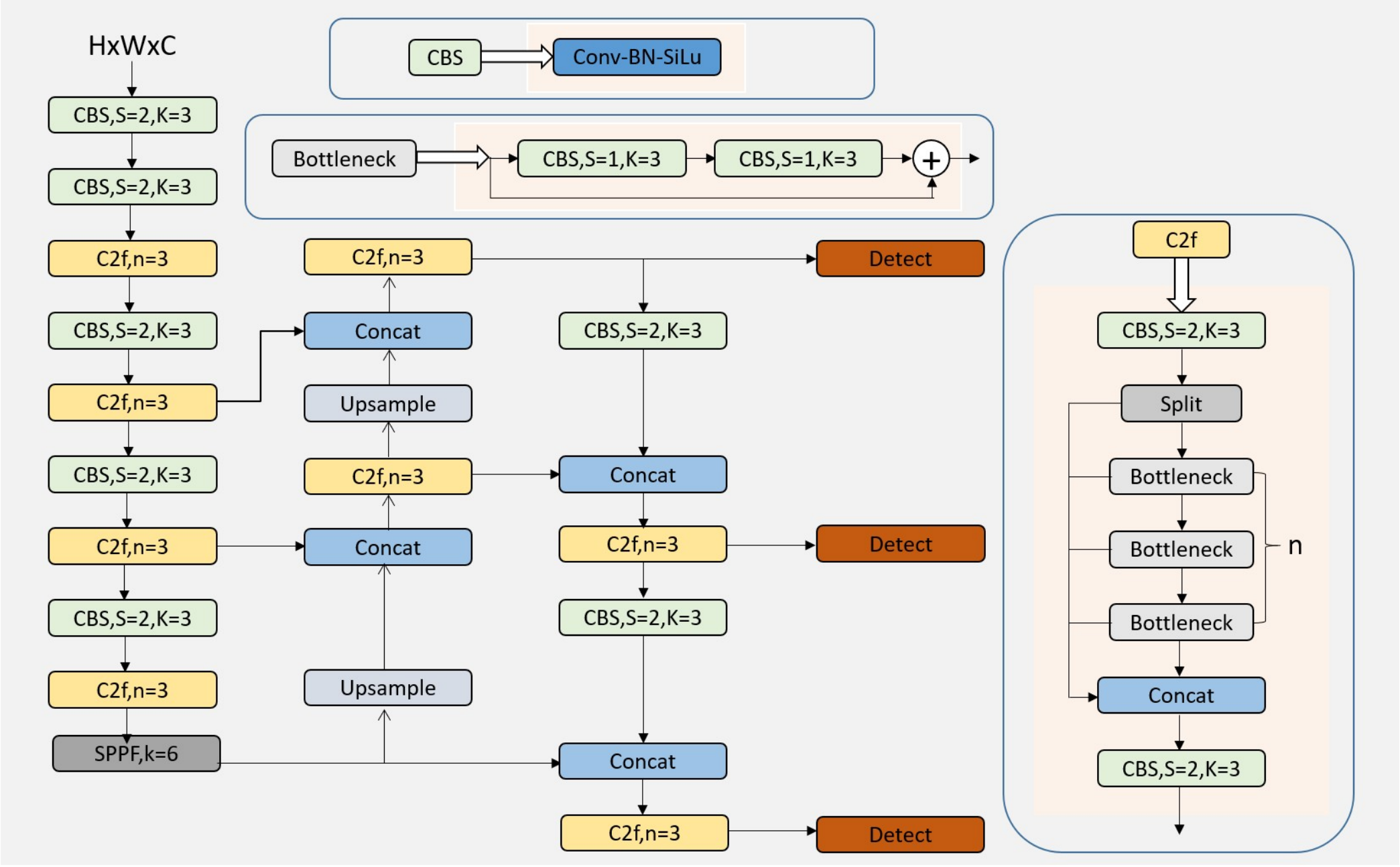

model_framework.png

0 → 100644

883 KB

src/main.cpp

0 → 100644

src/utils.cpp

0 → 100644

src/yolov8Predictor.cpp

0 → 100644