Add YOLOv7 C++ inference example

.gitignore

0 → 100644

3rdParty/InstallRBuild.sh

0 → 100644

CMakeLists.txt

0 → 100644

Python/requirements.txt

0 → 100644

Resource/Configuration.xml

0 → 100644

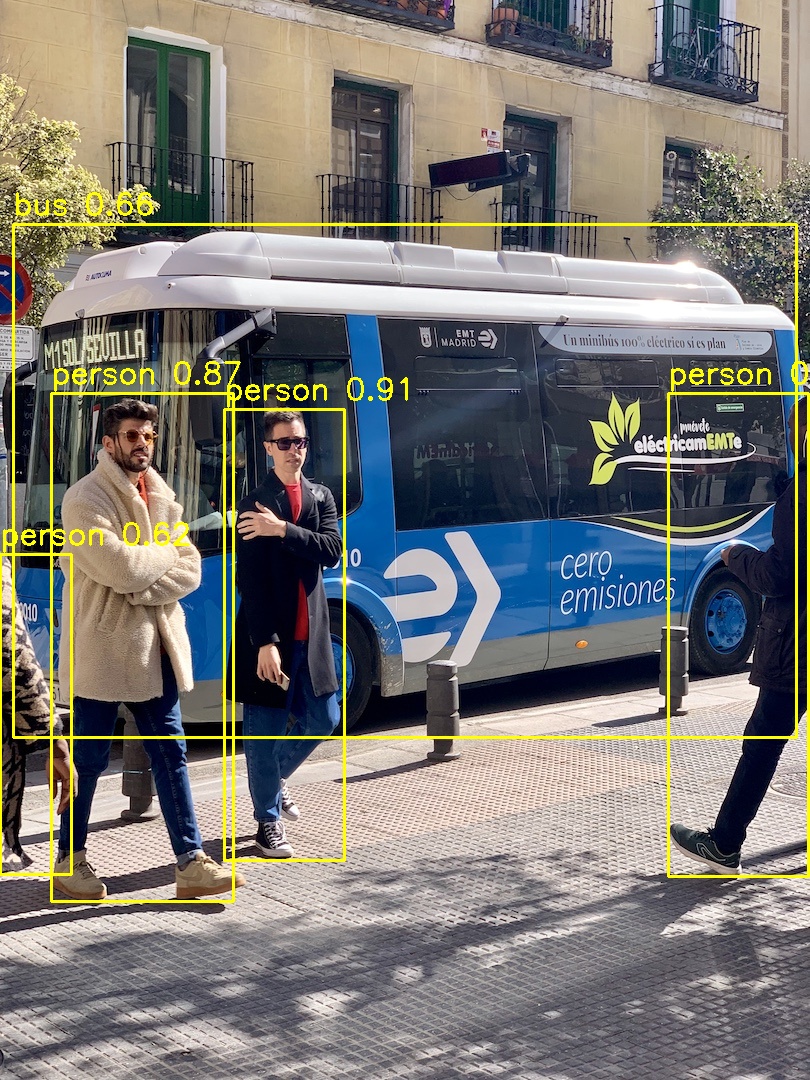

Resource/Images/Result.jpg

0 → 100644

489 KB
