Add YOLOv7 inference example


YoloV7_infer_migraphx.py

0 → 100644

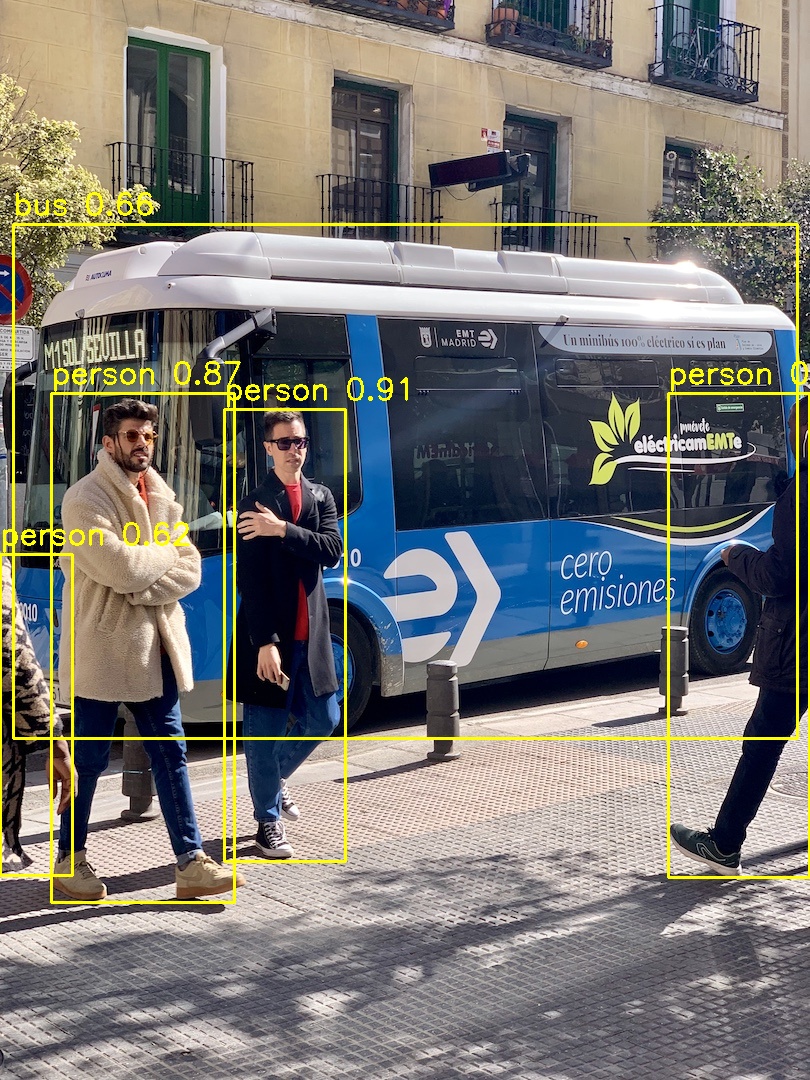

images/Result.jpg

0 → 100644

489 KB

images/bus.jpg

0 → 100644

476 KB

requirements.txt

0 → 100644

opencv-contrib-python

numpy

os

argparse

time

\ No newline at end of file

weights/coco.names

0 → 100644

weights/yolov7-tiny.onnx

0 → 100644

File added