update yolov5s_tvm

Makefile: 0 → 100755

README.md: 0 → 100755

cow.jpg: 0 → 100644, 278 KB

lib/libtvm_runtime_pack.o: 0 → 100644, file added

lib/yolov5s_deploy: 0 → 100755, file added

model.properties: 0 → 100644

prepare_test_libs.py: 0 → 100755

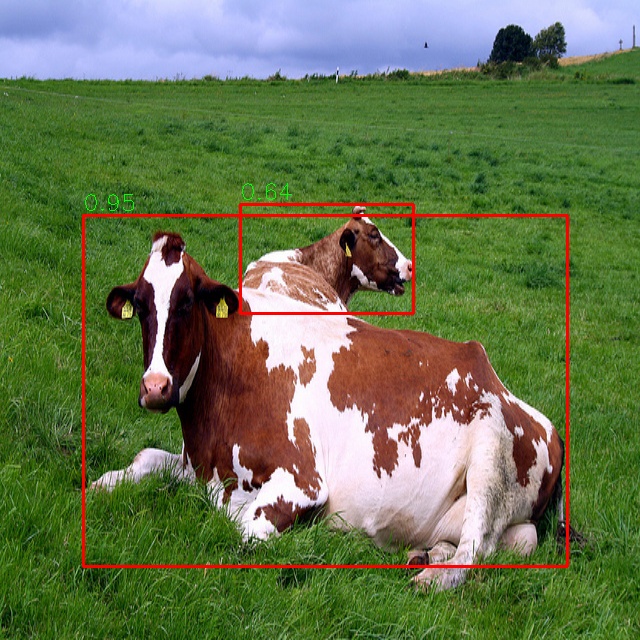

result.jpg: 0 → 100644, 221 KB

run_example.sh: 0 → 100755

tvm_runtime_pack.cc: 0 → 100755

yolov5s_deploy.cc: 0 → 100755

yolov5s_infer.py: 0 → 100755

yolov5s_pred_utils.py: 0 → 100755