init

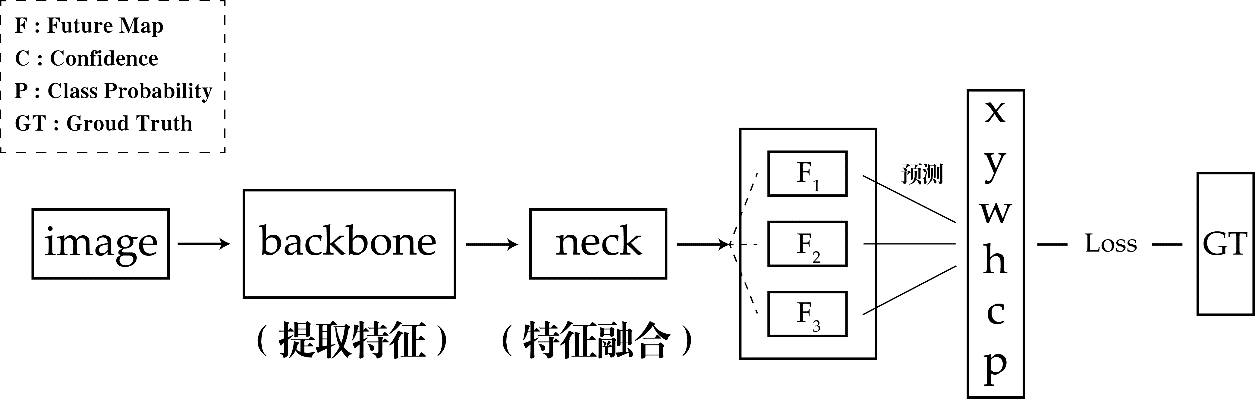

Algorithm_principle.png

0 → 100644

32.2 KB

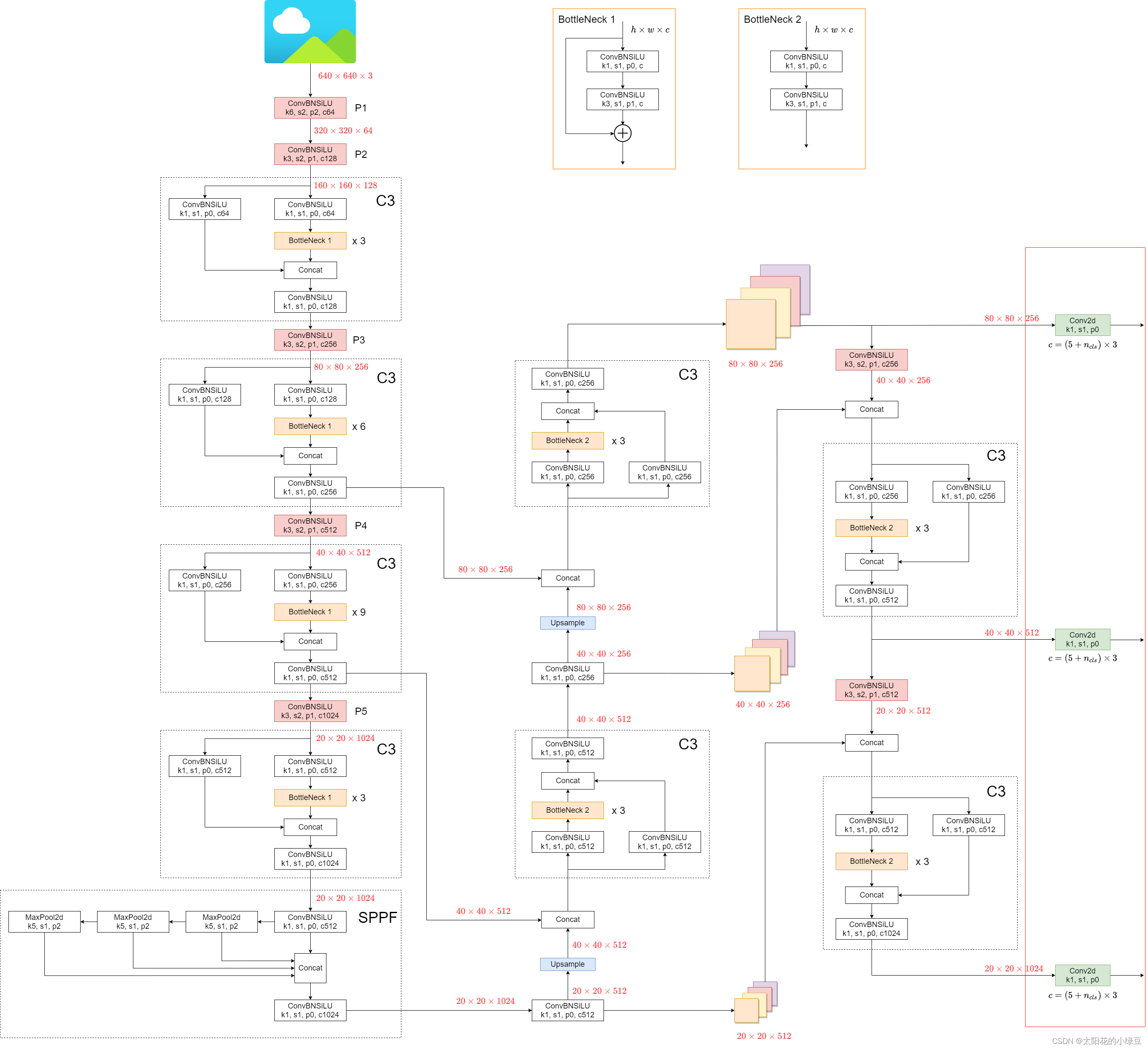

Backbone.png

0 → 100644

379 KB

CMakeLists.txt

0 → 100644

README.md

0 → 100644

Resource/Images/bear.jpg

0 → 100644

368 KB

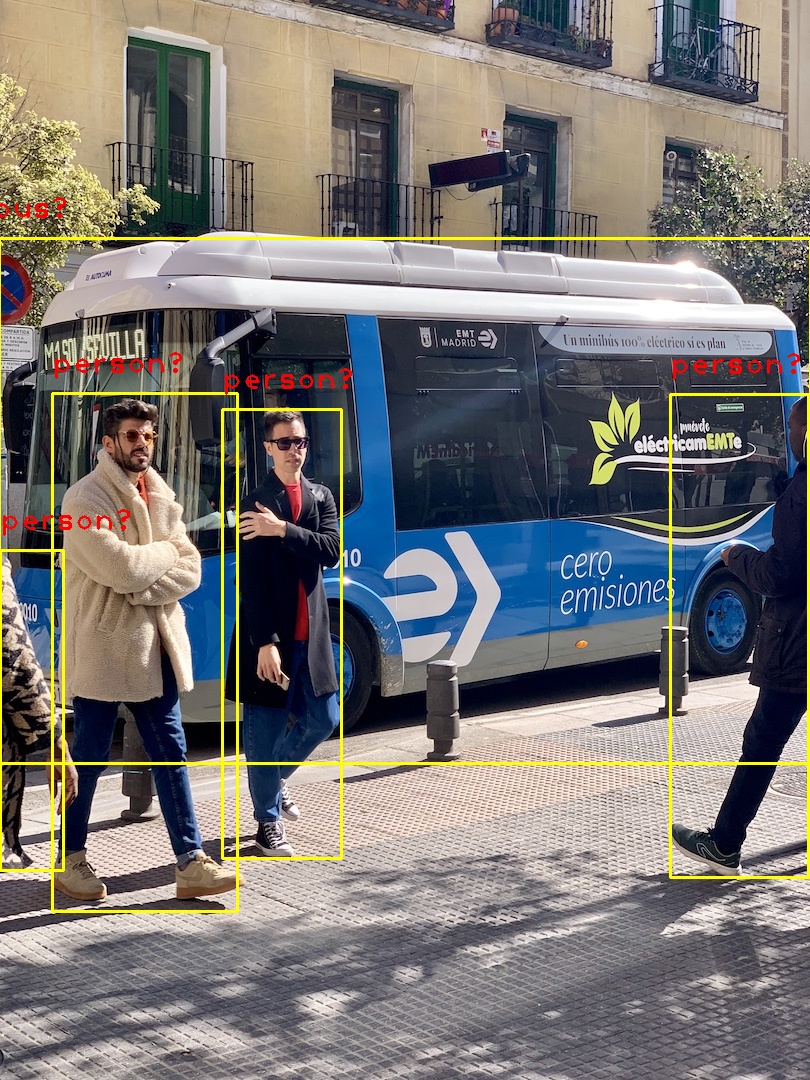

Resource/Images/bus.jpg

0 → 100644

476 KB

Resource/Images/cat.jpg

0 → 100644

137 KB

Resource/Images/dog.jpg

0 → 100644

217 KB

File added

bus.jpg

0 → 100644

481 KB

icon.png

0 → 100644

77.3 KB

include/CommonUtils.h

0 → 100644

include/DetectorYOLOV5.h

0 → 100644

include/main.h

0 → 100644

lib/libQueue.so

0 → 100644

File added

lib/libdecode.so

0 → 100644

File added

model.properties

0 → 100644

src/CommonUtils.cpp

0 → 100644

src/DetectorYOLOV5.cpp

0 → 100644