update code

Too many changes to show.

To preserve performance only 323 of 323+ files are displayed.

docker/Dockerfile

0 → 100644

docker/requirements.txt

0 → 100644

docs/CHANGELOG.md

0 → 100644

docs/CHANGELOG_en.md

0 → 100644

docs/MODEL_ZOO_cn.md

0 → 100644

docs/MODEL_ZOO_en.md

0 → 100644

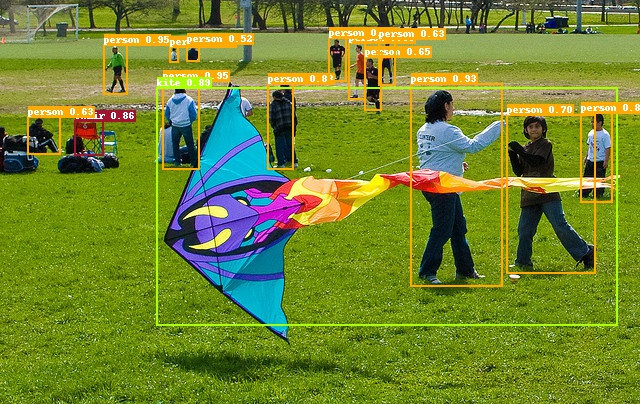

docs/images/000000014439.jpg

0 → 100644

203 KB

491 KB

168 KB

191 KB

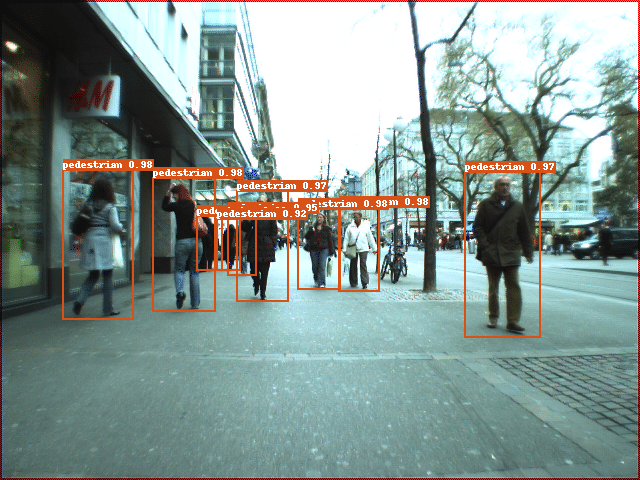

docs/images/bus.jpg

0 → 100644

479 KB

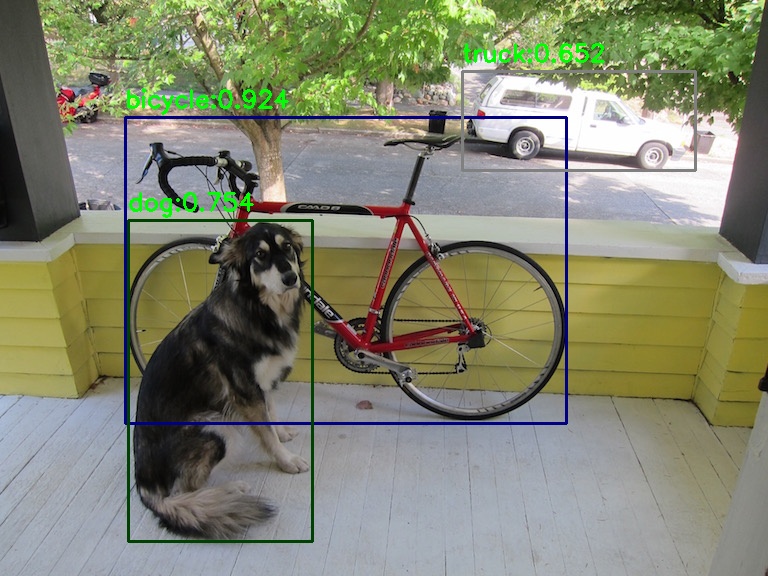

docs/images/dog.jpg

0 → 100644

181 KB