Simplify code

Doc/Tutorial_Cpp.md

0 → 100644

Doc/Tutorial_Python.md

0 → 100644

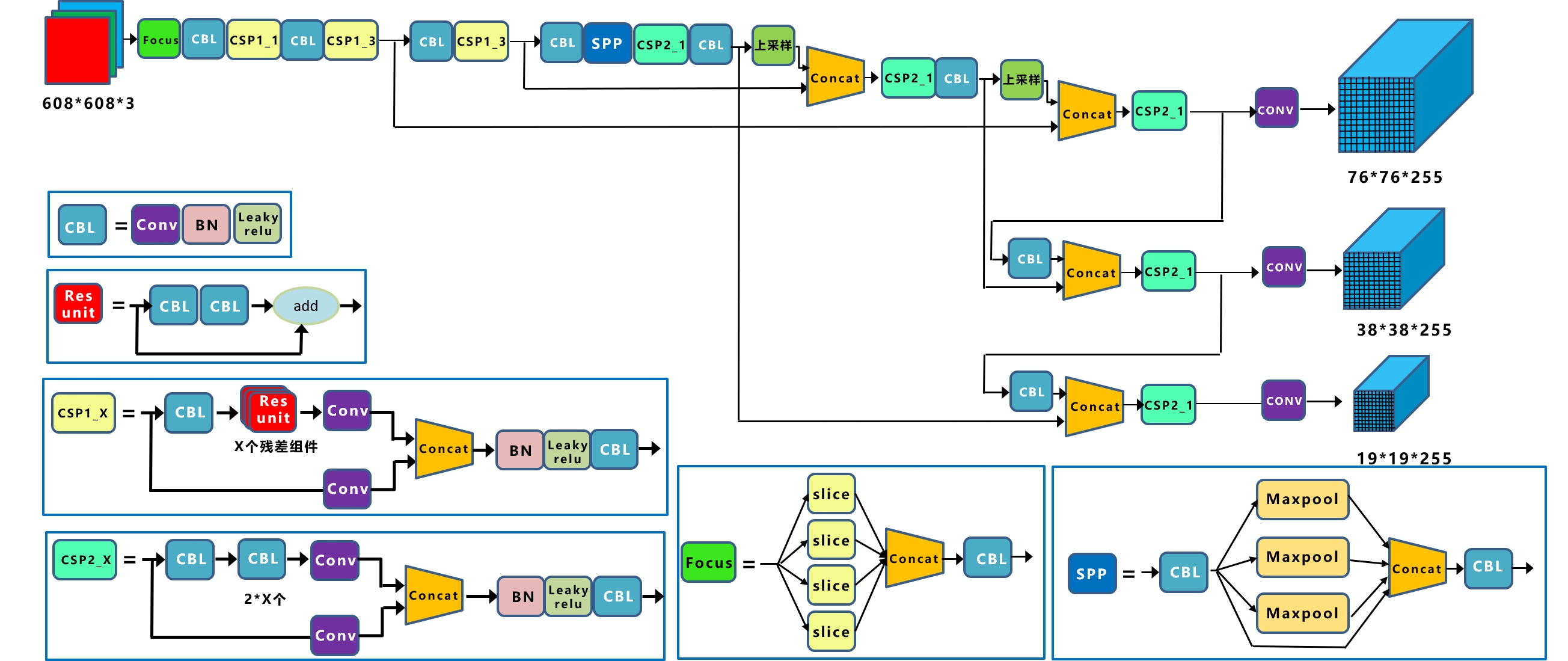

Doc/YOLOV5_01.jpg

0 → 100644

571 KB

File moved

File moved

Src/Sample.cpp

deleted

100644 → 0

Src/Sample.h

deleted

100644 → 0