v1.0

Arial.ttf

0 → 100644

File added

LICENSE

0 → 100644

README.md

0 → 100644

README_origin.md

0 → 100644

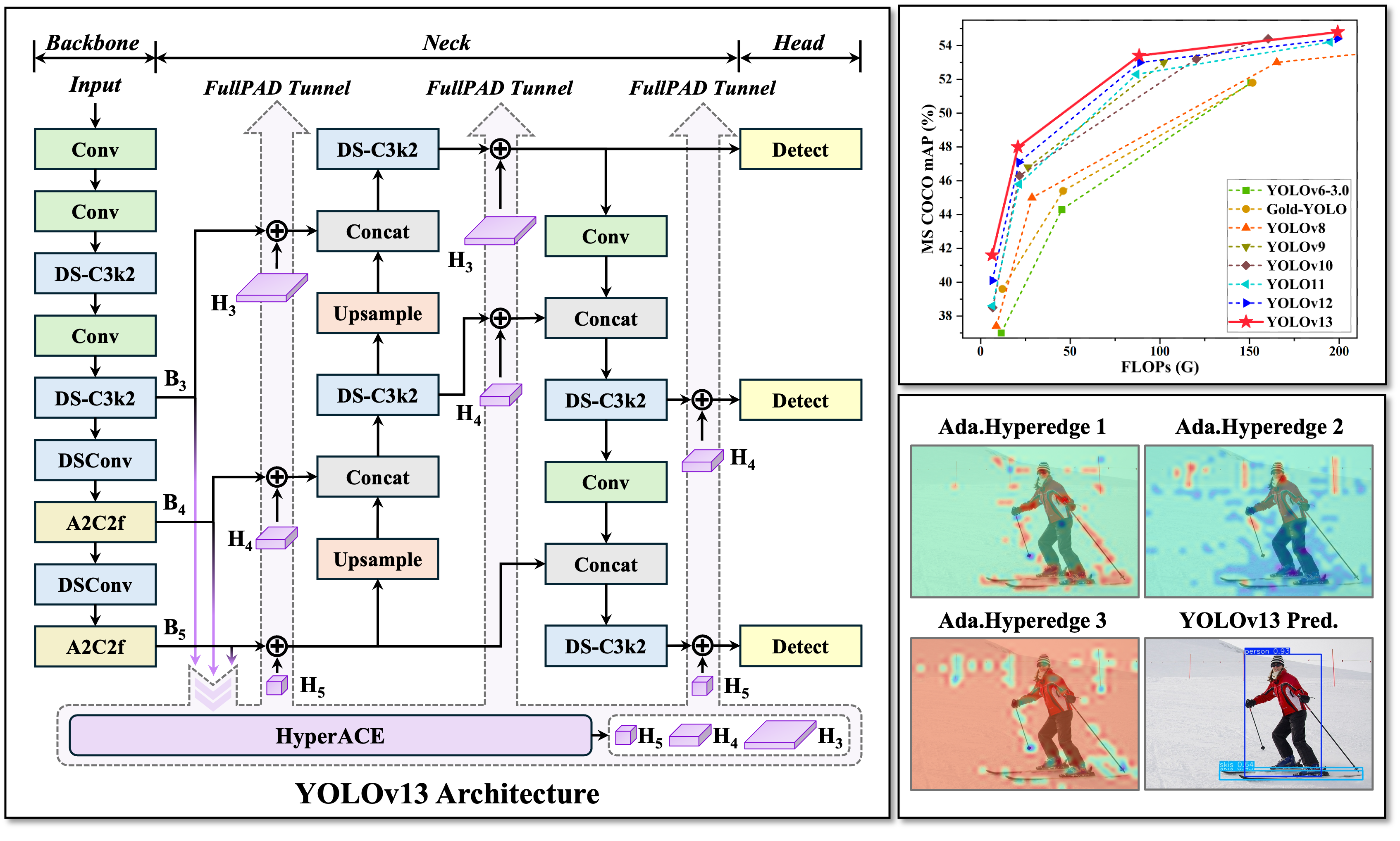

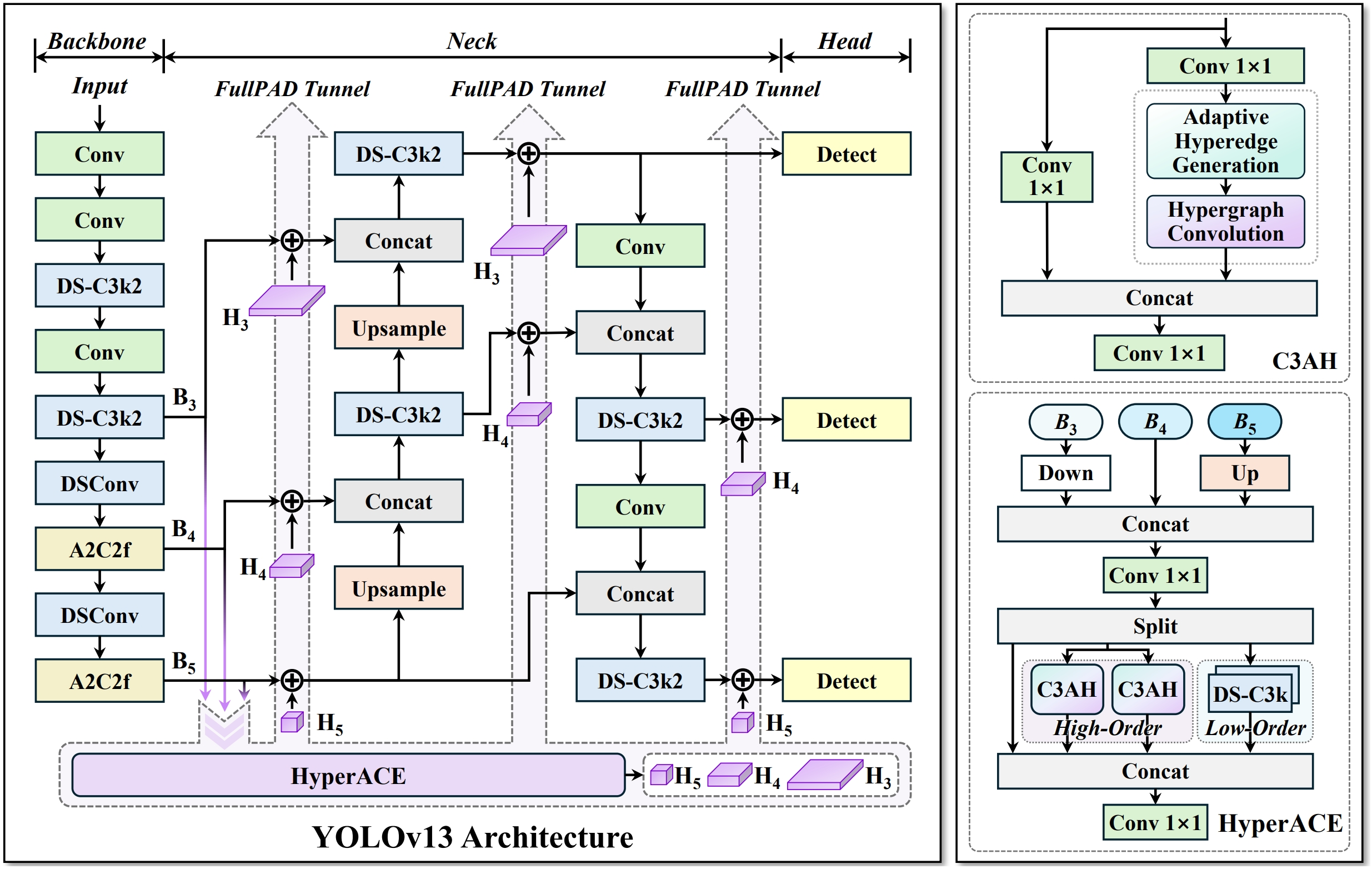

assets/framework.png

0 → 100644

2.01 MB

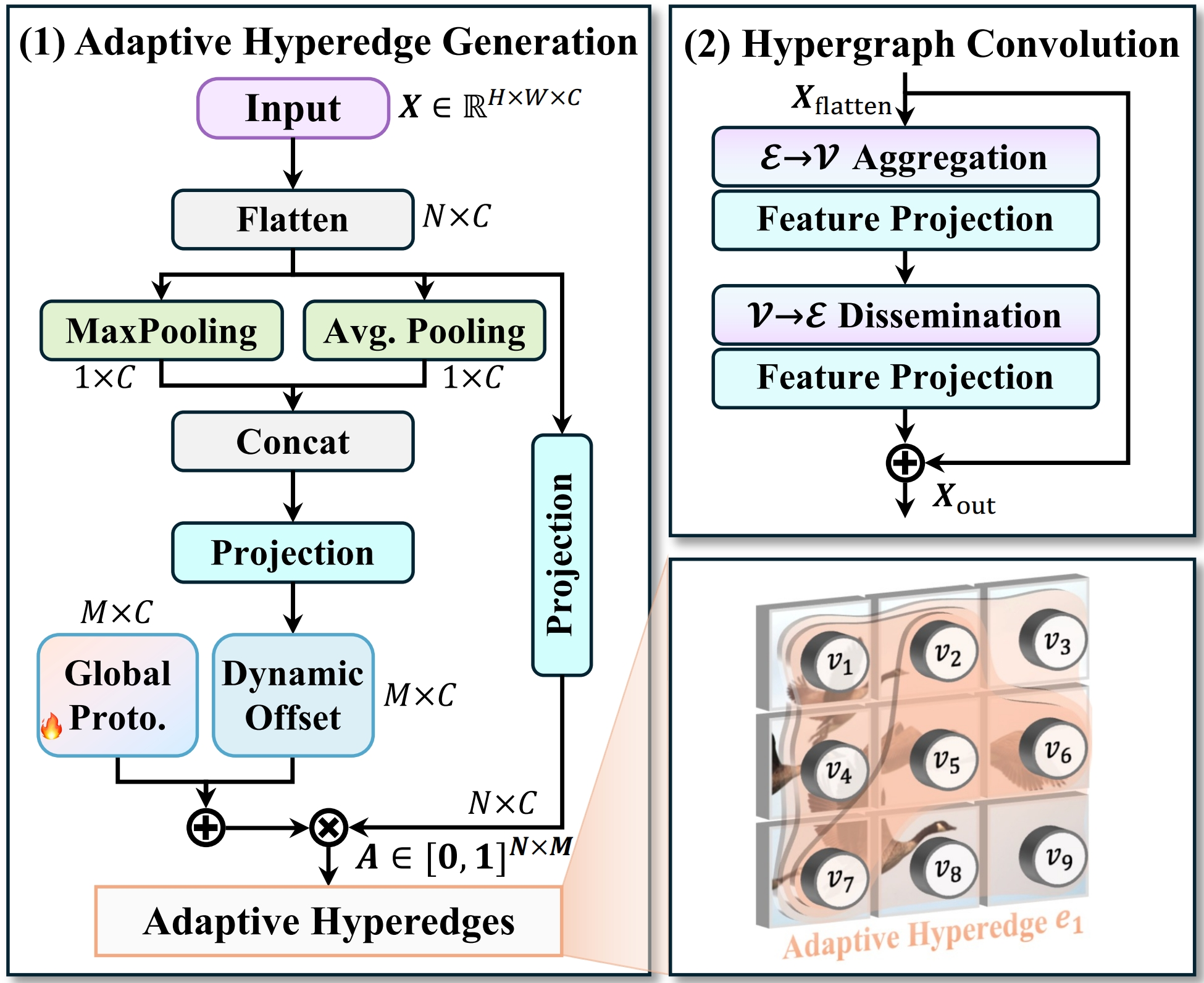

assets/hyperedge.png

0 → 100644

6.4 MB

assets/icon.png

0 → 100644

694 KB

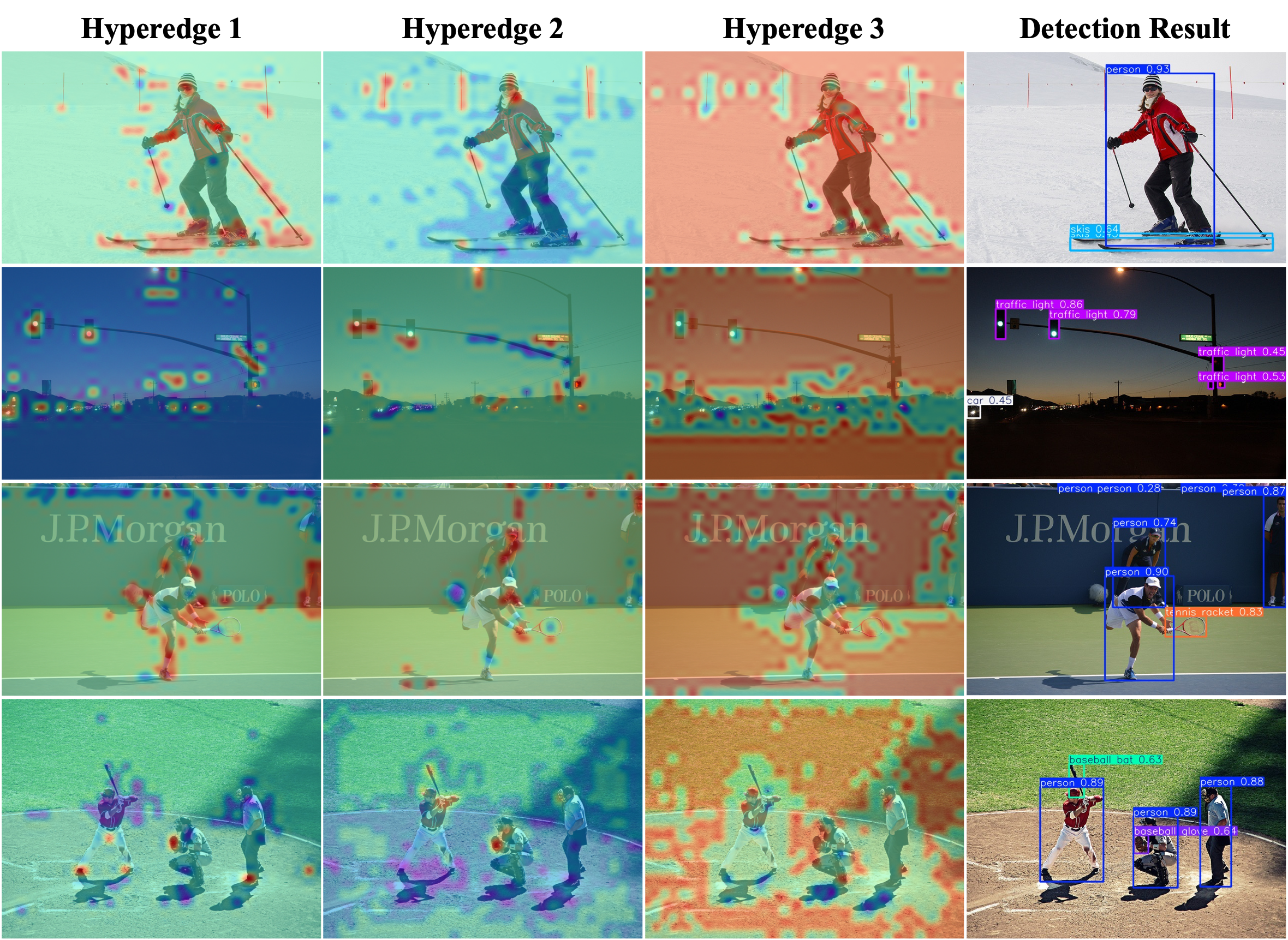

assets/vis.png

0 → 100644

This image diff could not be displayed because it is too large. You can view the blob instead.

doc/HyperACE.png

0 → 100644

944 KB

doc/YOLOv13.png

0 → 100644

914 KB

docker/Dockerfile

0 → 100644

docker/requirements.txt

0 → 100644

docker_nv/Dockerfile

0 → 100644

docker_nv/Dockerfile-arm64

0 → 100644

docker_nv/Dockerfile-conda

0 → 100644

docker_nv/Dockerfile-cpu

0 → 100644

docker_nv/Dockerfile-jupyter

0 → 100644