v1.0


.gitignore (0 → 100644)

.pre-commit-config.yaml (0 → 100644)

CONTRIBUTING.md (0 → 100644)

LICENSE (0 → 100644)


README.md (0 → 100644)

README_origin.md (0 → 100644)

app.py (0 → 100644)

coco128.zip (0 → 100644)


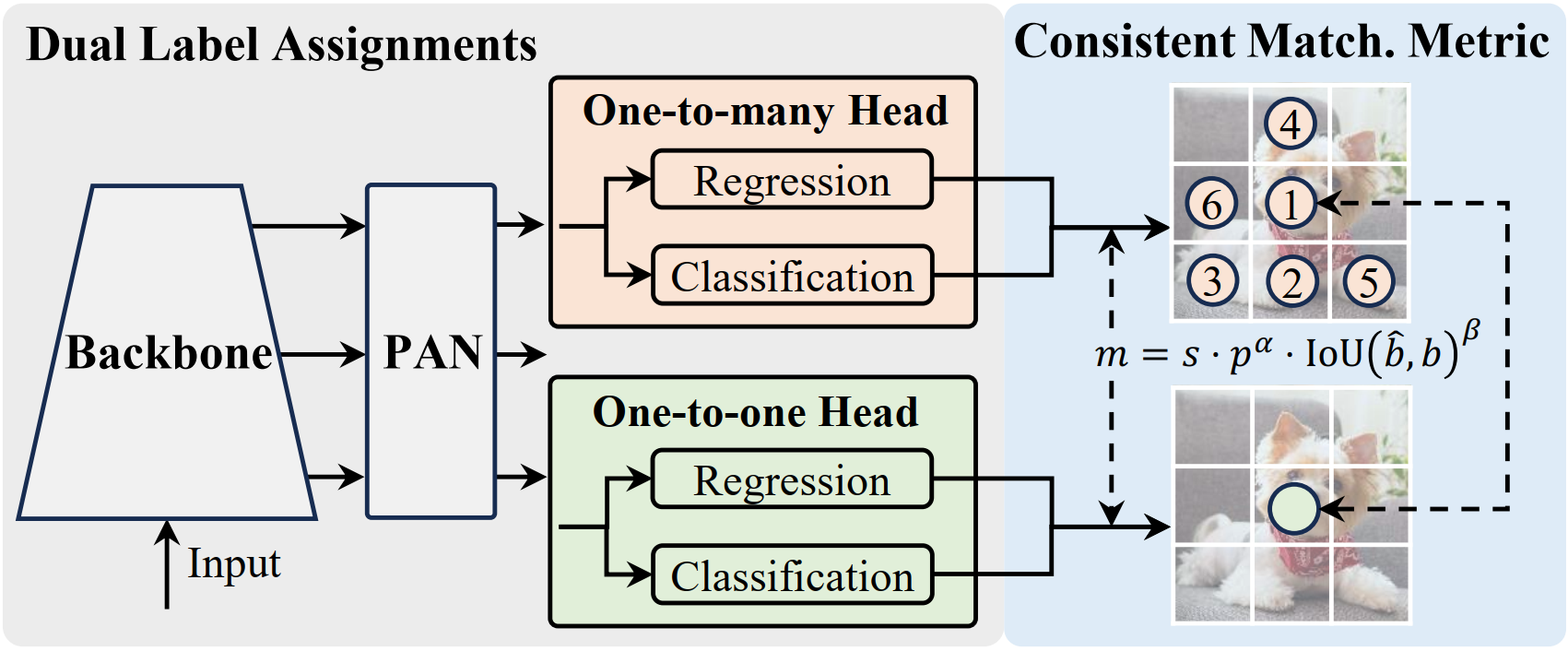

doc/algorithm.png (0 → 100644, 269 KB)

doc/bus.png (0 → 100644, 1.36 MB)

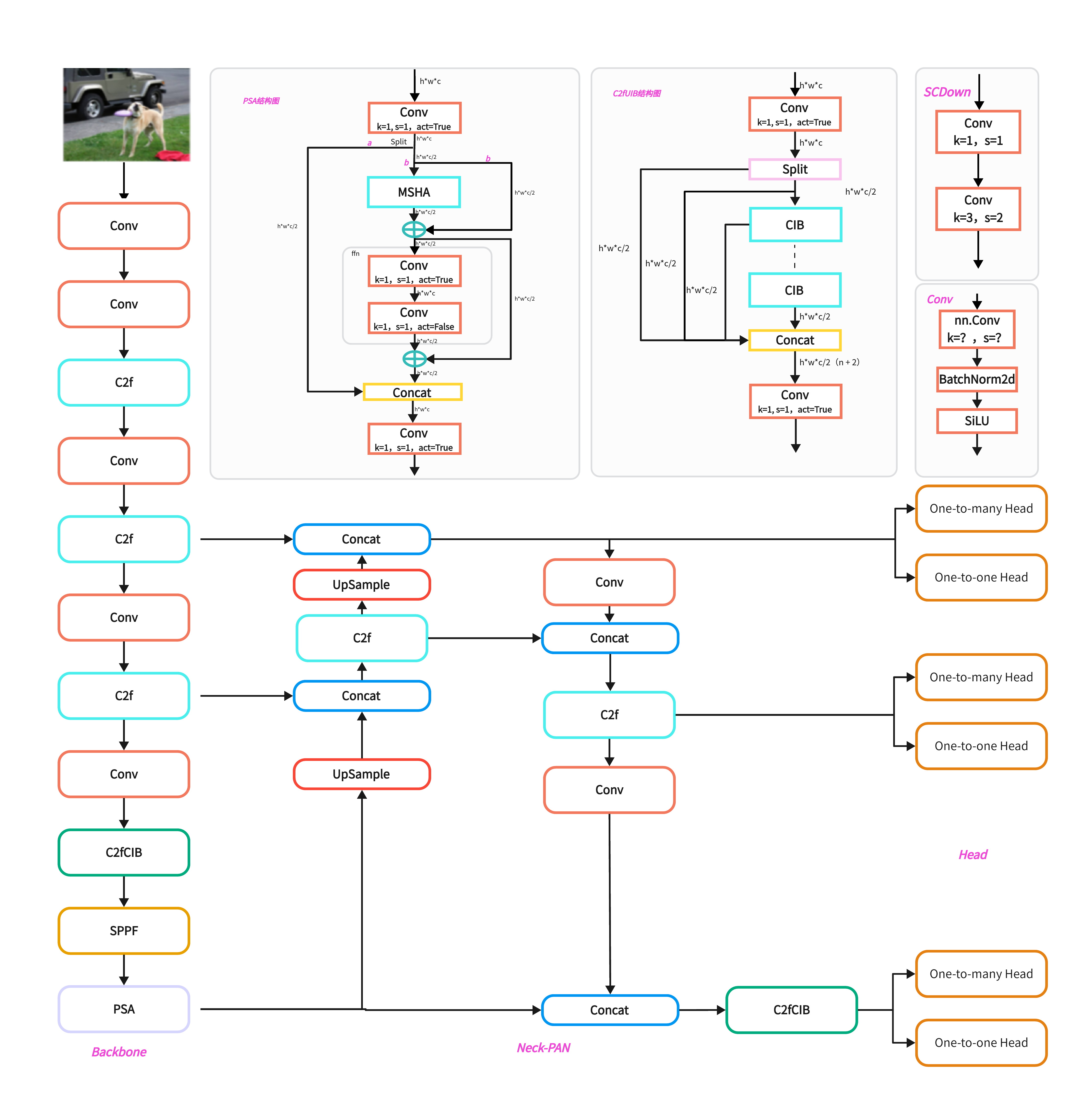

doc/structure.png (0 → 100644, 656 KB)

docker/Dockerfile (0 → 100644)

docker/requirements.txt (0 → 100644)

docker_nv/Dockerfile (0 → 100644)

docker_nv/Dockerfile-arm64 (0 → 100644)

docker_nv/Dockerfile-conda (0 → 100644)

docker_nv/Dockerfile-cpu (0 → 100644)

docker_nv/Dockerfile-jetson (0 → 100644)

docker_nv/Dockerfile-python (0 → 100644)

docker_nv/Dockerfile-runner (0 → 100644)