"test/spectests.cpp" did not exist on "90be7e75c2d2704503213eacf9ef5760cbeb2ae0"

Initial commit

.dockerignore

0 → 100644

.gitattributes

0 → 100644

.gitignore

0 → 100644

.gitmodules

0 → 100644

Dockerfile

0 → 100644

LICENSE

0 → 100644

README.md

0 → 100644

466 KB

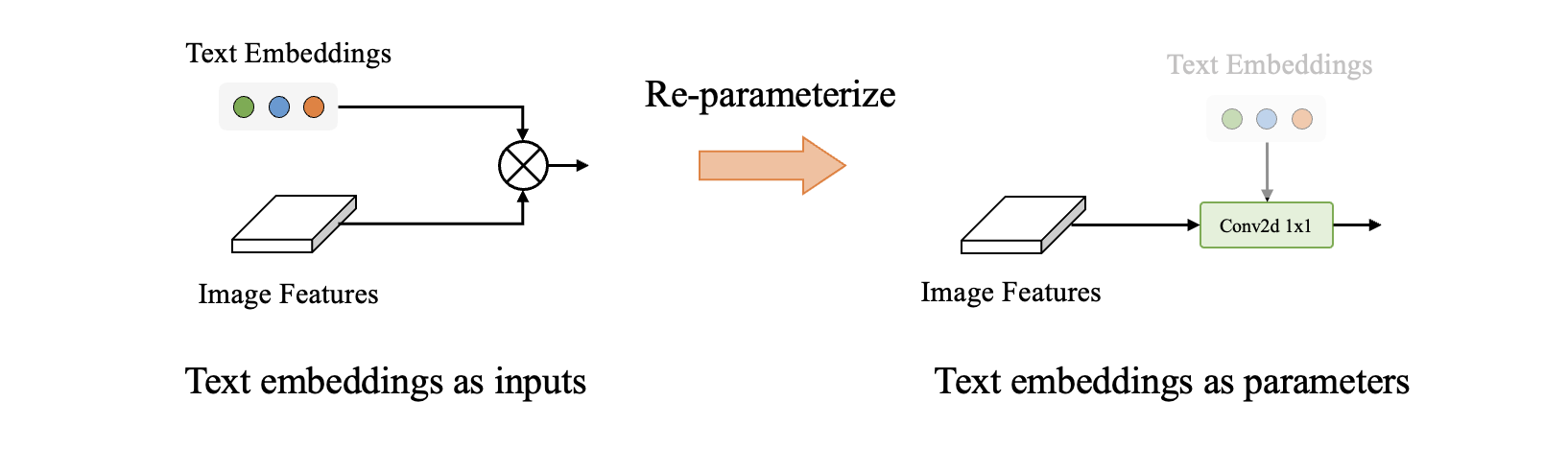

assets/reparameterize.png

0 → 100644

62.8 KB

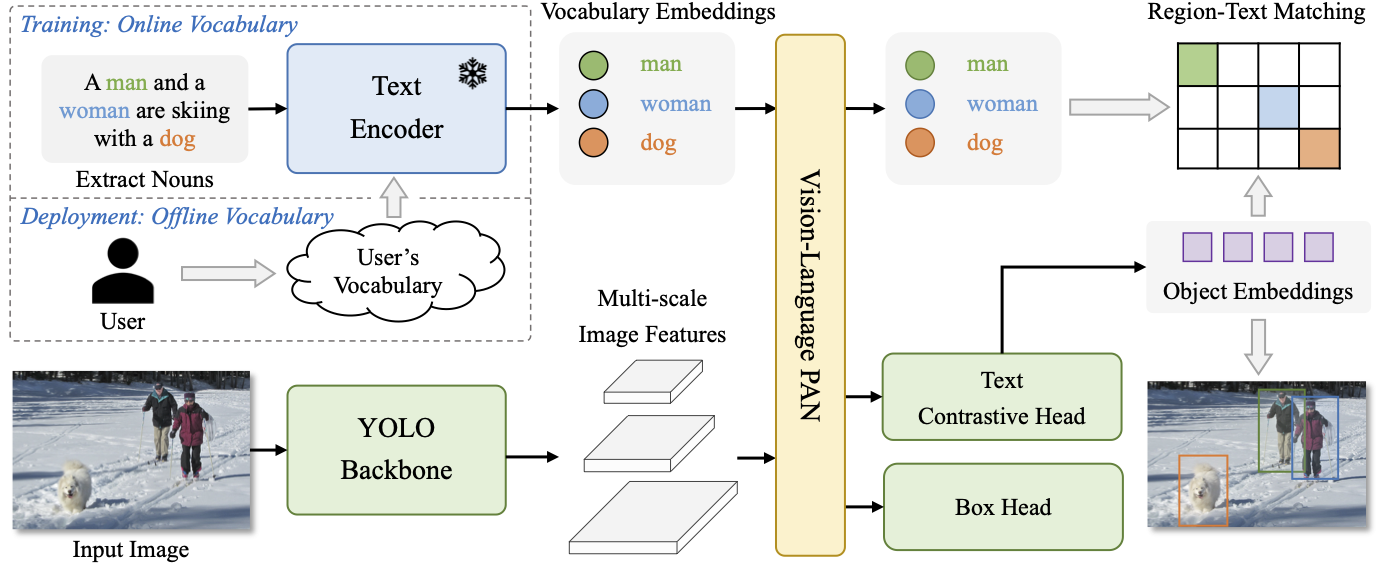

assets/yolo_arch.png

0 → 100644

298 KB

assets/yolo_logo.png

0 → 100644

99.9 KB