v1

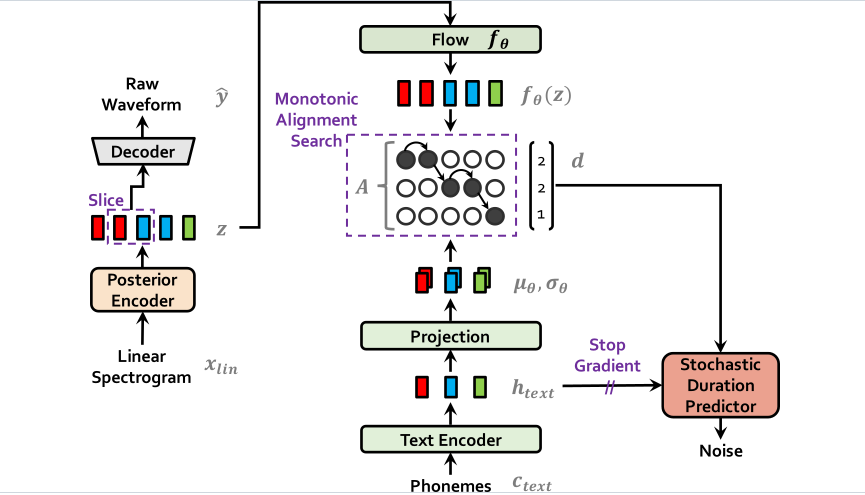

image-20240829142644186.png (new file, mode 100644, 70.5 KB)

inference.ipynb (new file, mode 100644)

kme.log (new file, mode 100644)

losses.py (new file, mode 100644)

mel_processing.py (new file, mode 100644)

models.py (new file, mode 100644)

modules.py (new file, mode 100644)

monotonic_align/__init__.py (new file, mode 100644)

File added

File added

File added

monotonic_align/core.c (new file, mode 100644)