update

309 KB

asset/test_imgs/0000000.png

0 → 100644

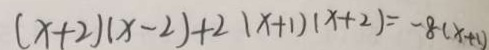

34.1 KB

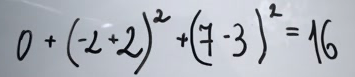

asset/test_imgs/0000001.png

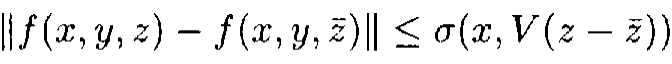

0 → 100644

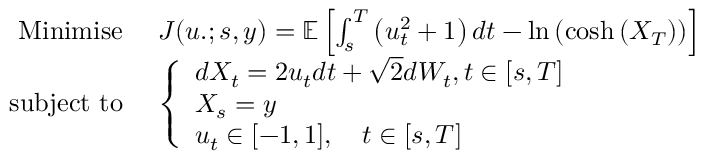

7.11 KB

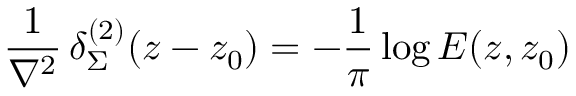

asset/test_imgs/0000002.png

0 → 100644

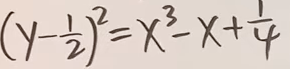

37.5 KB

asset/test_imgs/0000003.png

0 → 100644

1.55 KB

asset/test_imgs/0000004.png

0 → 100644

23.1 KB

asset/test_imgs/0000005.png

0 → 100644

3.14 KB

asset/test_imgs/0000006.png

0 → 100644

3.2 KB

asset/test_imgs/0000007.png

0 → 100644

21.3 KB

asset/test_imgs/0000008.png

0 → 100644

4.87 KB

asset/test_imgs/0000009.png

0 → 100644

37.3 KB

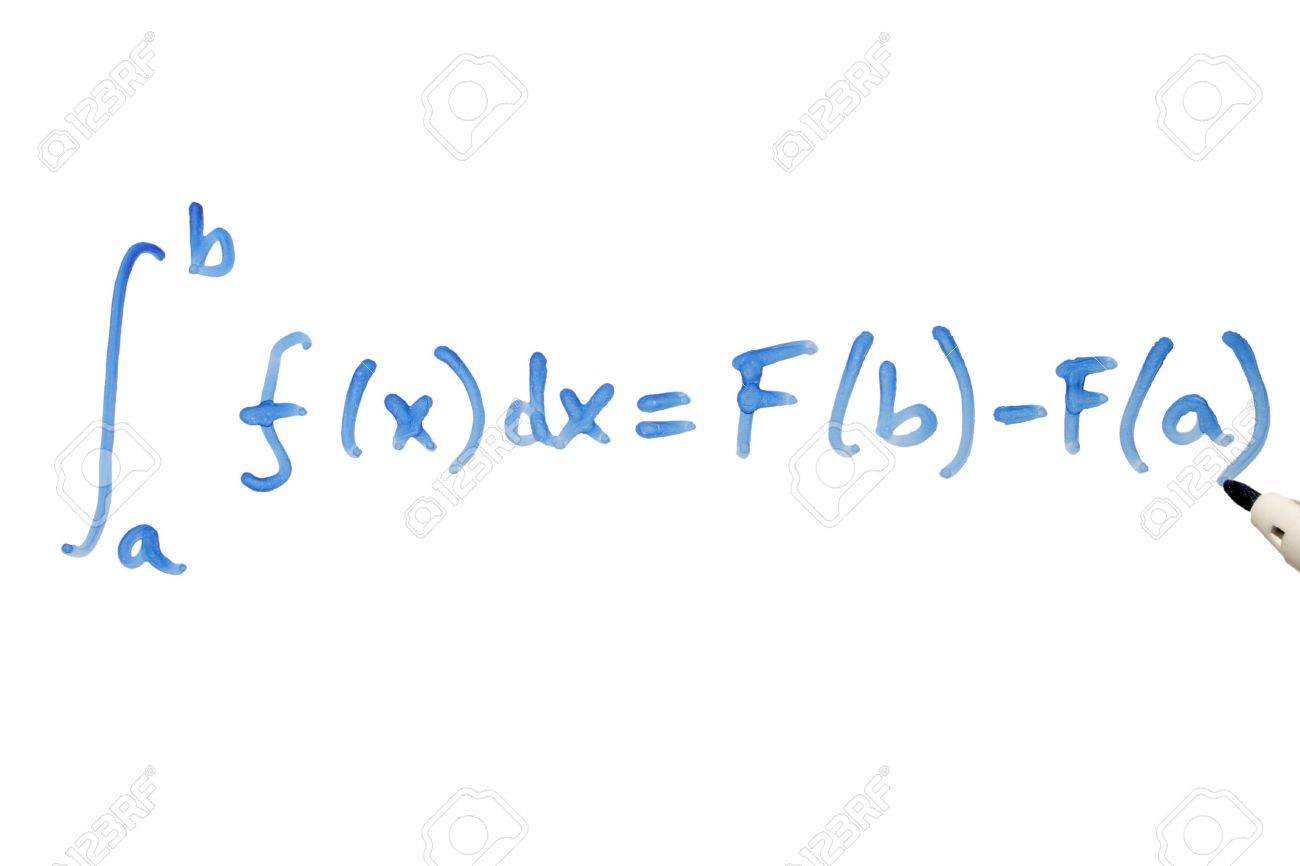

asset/test_imgs/0000010.png

0 → 100644

167 KB

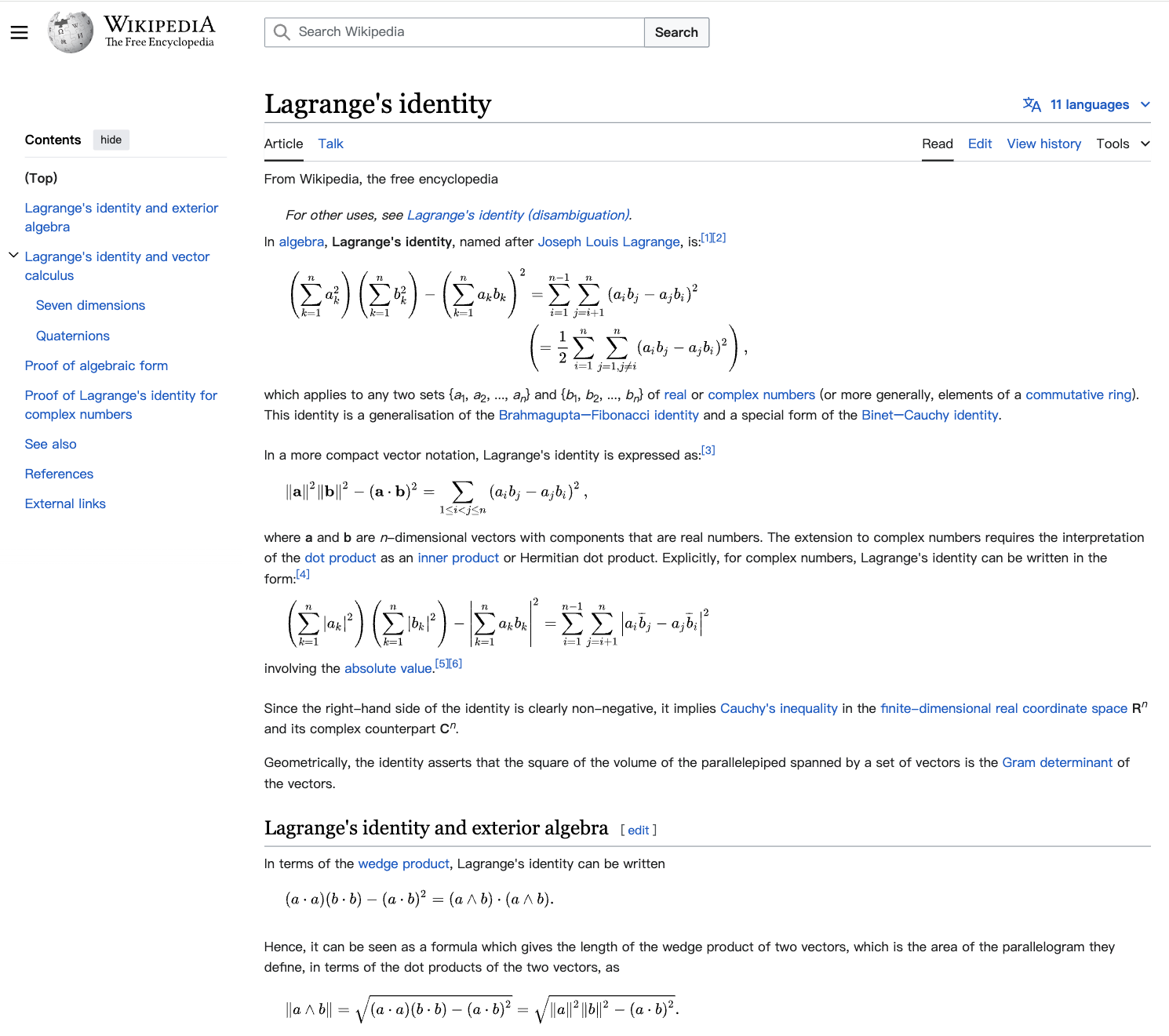

asset/test_imgs/0000011.png

0 → 100644

4.95 KB

asset/test_imgs/0000012.png

0 → 100644

7.49 KB

asset/test_imgs/0000013.png

0 → 100644

1.83 KB

asset/test_imgs/0000014.png

0 → 100644

959 Bytes

cdm/DockerFile

0 → 100644

cdm/README-CN.md

0 → 100644

cdm/README.md

0 → 100644

cdm/app.py

0 → 100644