"tests/python/common/test_batch-heterograph.py" did not exist on "09642d7c21c5930ae678d8b7c348be2302225a2a"

init

parents

Showing

LICENSE

0 → 100644

README.md

0 → 100644

README_origin.md

0 → 100644

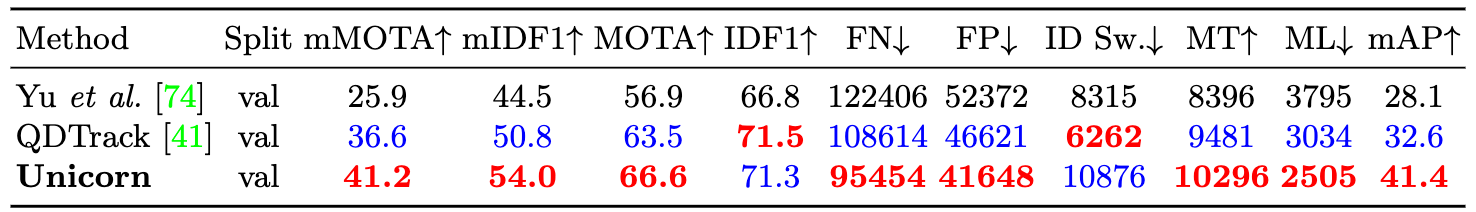

assets/MOT-BDD.png

0 → 100644

63.5 KB

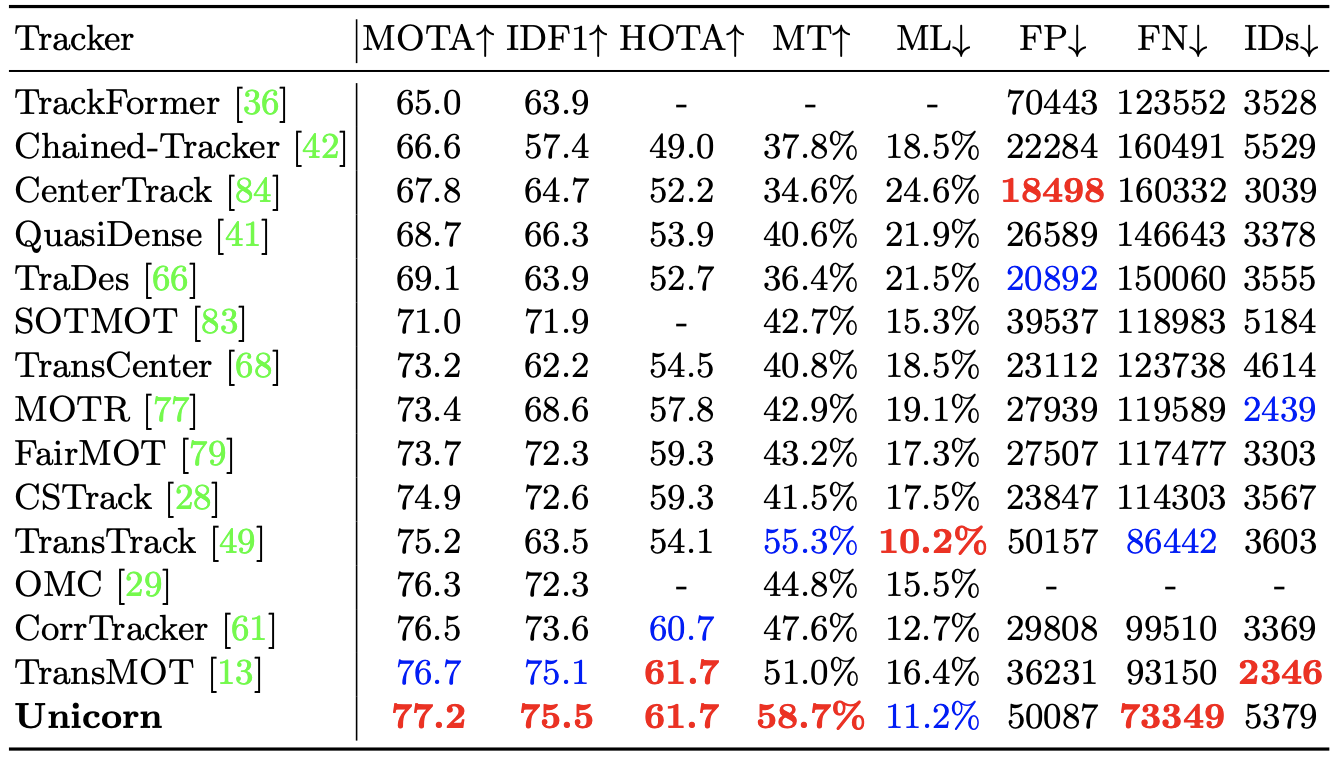

assets/MOT.png

0 → 100644

236 KB

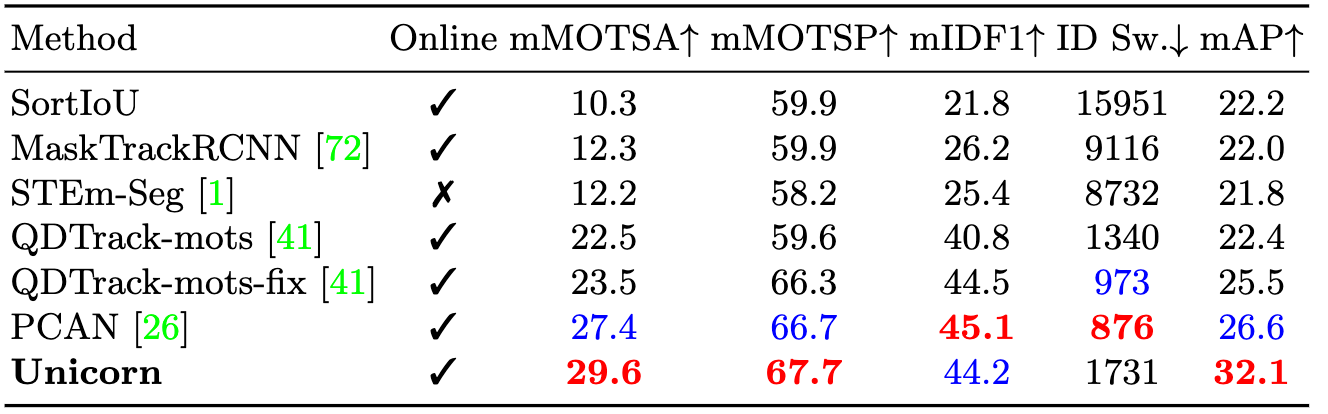

assets/MOTS-BDD.png

0 → 100644

101 KB

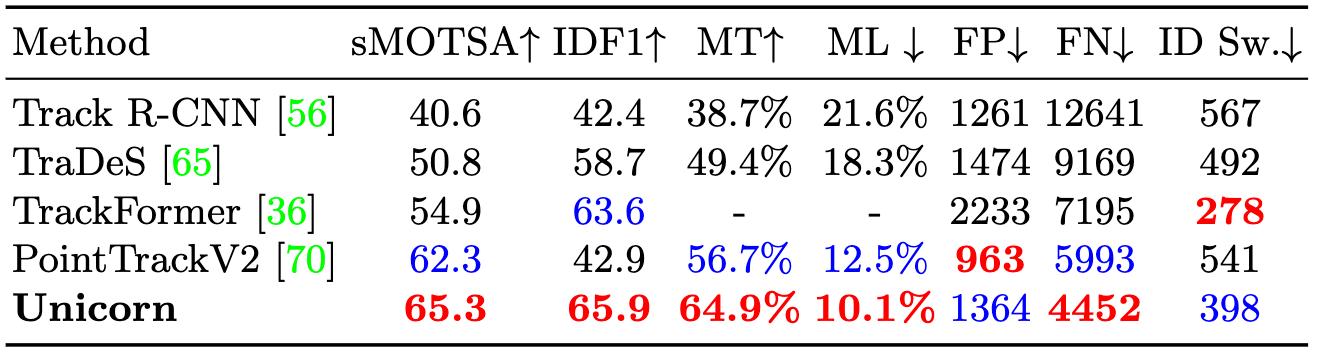

assets/MOTS.png

0 → 100644

101 KB

assets/SOT.png

0 → 100644

294 KB

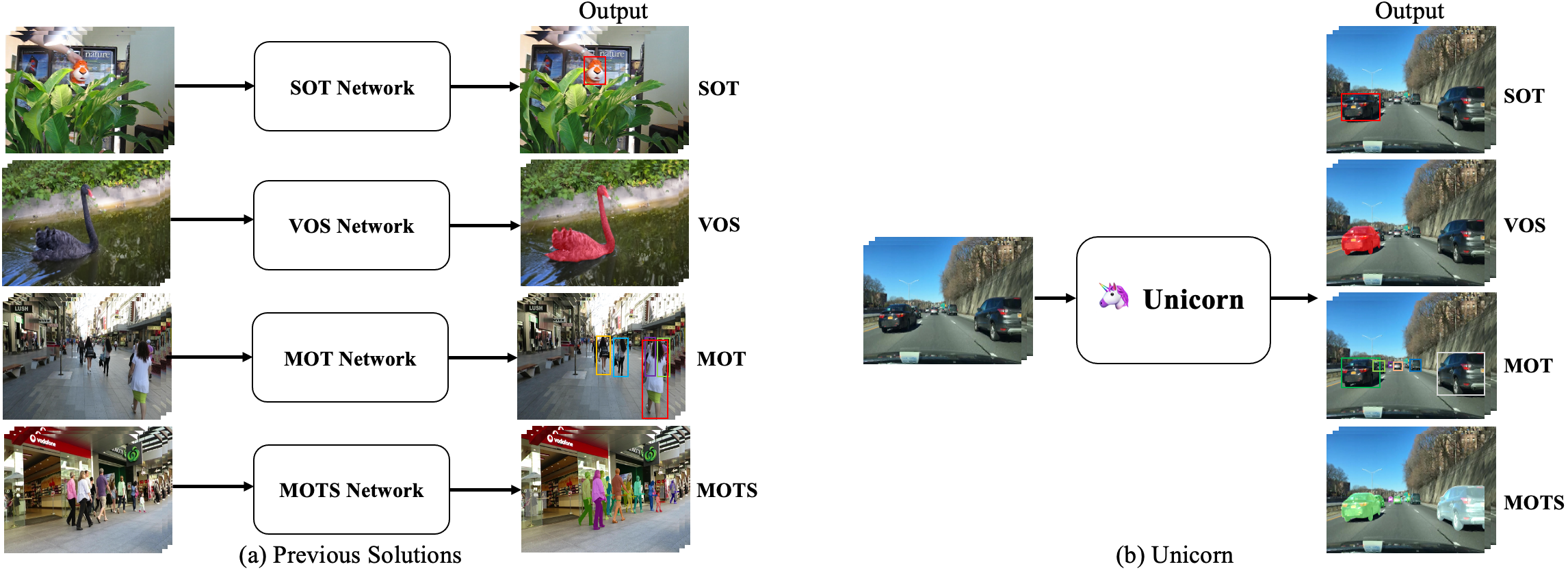

assets/Unicorn.png

0 → 100644

1.47 MB

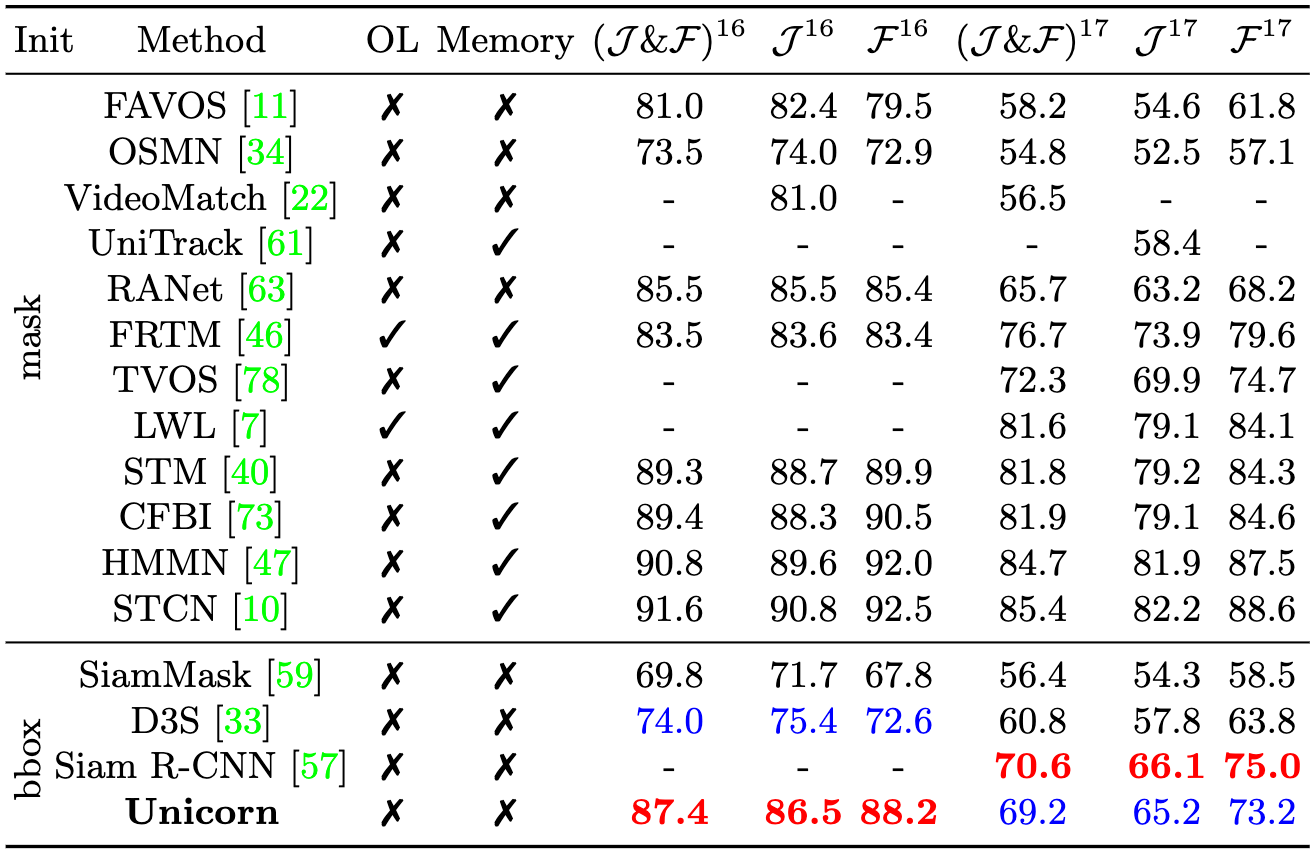

assets/VOS.png

0 → 100644

209 KB

assets/data.md

0 → 100644

assets/install.md

0 → 100644

assets/model_zoo.md

0 → 100644

assets/test.md

0 → 100644

assets/train.md

0 → 100644

assets/video_demo.gif

0 → 100644

This image diff could not be displayed because it is too large. You can view the blob instead.

datasets/GOT10K

0 → 120000

This diff is collapsed.

datasets/data_path/eth.train

0 → 100644

This diff is collapsed.

doc/Unicorn_components.png

0 → 100644

164 KB