Initial commit

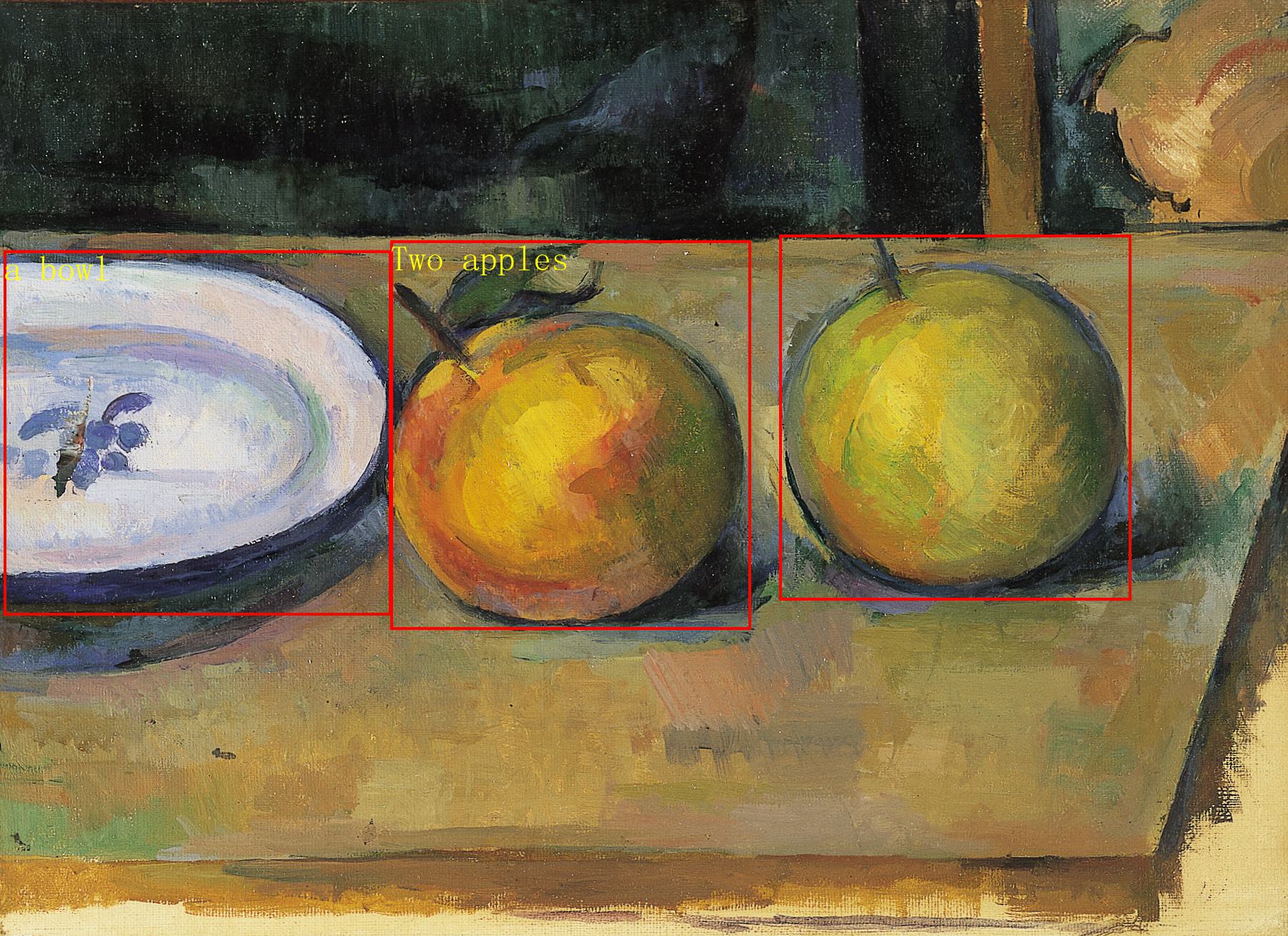

assets/apple_r.jpeg

0 → 100644

465 KB

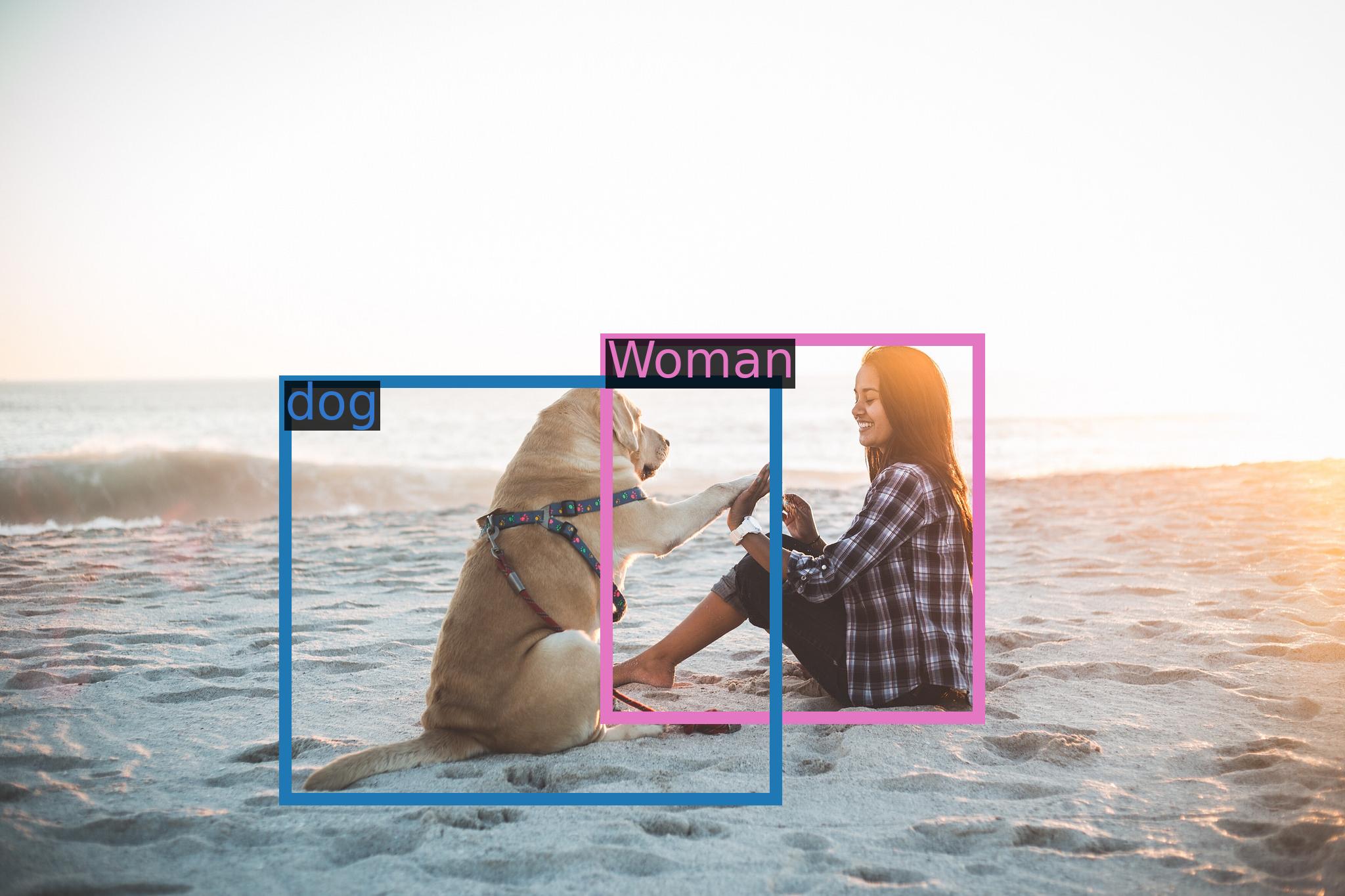

assets/demo_highfive.jpg

0 → 100644

233 KB

242 KB

assets/demo_vl.gif

0 → 100644

2.07 MB

assets/logo.jpg

0 → 100644

61.9 KB

8.8 MB

179 KB

1.62 MB

344 KB

399 KB

53.6 KB

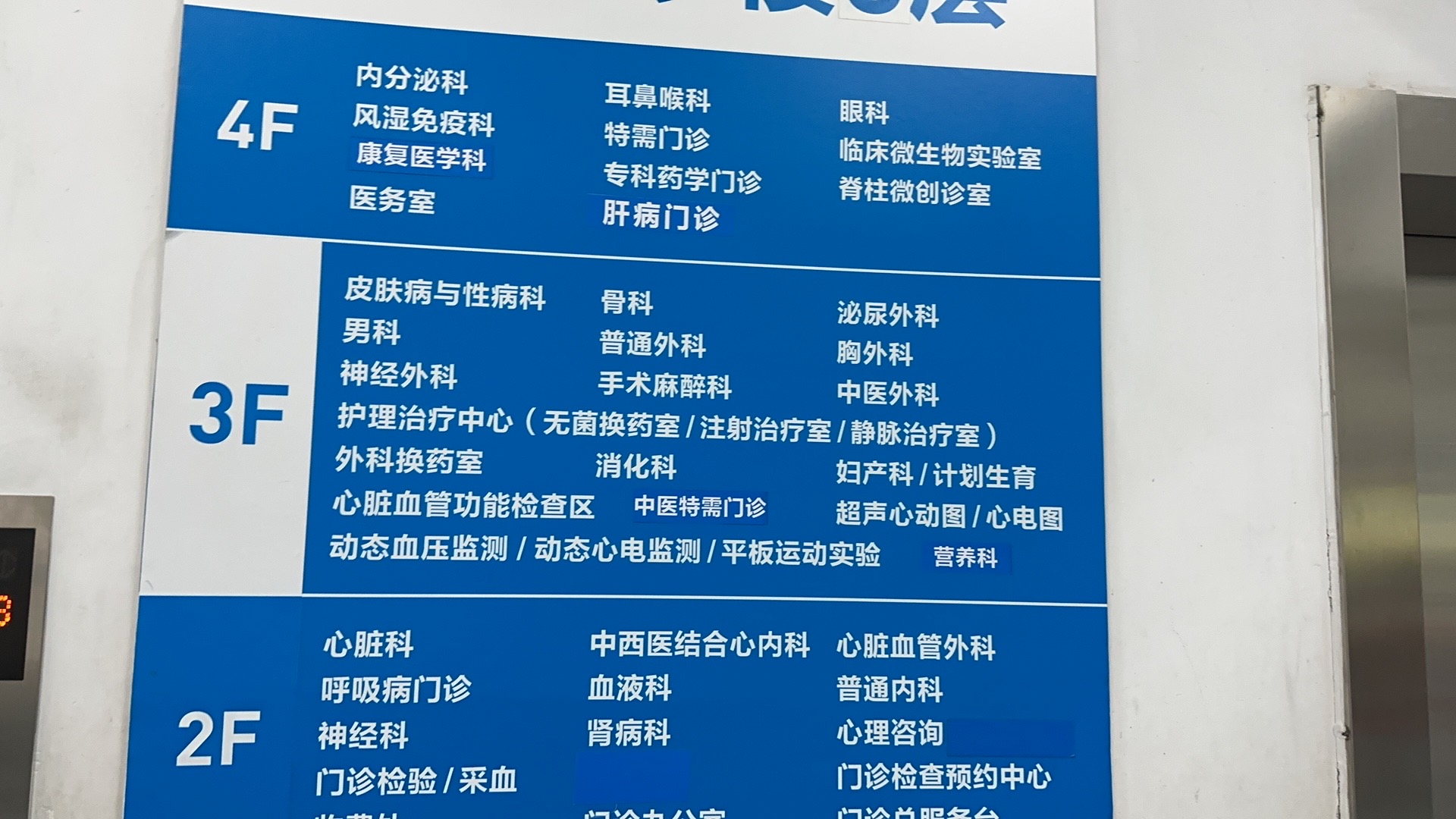

assets/mm_tutorial/Menu.jpeg

0 → 100644

46.6 KB

1.26 MB

18.4 KB

777 KB

1.14 MB

24.5 KB

43.6 KB
