readme

images/Doc_Chart.png (mode 0 → 100644, 1.94 MB)
images/abstract.pdf (mode 0 → 100644, file added)

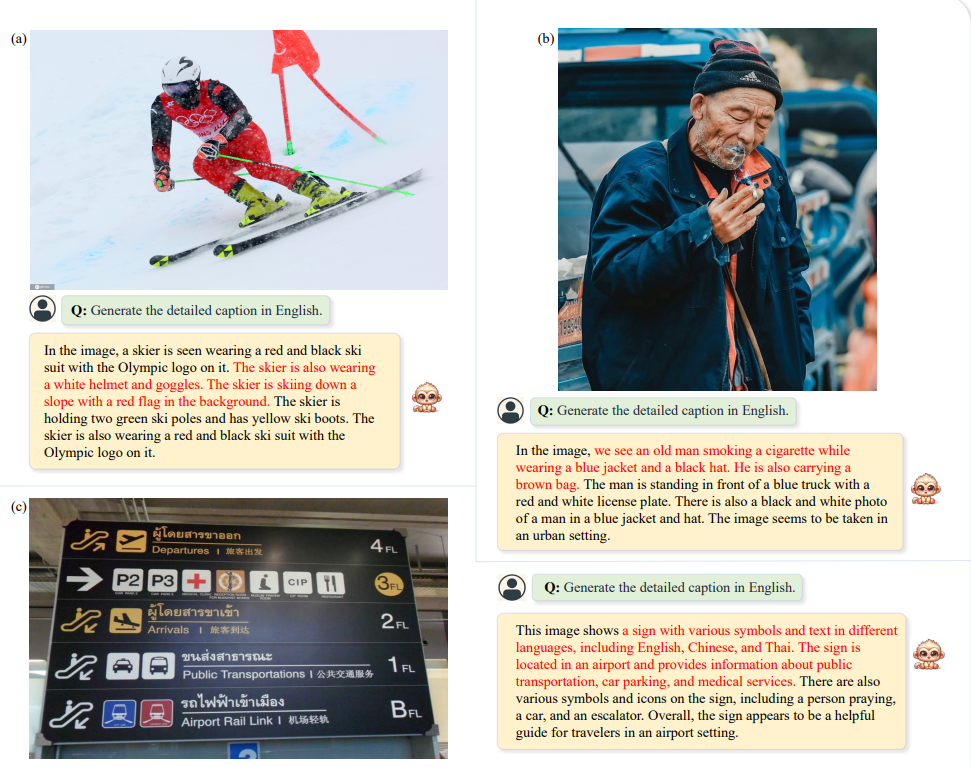

images/caption_1.png (mode 0 → 100644, 900 KB)
images/logo_hust.png (mode 0 → 100644, 140 KB)
images/logo_monkey.png (mode 0 → 100644, 384 KB)
images/logo_vlr.png (mode 0 → 100644, 13.8 KB)

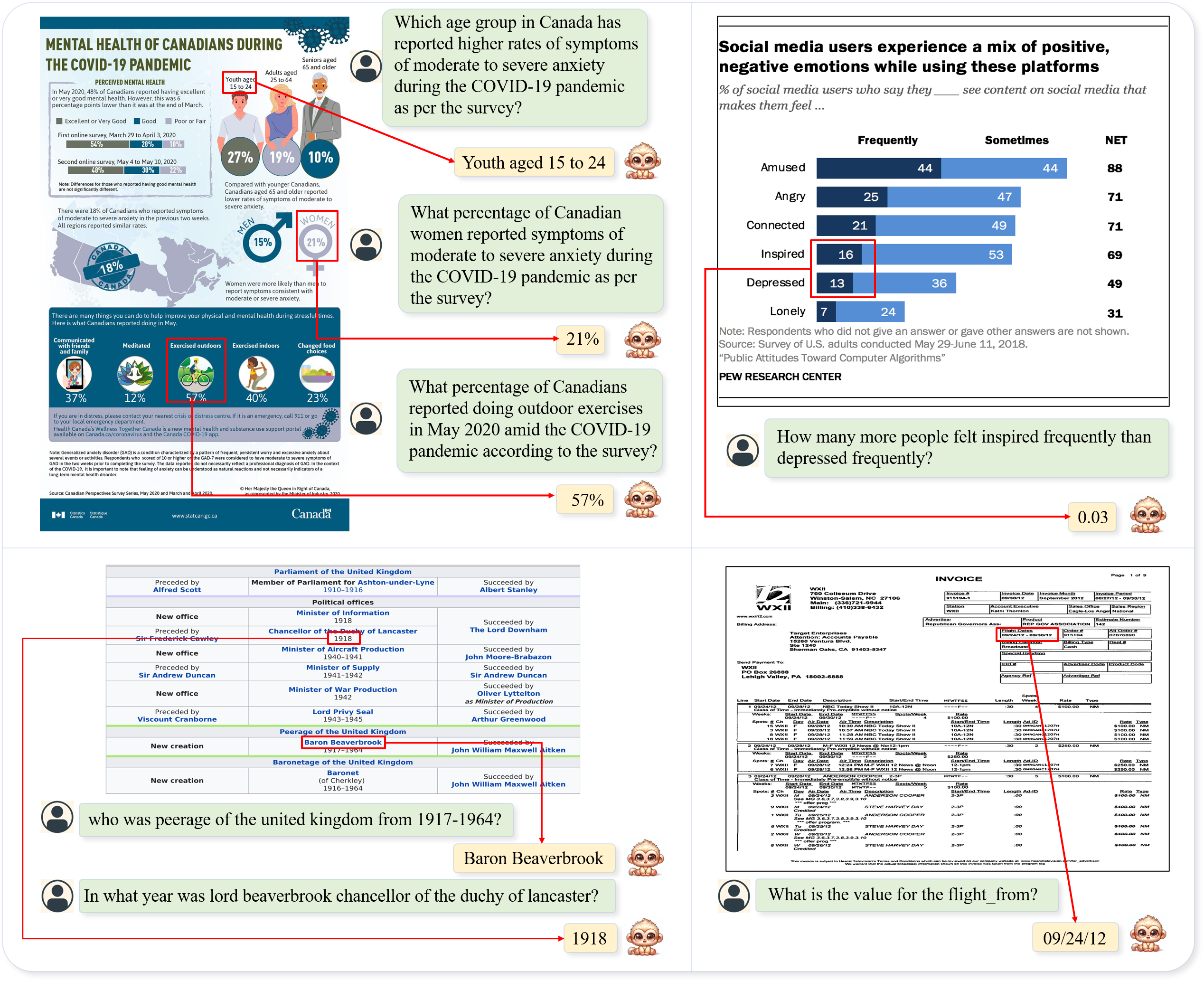

images/qa_1.png (mode 0 → 100644, 742 KB)

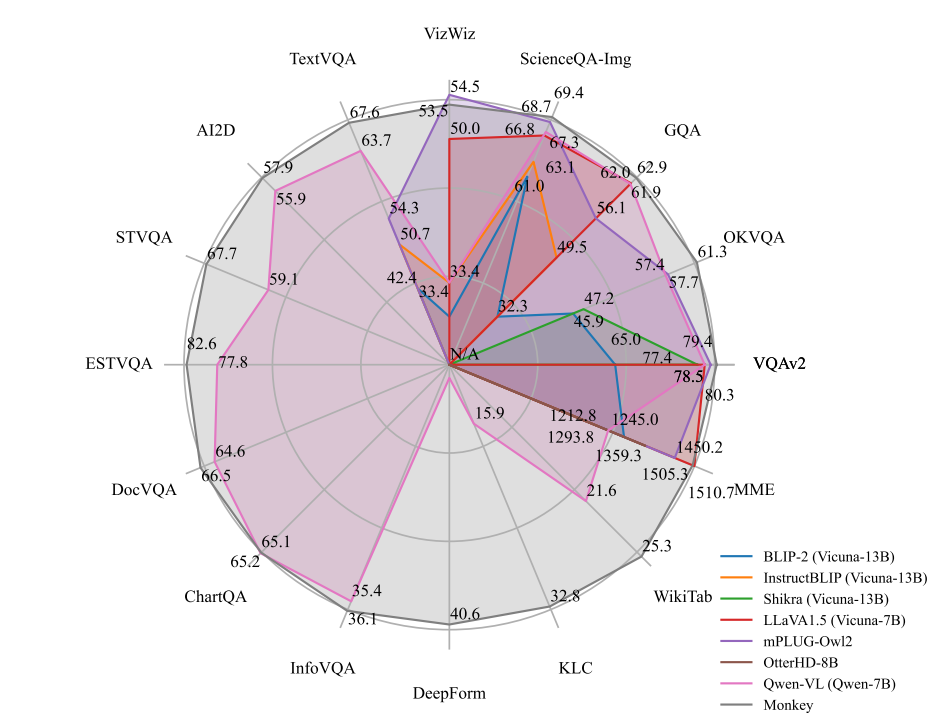

images/radar.png (mode 0 → 100644, 159 KB)