ModelZoo / Swin-Transformer_pytorch

Commit cd9e6abc, authored Apr 02, 2023 by unknown

Update README

parent 6a10c7bf

Showing 2 changed files with 2 additions and 1 deletion

README.md: +2 -1

docs/cc163380115640d4a5d88ffb246bde44.png: +0 -0

README.md


@@ -5,7 +5,8 @@ Swin Transformer can serve as a general-purpose backbone for computer vision. Adapting the Transformer from

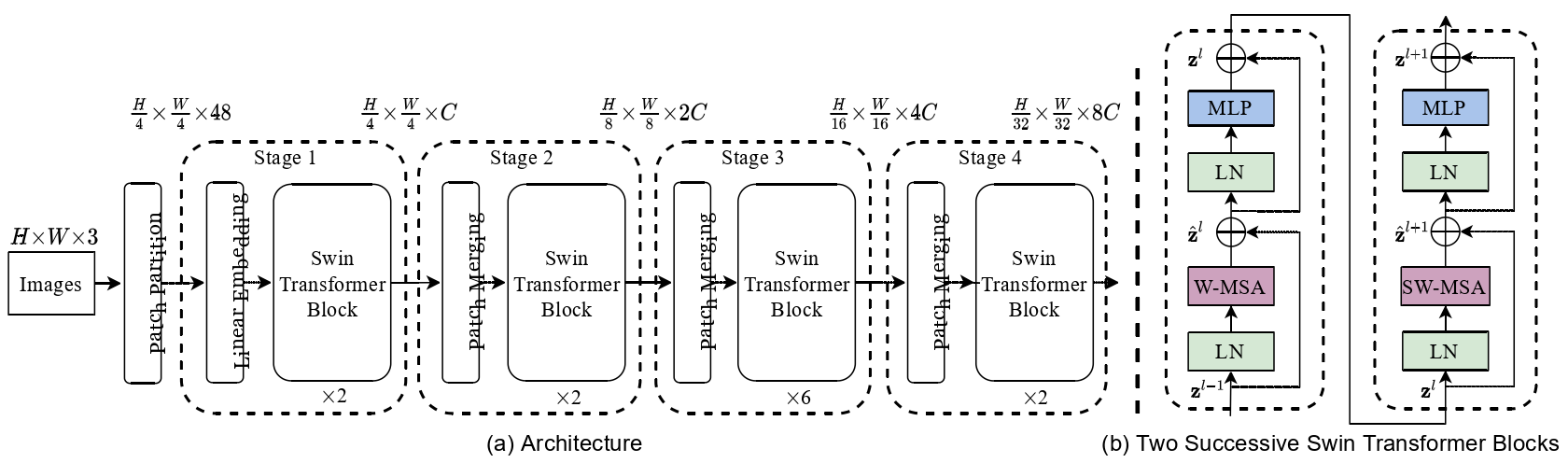

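## Model Architecture

An overview of the Swin Transformer architecture is shown in the figure below, which illustrates the tiny version (Swin-T). It first splits the input RGB image into non-overlapping patches with a patch partition module, as in ViT. Each patch is treated as a "token" (analogous to a word token in NLP), and its feature is set to the concatenation of the raw pixel RGB values. In our implementation we use a patch size of 4 × 4, so the feature dimension of each patch is 4 × 4 × 3 = 48. A linear embedding layer is applied to this raw-valued feature to project it to an arbitrary dimension (denoted C).

A Swin Transformer block replaces the standard multi-head self-attention (MSA) module of a Transformer block with a module based on shifted windows, leaving the other layers unchanged. As shown in (b), a Swin Transformer block consists of a shifted-window-based MSA module followed by a 2-layer MLP with a GELU nonlinearity between its two layers. A LayerNorm (LN) layer is applied before each MSA module and each MLP, and a residual connection is applied after each module.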
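The patch arithmetic above (4 × 4 × 3 = 48, then a projection to C) can be checked with a small framework-free sketch. It is only an illustration, not this repository's code: the helper names `patch_partition` and `linear_embed`, the toy 8 × 8 image, and the choice C = 6 with fixed weights are all assumptions made for the example.

```python
def patch_partition(image, patch_size=4):
    """Split an H x W x 3 image (nested lists) into non-overlapping patches.

    Returns tokens in row-major patch order; each token is the concatenation
    of the raw pixel values inside one patch.
    """
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image height and width must be multiples of patch_size")
    tokens = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            token = []
            for row in range(top, top + patch_size):
                for col in range(left, left + patch_size):
                    token.extend(image[row][col])  # append this pixel's R, G, B
            tokens.append(token)
    return tokens


def linear_embed(tokens, weight, bias):
    """Project each token; weight has shape (C, in_dim), bias has length C."""
    return [
        [sum(w * v for w, v in zip(w_row, token)) + b
         for w_row, b in zip(weight, bias)]
        for token in tokens
    ]


# Toy 8 x 8 RGB image, so there are (8 / 4) * (8 / 4) = 4 patches.
image = [[[row, col, row + col] for col in range(8)] for row in range(8)]
tokens = patch_partition(image)

# Hypothetical embedding dimension C = 6 with arbitrary fixed weights.
C = 6
weight = [[0.01 * (i + 1)] * 48 for i in range(C)]
bias = [0.0] * C
embedded = linear_embed(tokens, weight, bias)

print(len(tokens), len(tokens[0]))      # 4 48
print(len(embedded), len(embedded[0]))  # 4 6
```

Each token has 48 raw values before the embedding and C values after it, while the number of tokens is unchanged.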

- (a) Architecture of Swin Transformer (Swin-T);
- (b) Two successive Swin Transformer blocks.
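The layer ordering inside one block, as described for (b), can be sketched in plain Python to make the wiring explicit: LN, then attention, then a residual add, followed by LN, a 2-layer MLP with GELU, and another residual add. This is a minimal sketch under stated assumptions, not the repository's implementation: the `attention` argument is a hypothetical placeholder for the shifted-window MSA module, `uniform_attention` is a stand-in used only to run the example, and LayerNorm is shown without learned scale and shift.

```python
import math


def layer_norm(vec, eps=1e-5):
    """Normalize one token to zero mean and unit variance (no learned parameters)."""
    mean = sum(vec) / len(vec)
    var = sum((v - mean) ** 2 for v in vec) / len(vec)
    return [(v - mean) / math.sqrt(var + eps) for v in vec]


def gelu(x):
    """Exact GELU: x * Phi(x), where Phi is the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def linear(vec, weight, bias):
    """weight has shape (out_dim, in_dim); bias has length out_dim."""
    return [sum(w * v for w, v in zip(row, vec)) + b
            for row, b in zip(weight, bias)]


def mlp(vec, w1, b1, w2, b2):
    """2-layer MLP with GELU between the two linear layers."""
    hidden = [gelu(h) for h in linear(vec, w1, b1)]
    return linear(hidden, w2, b2)


def swin_block(tokens, attention, mlp_params):
    """Compute x = x + attention(LN(x)), then x = x + MLP(LN(x)).

    `attention` is a hypothetical callable (list of tokens -> list of tokens);
    a real block would use window-based or shifted-window-based MSA here.
    """
    attended = attention([layer_norm(t) for t in tokens])
    tokens = [[a + b for a, b in zip(t, u)] for t, u in zip(tokens, attended)]
    out = []
    for t in tokens:
        update = mlp(layer_norm(t), *mlp_params)
        out.append([a + b for a, b in zip(t, update)])
    return out


def uniform_attention(tokens):
    """Stand-in for window attention: every token receives the mean of all tokens."""
    dim = len(tokens[0])
    mean = [sum(t[d] for t in tokens) / len(tokens) for d in range(dim)]
    return [mean[:] for _ in tokens]


# Hypothetical sizes and weights, chosen only to run the sketch.
dim, hidden = 4, 8
params = ([[0.1] * dim for _ in range(hidden)], [0.0] * hidden,
          [[0.1] * hidden for _ in range(dim)], [0.0] * dim)
x = [[1.0, 2.0, 3.0, 4.0], [4.0, 3.0, 2.0, 1.0]]
y = swin_block(x, uniform_attention, params)
print(len(y), len(y[0]))  # 2 4
```

Because both sub-layers sit on residual branches, the block preserves the token count and feature dimension, which is what lets blocks be stacked in succession.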

docs/cc163380115640d4a5d88ffb246bde44.png

New file, mode 0 → 100644, 101 KB
