Commit Swin-Transformer code

data/imagenet22k_dataset.py

0 → 100644

data/map22kto1k.txt

0 → 100644

data/samplers.py

0 → 100644

data/zipreader.py

0 → 100644

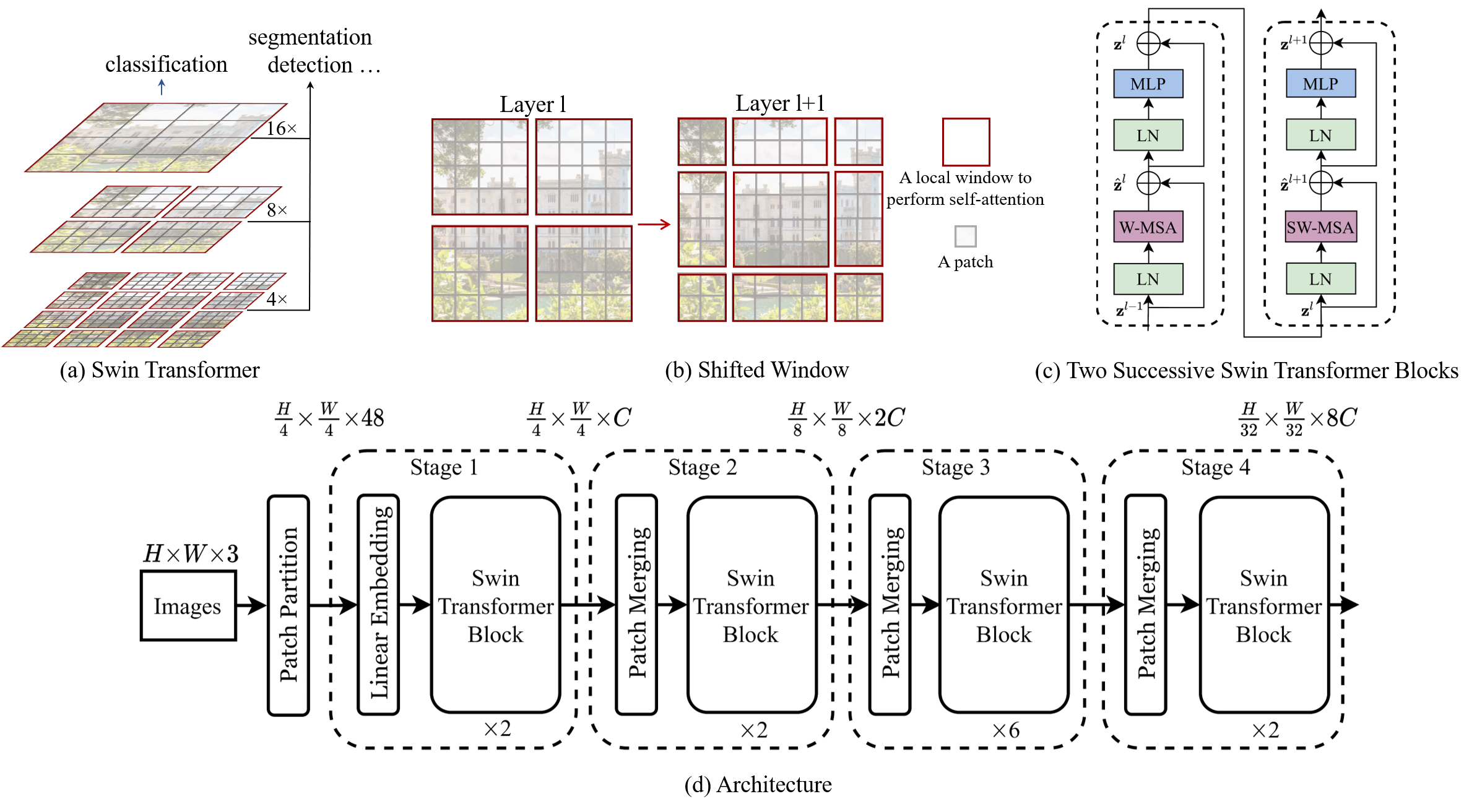

figures/teaser.png

0 → 100644

909 KB

get_started.md

0 → 100644

logger.py

0 → 100644

lr_scheduler.py

0 → 100644

main.py

0 → 100644

main_moe.py

0 → 100644

main_simmim_ft.py

0 → 100644

main_simmim_pt.py

0 → 100644

models/__init__.py

0 → 100644

models/build.py

0 → 100644

models/simmim.py

0 → 100644
