stylegan3

gen_images.py

0 → 100644

gen_video.py

0 → 100644

gui_utils/__init__.py

0 → 100644

gui_utils/gl_utils.py

0 → 100644

gui_utils/glfw_window.py

0 → 100644

gui_utils/imgui_utils.py

0 → 100644

gui_utils/imgui_window.py

0 → 100644

gui_utils/text_utils.py

0 → 100644

legacy.py

0 → 100644

metrics/__init__.py

0 → 100644

metrics/equivariance.py

0 → 100644

metrics/inception_score.py

0 → 100644

metrics/metric_main.py

0 → 100644

metrics/metric_utils.py

0 → 100644

metrics/precision_recall.py

0 → 100644

model.properties

0 → 100644

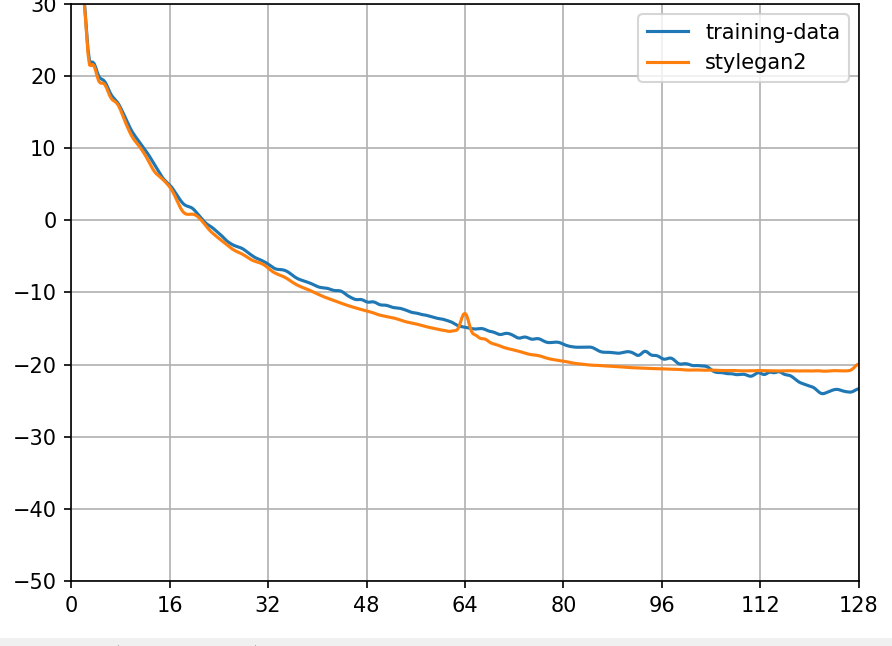

pngs/acc.png

0 → 100644

44.5 KB