Initial commit

README.md

0 → 100644

README_orgin.md

0 → 100644

assets/0000.jpg

0 → 100644

154 KB

assets/0001.png

0 → 100644

3.01 MB

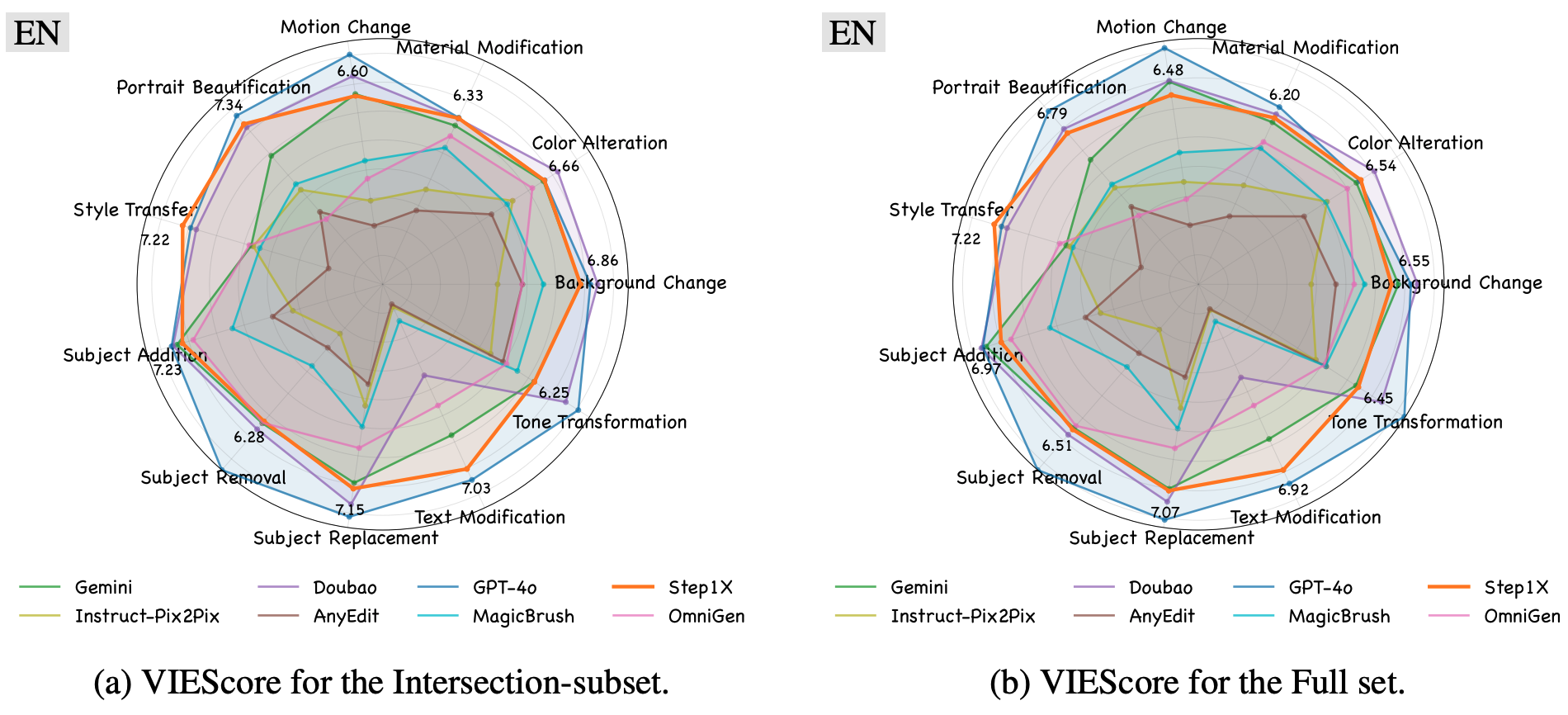

assets/eval_res_en.png

0 → 100644

512 KB

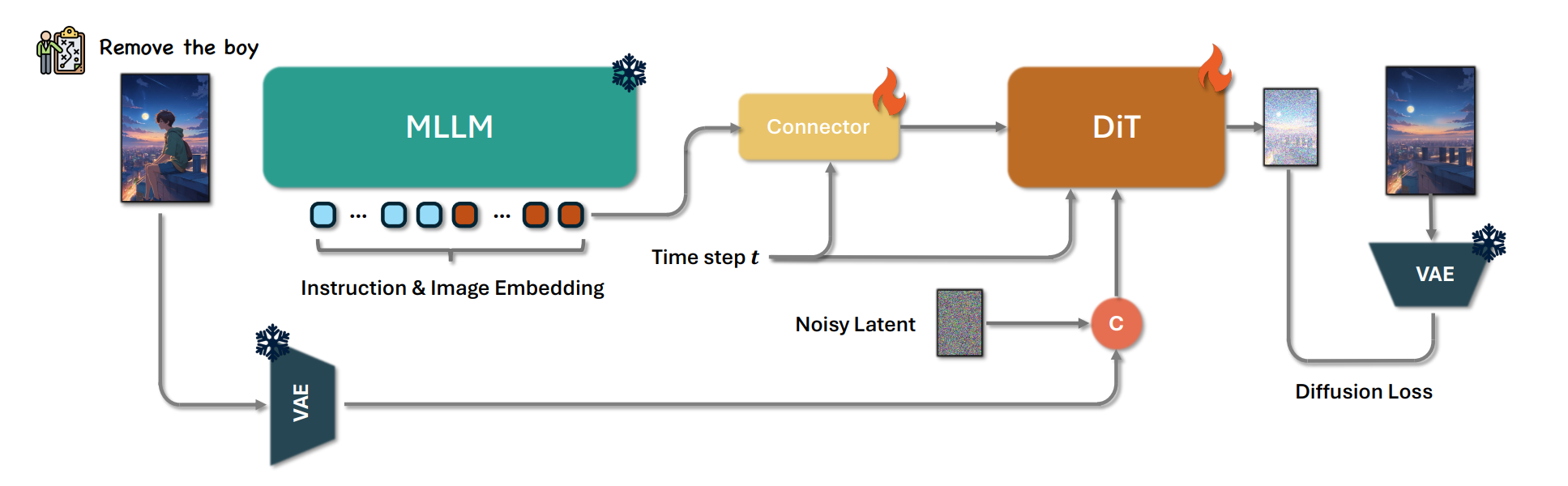

assets/frame_work.png

0 → 100644

249 KB

assets/image_edit_demo.gif

0 → 100644

assets/logo.png

0 → 100644

6.71 KB

assets/results_show.png

0 → 100644

2.36 MB

docker/Dockerfile

0 → 100644