Commit 0b3ddec6540d7fc7fb59c1b6184a5e6c9e1d32e0

Initial commit

Showing

.gitignore

0 → 100644

LICENSE

0 → 100644

README.md

0 → 100644

README_orgin.md

0 → 100644

api/call_remote_server.py

0 → 100644

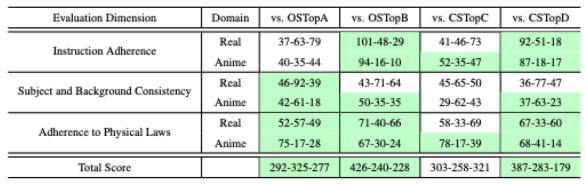

assets/compare_1.png

0 → 100644

43.7 KB

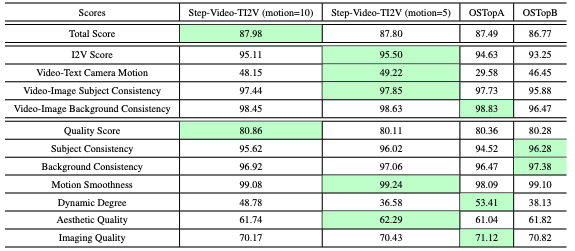

assets/compare_2.png

0 → 100644

53.6 KB

assets/demo.png

0 → 100644

4.71 MB

assets/logo.png

0 → 100644

6.71 KB

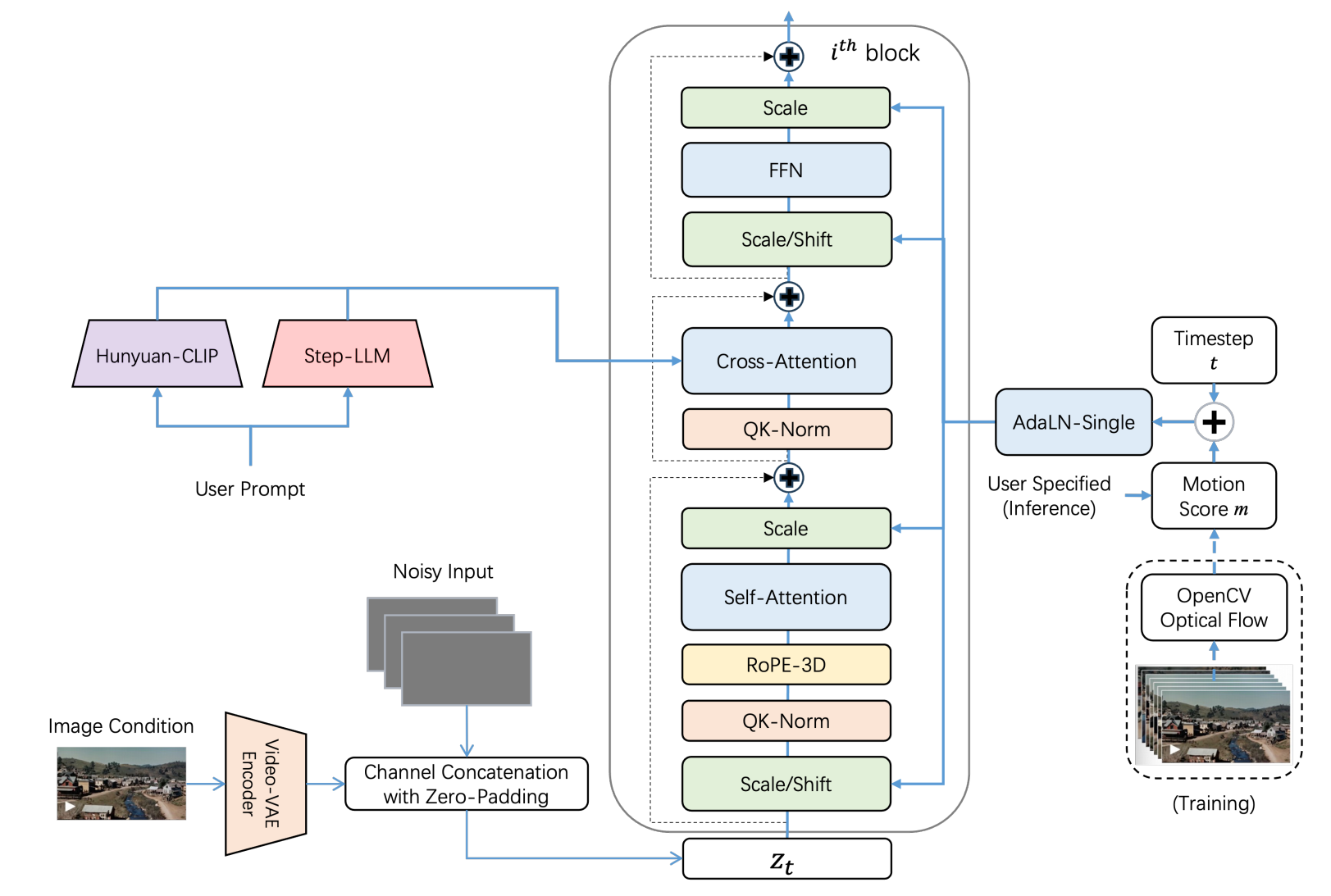

assets/model.png

0 → 100644

266 KB

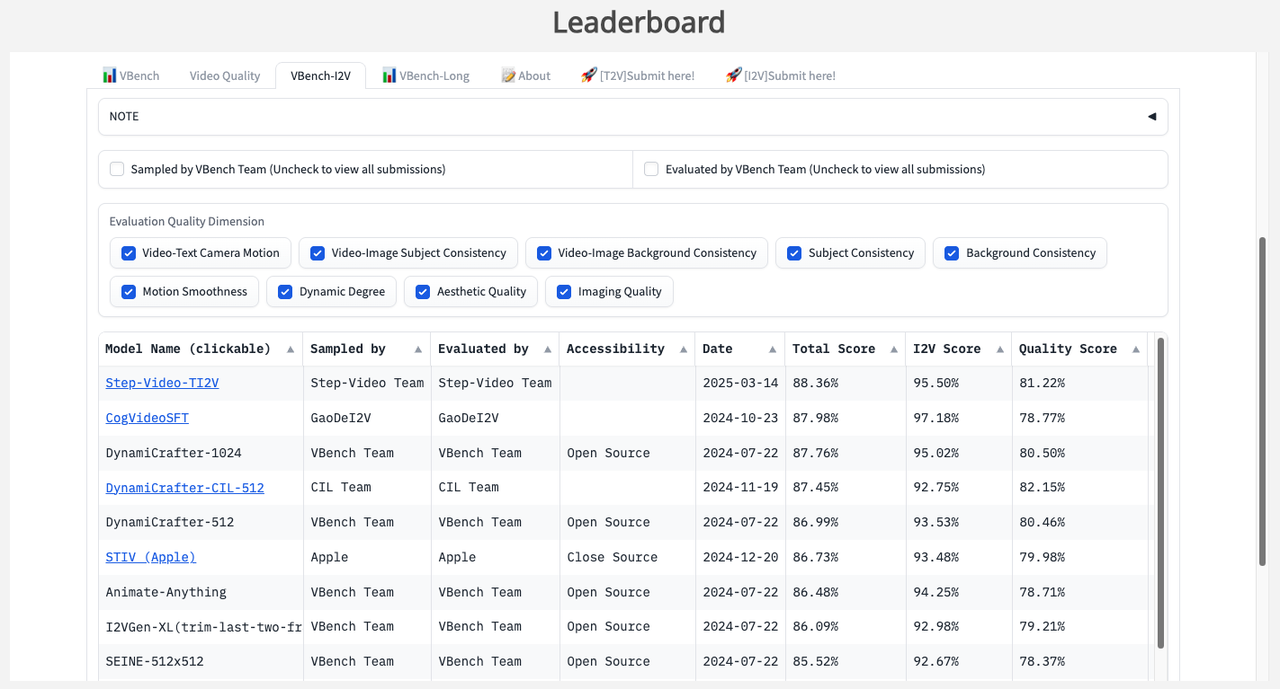

assets/vbench.png

0 → 100644

258 KB

assets/笑起来-2025-05-13.mp4

0 → 100644

File added

docker/Dockerfile

0 → 100644

fix.sh

0 → 100644

icon.png

0 → 100644

70.5 KB

model.properties

0 → 100644

modified/config.py

0 → 100644

modified/envs.py

0 → 100644

run.sh

0 → 100644

run_parallel.py

0 → 100644