git init

Showing

README.md

0 → 100644

File added

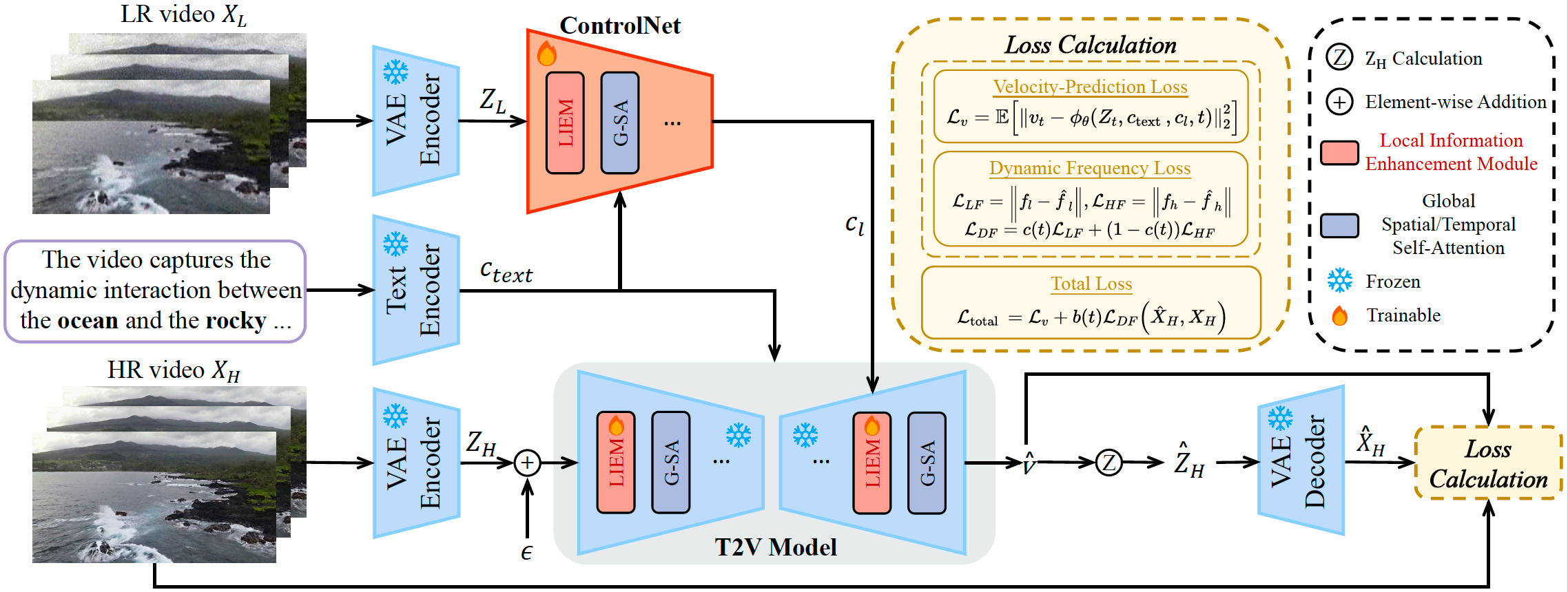

assets/overview.png

0 → 100644

686 KB

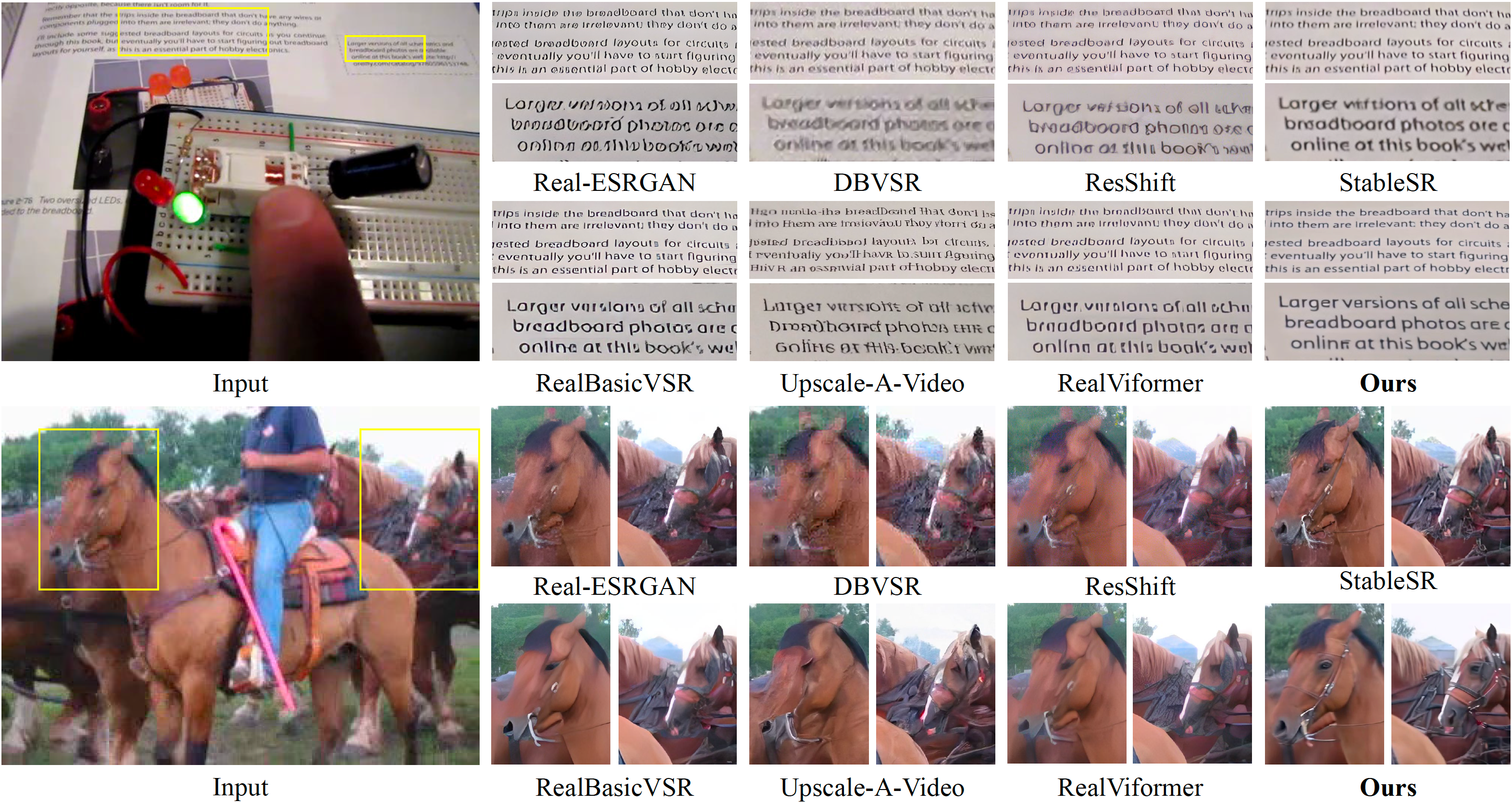

assets/real_world.png

0 → 100644

6.48 MB

assets/teaser.png

0 → 100644

5.36 MB

cogvideox-based/README.md

0 → 100644

File added

File added

File added

File added

File added