Initial commit

figures/losses.png

0 → 100644

39.1 KB

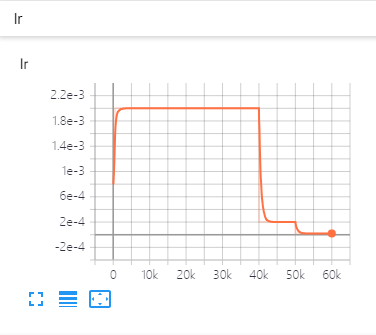

figures/lr.png

0 → 100644

13.2 KB

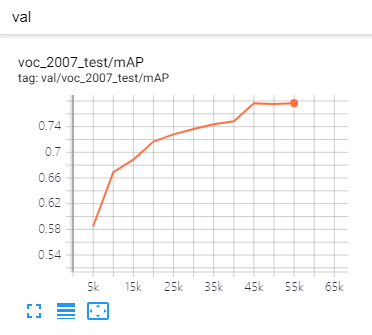

figures/metrics.png

0 → 100644

outputs/.gitignore

0 → 100644

requirements.txt

0 → 100644

    torch>=1.3
    torchvision>=0.3
    yacs
    tqdm
    opencv-python
    vizer
    \ No newline at end of file
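requirements.txt uses only two forms: a bare package name, or `name>=version` as a lower bound. The small standard-library sketch below shows what those two forms mean. It is not how pip resolves requirements. It ignores the full PEP 440/PEP 508 grammar (other operators, extras, environment markers, pre-releases). The helpers `parse_requirement` and `satisfies` are hypothetical and are not part of this repository.

```python
import re
from typing import NamedTuple, Optional, Tuple

# Contents of requirements.txt as listed in this commit.
REQUIREMENTS = """torch>=1.3
torchvision>=0.3
yacs
tqdm
opencv-python
vizer"""

# Only the two forms used in this file: "name" and "name>=X.Y[.Z...]".
_LINE = re.compile(r"^\s*([A-Za-z0-9_.\-]+)\s*(?:>=\s*([0-9]+(?:\.[0-9]+)*))?\s*$")
_RELEASE = re.compile(r"[0-9]+(?:\.[0-9]+)*")


class Requirement(NamedTuple):
    name: str
    min_version: Optional[Tuple[int, ...]]  # None means any version is accepted


def _as_tuple(version: str) -> Tuple[int, ...]:
    return tuple(int(part) for part in version.split("."))


def parse_requirement(line: str) -> Requirement:
    """Split one line into a package name and an optional lower bound."""
    match = _LINE.match(line)
    if match is None:
        raise ValueError(f"unsupported requirement syntax: {line!r}")
    name, version = match.groups()
    return Requirement(name, _as_tuple(version) if version else None)


def satisfies(req: Requirement, installed: str) -> bool:
    """Check an installed version string against the requirement's >= bound."""
    if req.min_version is None:
        return True
    # Use only the leading numeric release, e.g. "1.13.1+cu117" -> (1, 13, 1).
    release = _RELEASE.match(installed)
    if release is None:
        raise ValueError(f"unparseable version: {installed!r}")
    have, want = _as_tuple(release.group(0)), req.min_version
    # Pad with zeros so that "1.3" and "1.3.0" compare as equal.
    width = max(len(have), len(want))
    return have + (0,) * (width - len(have)) >= want + (0,) * (width - len(want))


if __name__ == "__main__":
    for req in map(parse_requirement, REQUIREMENTS.splitlines()):
        print(req.name, req.min_version)
```

For an actual install, `pip install -r requirements.txt` applies the complete specifier rules. The sketch only illustrates that `torch>=1.3` accepts, for example, 1.13.1 but rejects 1.2.0. The unpinned entries (`yacs`, `tqdm`, `opencv-python`, `vizer`) accept any version.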

setup.py

0 → 100644

ssd/__init__.py

0 → 100644

ssd/config/__init__.py

0 → 100644

ssd/config/defaults.py

0 → 100644

ssd/config/path_catlog.py

0 → 100644

ssd/data/__init__.py

0 → 100644

ssd/data/build.py

0 → 100644

ssd/data/datasets/coco.py

0 → 100644

ssd/data/datasets/voc.py

0 → 100644