Initial commit


.gitignore (0 → 100644)
DEVELOP_GUIDE.md (0 → 100644)
LICENSE (0 → 100644)
MANIFEST.in (0 → 100644)
README.md (0 → 100644)
TROUBLESHOOTING.md (0 → 100644)
demo.py (0 → 100644)
demo/000342.jpg (0 → 100644, 51.2 KB)
demo/000542.jpg (0 → 100644, 113 KB)
demo/003123.jpg (0 → 100644, 80.3 KB)
demo/004101.jpg (0 → 100644, 93.1 KB)
demo/008591.jpg (0 → 100644, 94.3 KB)

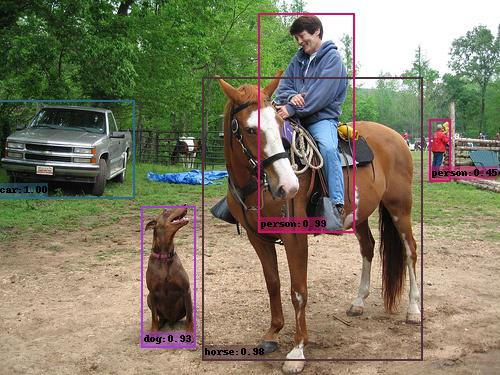

figures/004545.jpg (0 → 100644, 53 KB)