Add flops counter (#1127)

* add flops counter
* minor fix
* add `forward_dummy()` for most detectors
* add documentation for some tools
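A FLOPs counter usually works by summing a per-layer cost over the model. The snippet below is an illustration only and is not the code in `mmdet/utils/flops_counter.py`. Its helpers, `conv2d_output_size` and `conv2d_flops`, are hypothetical and compute the cost of a single 2-D convolution from its shapes. They count multiply-accumulates (MACs), which many counters report under the name FLOPs.

```python
def conv2d_output_size(size, kernel, stride=1, padding=0, dilation=1):
    """Spatial output size of a 2-D convolution along one axis."""
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1


def conv2d_flops(in_ch, out_ch, h, w, kernel, stride=1, padding=0,
                 groups=1, bias=True, batch=1):
    """Hypothetical helper: multiply-accumulates of one Conv2d forward pass.

    Each output element needs (in_ch // groups) * kernel * kernel MACs;
    a bias adds one extra addition per output element.
    """
    h_out = conv2d_output_size(h, kernel, stride, padding)
    w_out = conv2d_output_size(w, kernel, stride, padding)
    out_elements = batch * out_ch * h_out * w_out
    macs = out_elements * (in_ch // groups) * kernel * kernel
    if bias:
        macs += out_elements  # one add per output element for the bias term
    return macs
```

Take, for example, a 7×7, stride-2 stem convolution from 3 to 64 channels on a 224×224 input. `conv2d_flops(3, 64, 224, 224, 7, stride=2, padding=3, bias=False)` gives 118,013,952 MACs (about 118M). A whole-model counter would apply a rule like this to every layer and add up the results.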


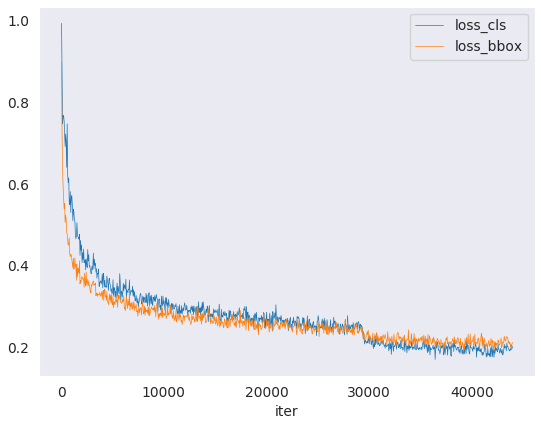

Files added:

* `demo/loss_curve.png` (new file, mode `0 → 100644`, 36.6 KB)
* `mmdet/utils/flops_counter.py` (new file, mode `0 → 100644`)
* `tools/get_flops.py` (new file, mode `0 → 100644`)