First add

Showing

File added

File added

File added

File added

File added

File added

File added

File added

File added

File added

This diff is collapsed.

docs/conf.py

0 → 100644

docs/contact.md

0 → 100644

docs/hugging_face.md

0 → 100644

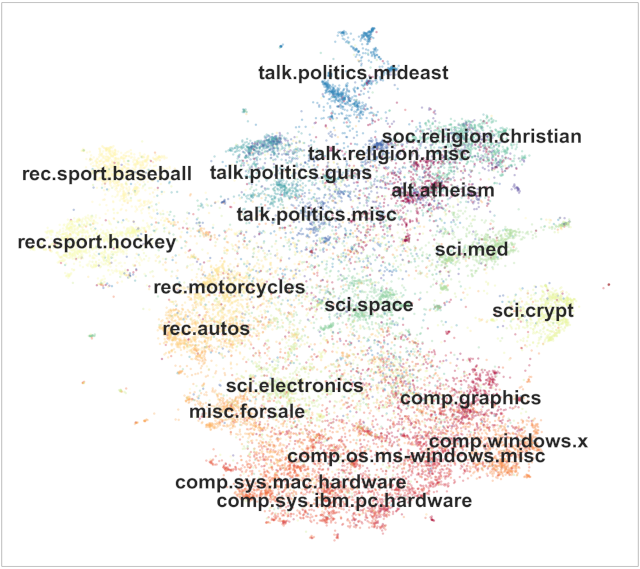

docs/img/20news_semantic.png

0 → 100644

331 KB

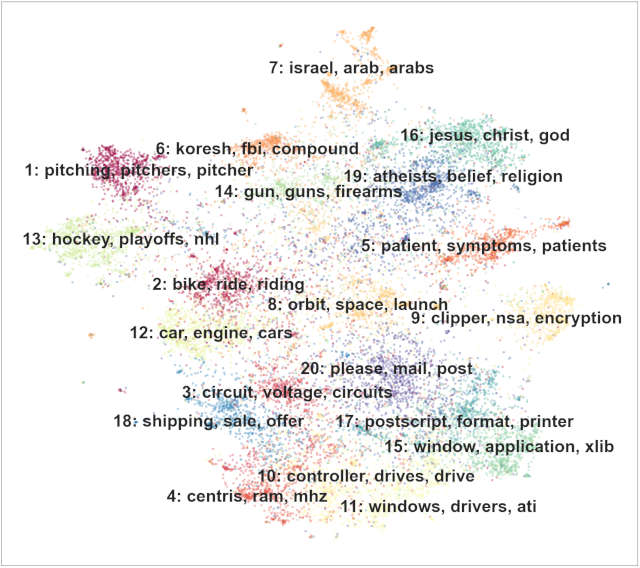

docs/img/20news_top2vec.png

0 → 100644

347 KB