First add

Showing

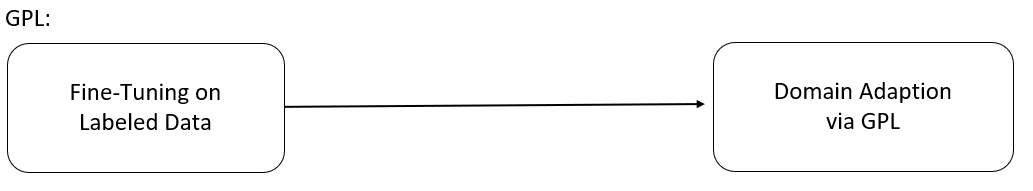

docs/img/gpl_overview.png

0 → 100644

8.97 KB

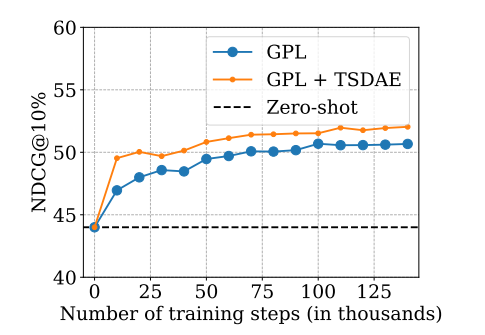

docs/img/gpl_steps.png

0 → 100644

docs/img/logo.png

0 → 100644

docs/img/logo.svg

0 → 100644

docs/img/logo.xcf

0 → 100644

docs/img/logo_org.png

0 → 100644

docs/installation.md

0 → 100644