"Initial commit"

Showing

.clang-format

0 → 100644

.gitignore

0 → 100644

.watchmanconfig

0 → 100644

CODE_OF_CONDUCT.md

0 → 100644

CONTRIBUTING.md

0 → 100644

INSTALL.md

0 → 100644

LICENSE

0 → 100644

LICENSE_cctorch

0 → 100644

MANIFEST.in

0 → 100644

README.md

0 → 100644

README_ori.md

0 → 100644

RELEASE_NOTES.md

0 → 100644

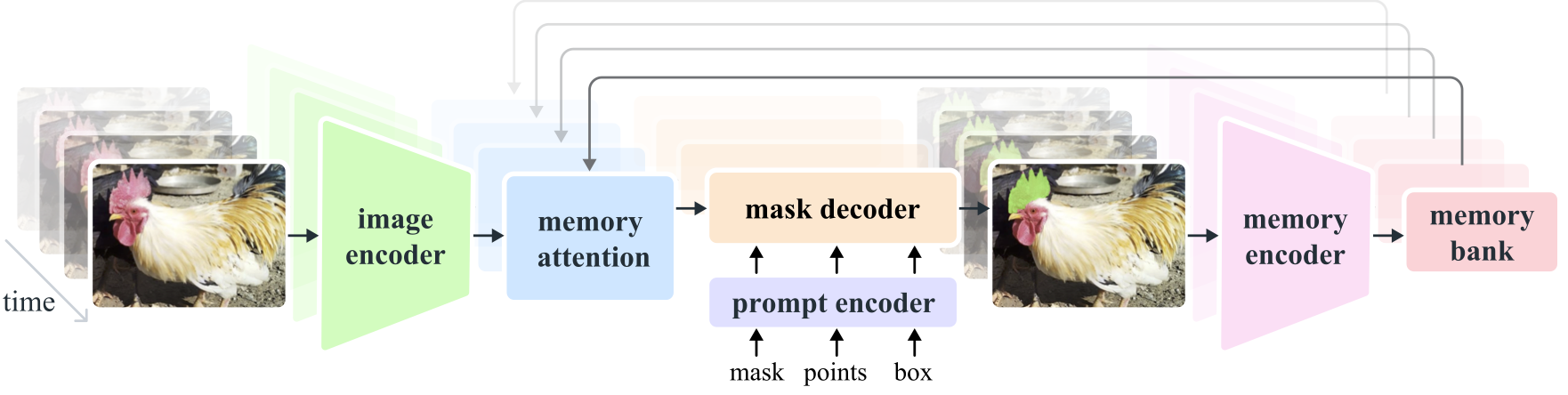

assets/model_diagram.png

0 → 100644

354 KB

assets/sa_v_dataset.jpg

0 → 100644

545 KB

backend.Dockerfile

0 → 100644

demo/.gitignore

0 → 100644

demo/README.md

0 → 100644

demo/backend/server/app.py

0 → 100644