Simplify code

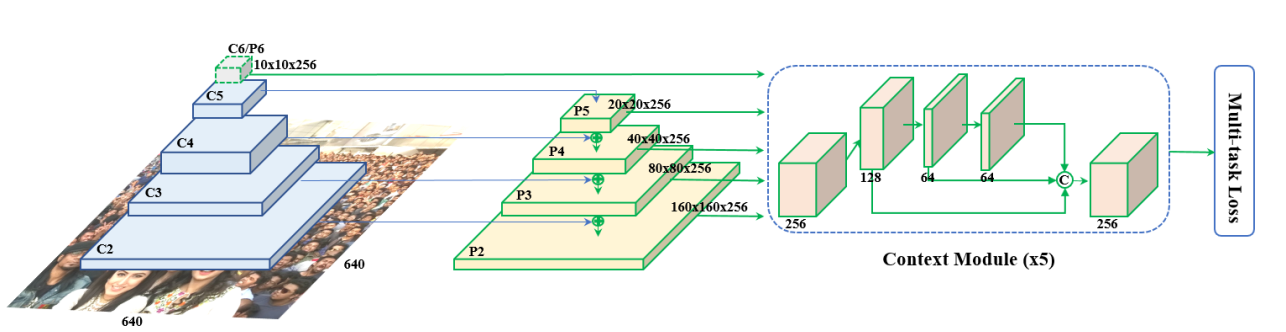

Doc/Image/RetinaFace_01.png

0 → 100644

152 KB

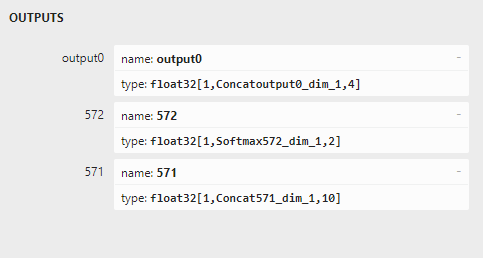

Doc/Image/RetinaFace_02.png

0 → 100644

10.1 KB

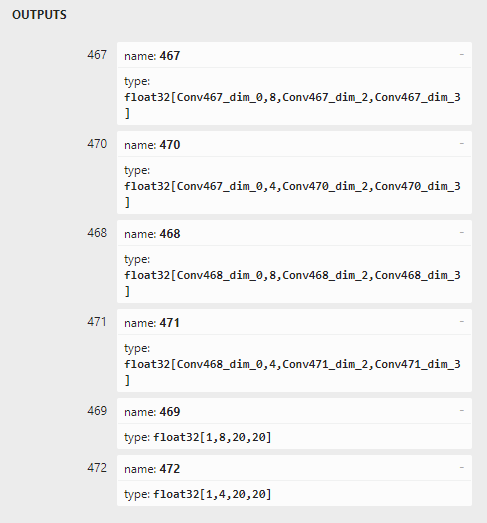

Doc/Image/RetinaFace_03.png

0 → 100644

22.1 KB

Doc/Tutorial_Cpp.md

0 → 100644

Doc/Tutorial_Python.md

0 → 100644

File moved

Src/Sample.cpp

deleted

100644 → 0

Src/Sample.h

deleted

100644 → 0