Add RetinaFace C++ sample

File moved (7 files)

Resource/Configuration.xml

0 → 100644

187 KB

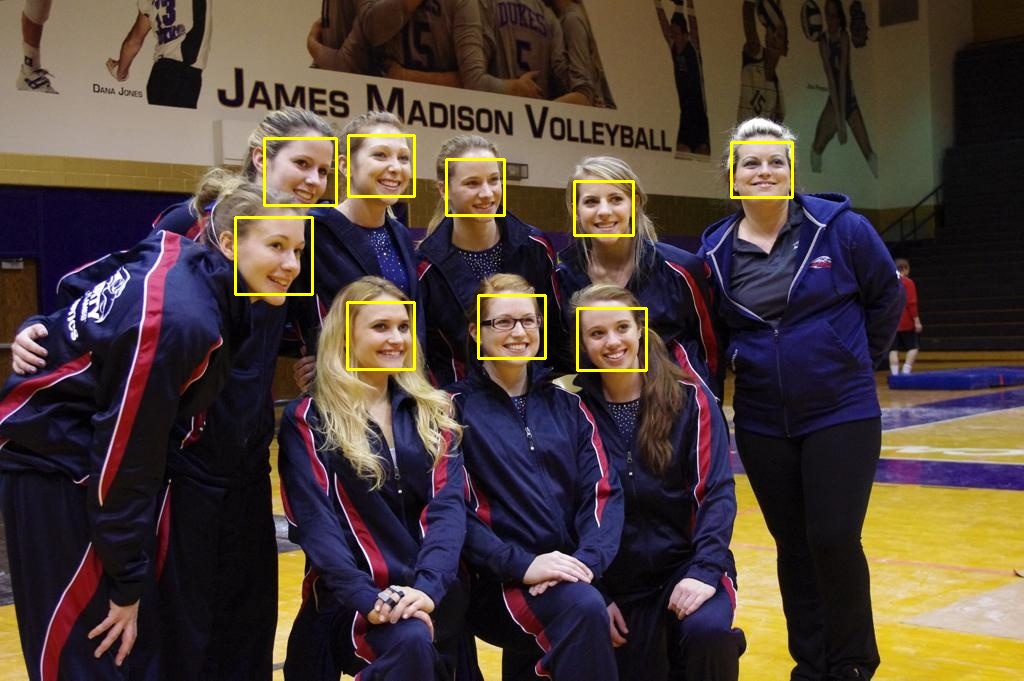

Resource/Images/Result_1.jpg

0 → 100644

193 KB

Resource/Images/Result_2.jpg

0 → 100644

194 KB

File added

Src/Sample.cpp

0 → 100644

Src/Sample.h

0 → 100644