Adding TCN.

research/tcn/eval.py

0 → 100644

82.3 KB

204 KB

96.3 KB

research/tcn/g3doc/im.gif

0 → 100644

This image diff could not be displayed because it is too large.

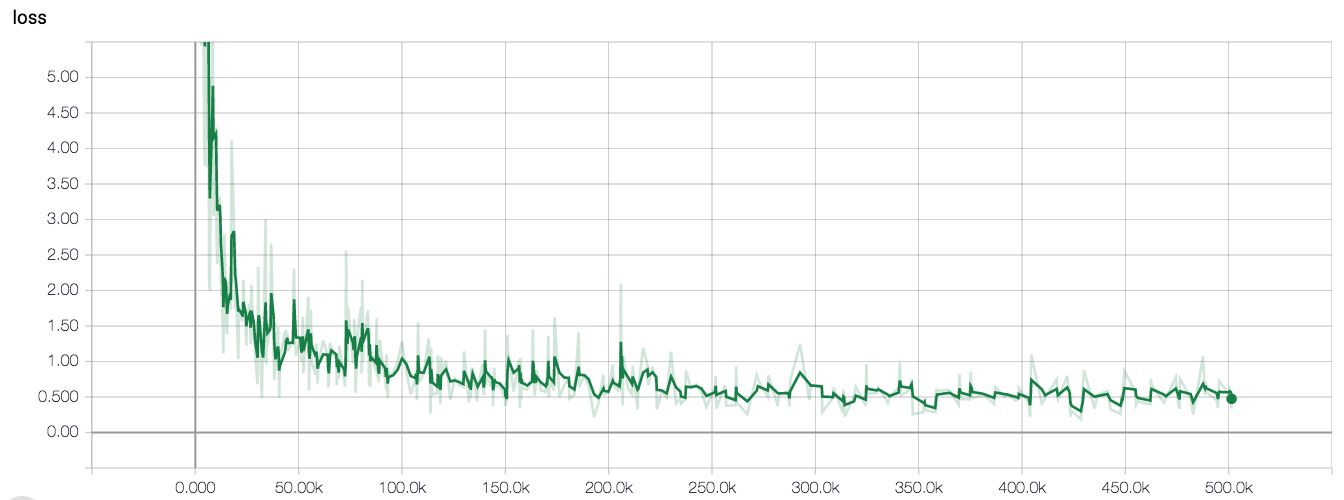

research/tcn/g3doc/loss.png

0 → 100644

103 KB

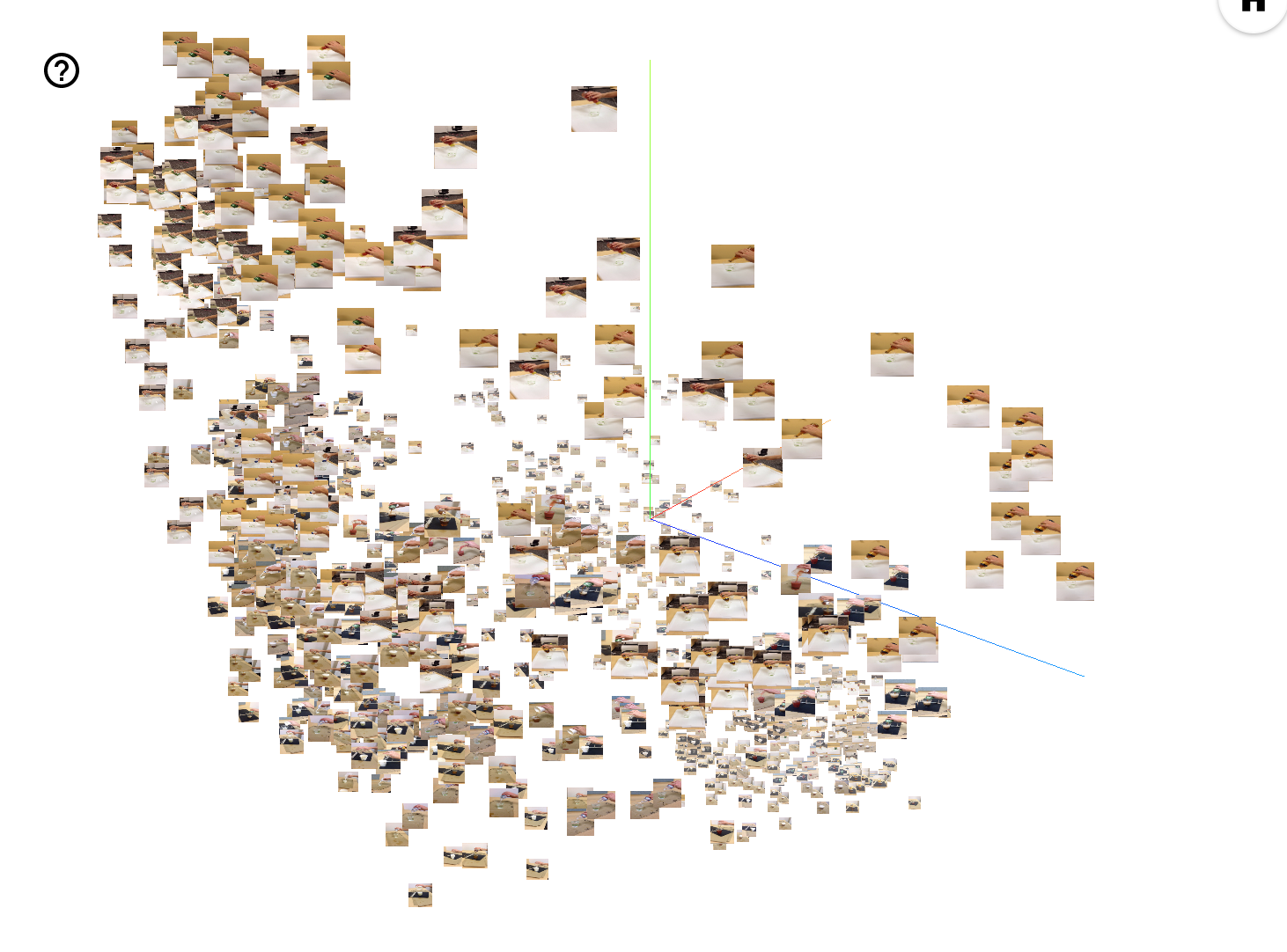

research/tcn/g3doc/pca.png

0 → 100644

924 KB

109 KB

research/tcn/labeled_eval.py

0 → 100644

This diff is collapsed.

research/tcn/model.py

0 → 100644

This diff is collapsed.

research/tcn/train.py

0 → 100644

This diff is collapsed.

research/tcn/utils/util.py

0 → 100644

This diff is collapsed.