Merge remote-tracking branch 'resnet50_onnxruntime/master'

3rdParty/InstallRBuild.sh (0 → 100644)
CMakeLists.txt (0 → 100644)
Doc/Tutorial_Cpp.md (0 → 100644)
Doc/Tutorial_Python.md (0 → 100644)
Doc/images/.gitkeep (0 → 100644)
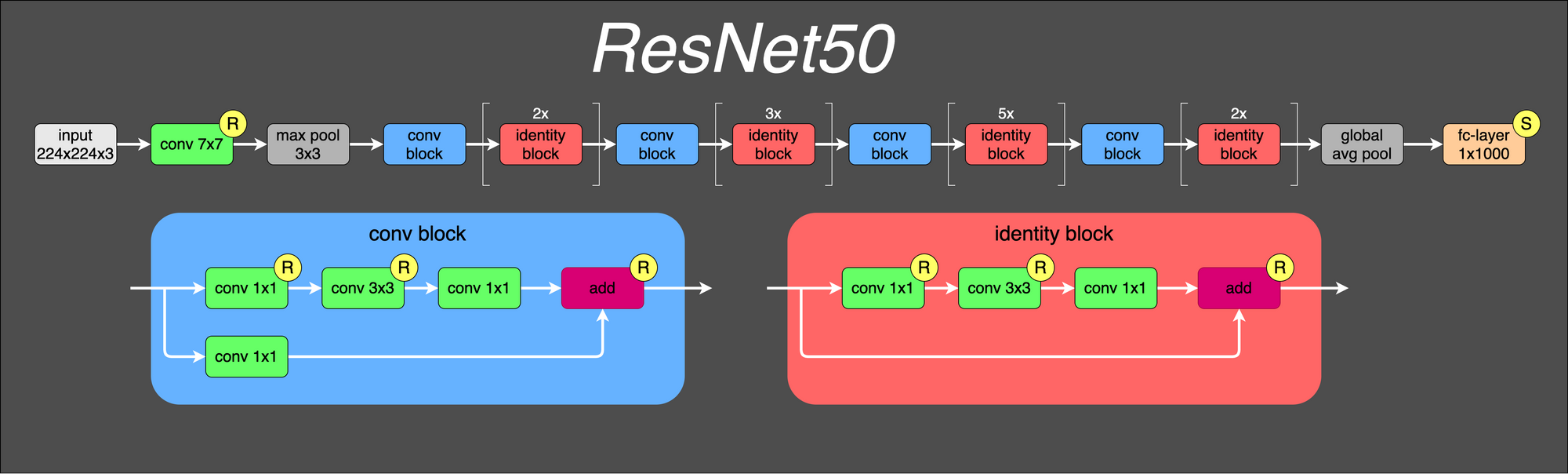

Doc/images/1.png (0 → 100644, 158 KB)
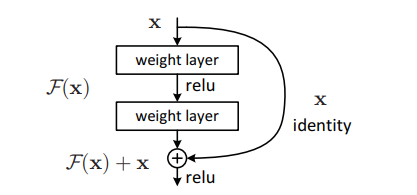

Doc/images/2.png (0 → 100644, 14.9 KB)
Doc/images/output_image.jpg (0 → 100644, 57.4 KB)
Python/Classifier.py (0 → 100644)
Python/requirements.txt (0 → 100644)
Resource/Configuration.xml (0 → 100644, 91.9 KB)