pyramid-flow

Showing

.gitignore

0 → 100644

Dockerfile

0 → 100644

LICENSE

0 → 100644

README.md

0 → 100644

README_official.md

0 → 100644

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

This source diff could not be displayed because it is too large. You can view the blob instead.

annotation/image_text.jsonl

0 → 100644

annotation/video_text.jsonl

0 → 100644

app.py

0 → 100644

app_multigpu.py

0 → 100644

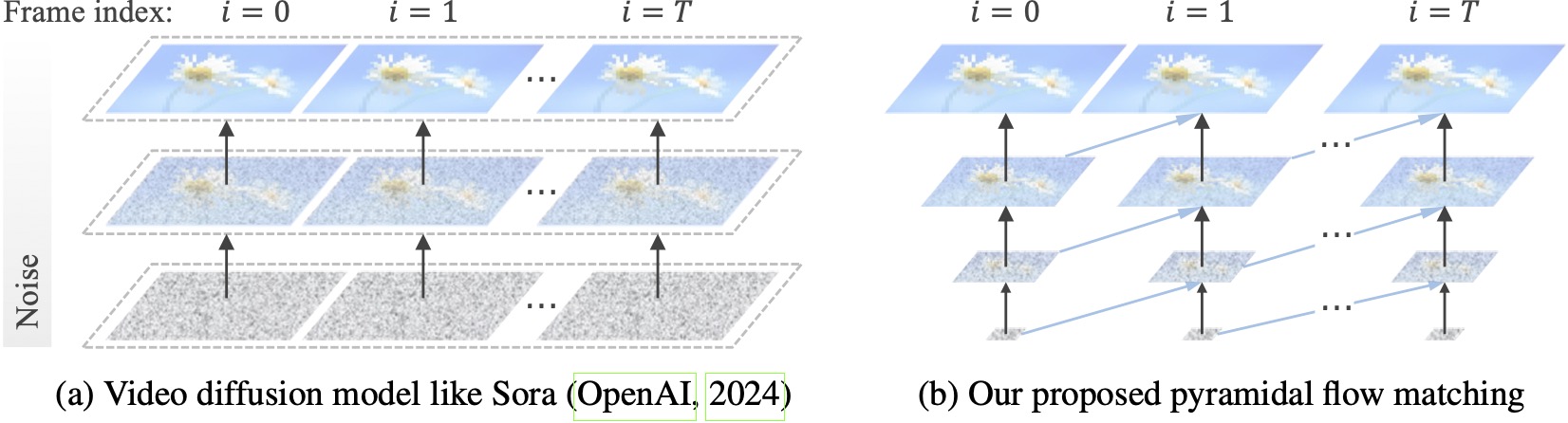

assets/motivation.jpg

0 → 100644

138 KB

assets/the_great_wall.jpg

0 → 100644

343 KB

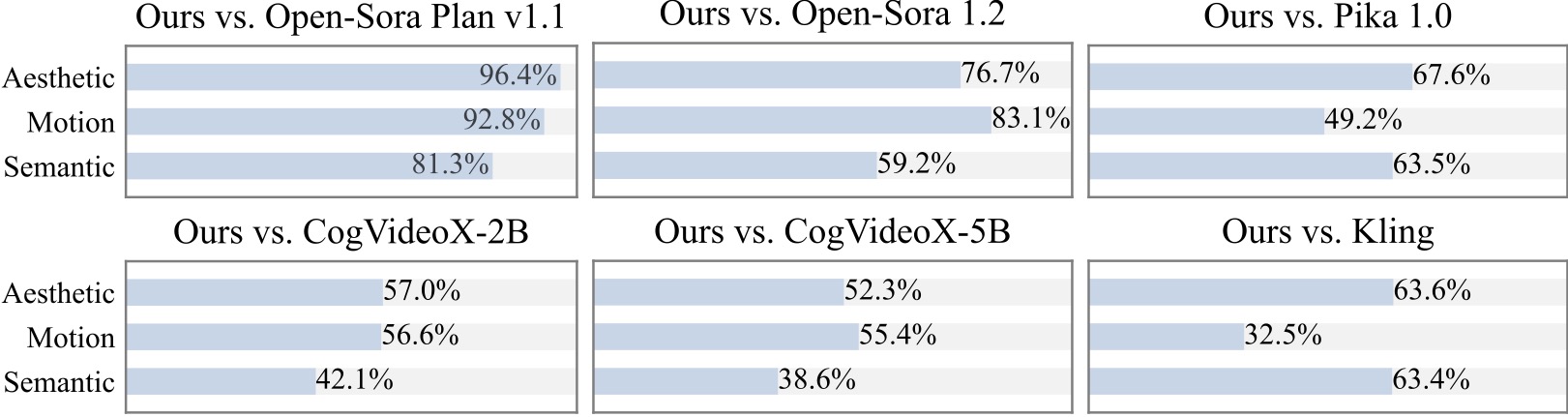

assets/user_study.jpg

0 → 100644

129 KB

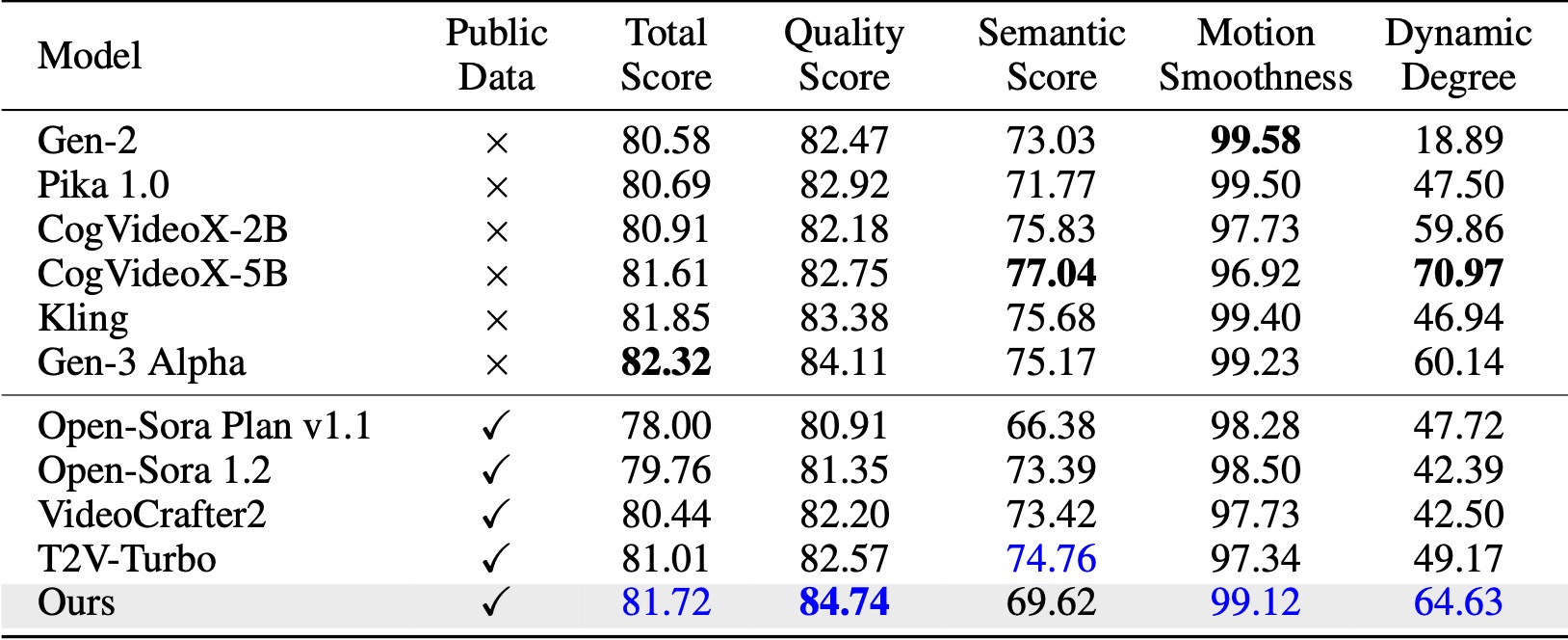

assets/vbench.jpg

0 → 100644

225 KB

causal_video_vae_demo.ipynb

0 → 100644

dataset/__init__.py

0 → 100644

dataset/bucket_loader.py

0 → 100644