Update

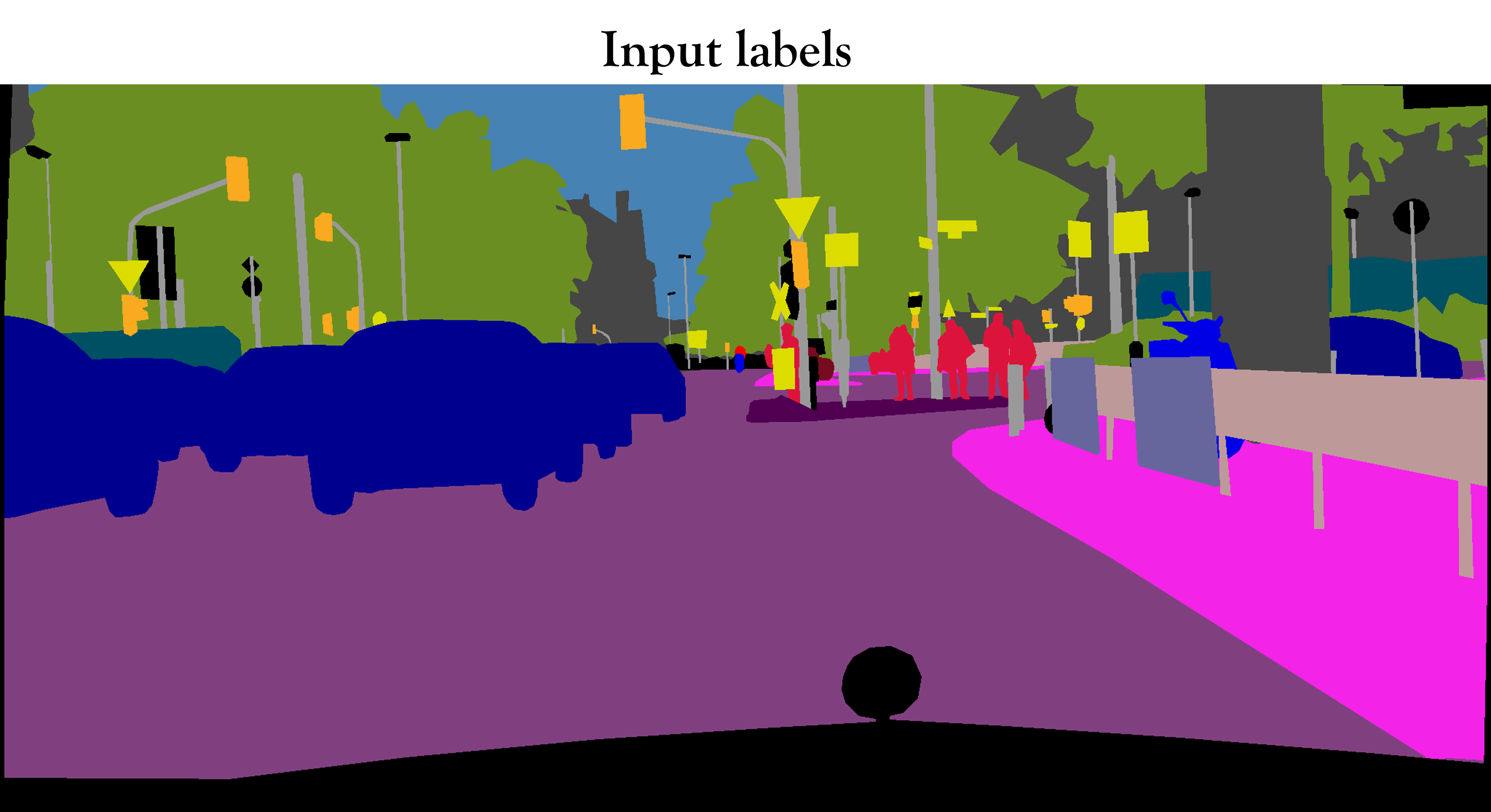

Showing

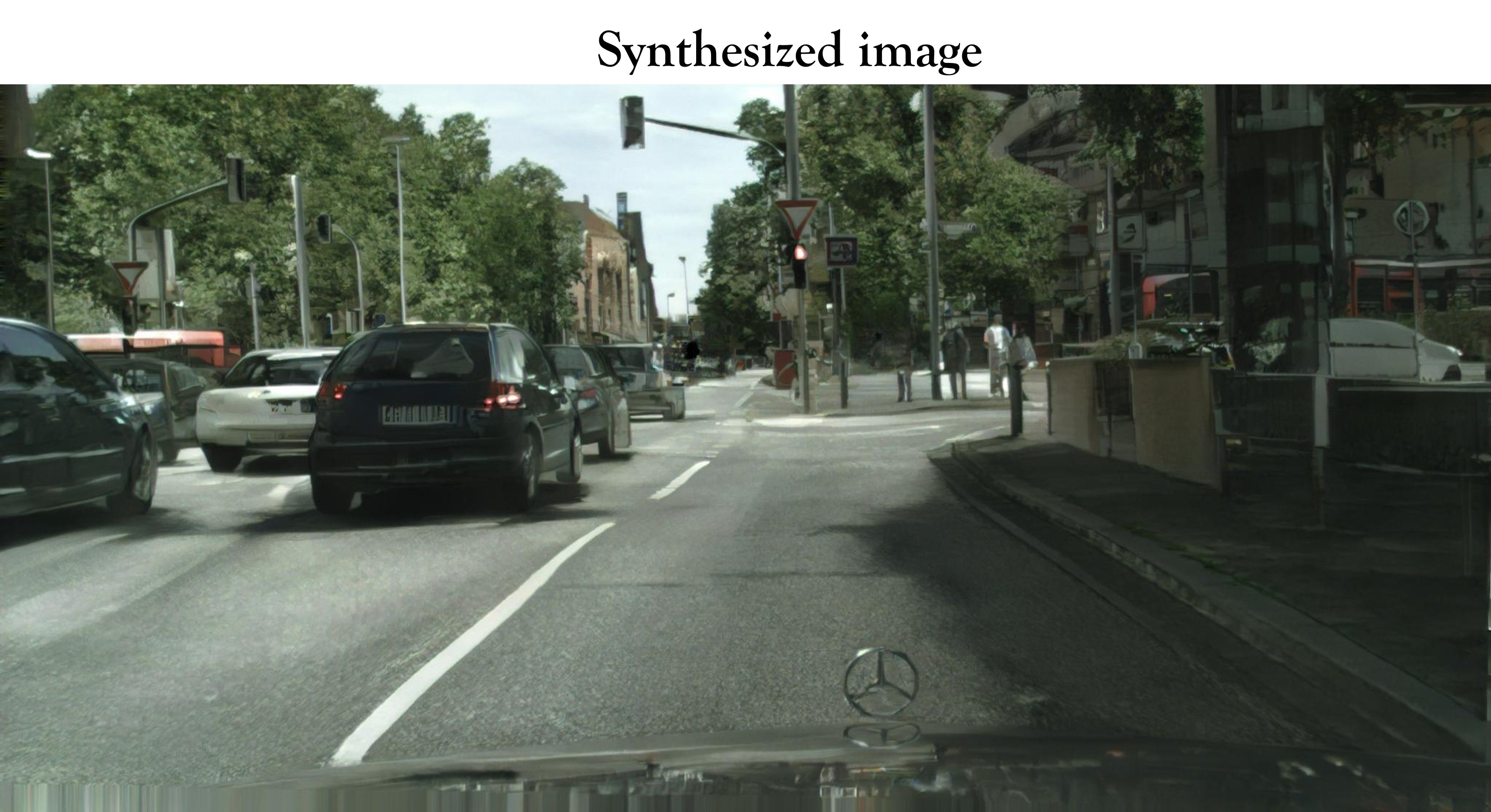

imgs/city_short.gif

deleted

100644 → 0

5.59 MB

208 KB

178 KB

193 KB

173 KB

imgs/face1_1.jpg

deleted

100644 → 0

134 KB

imgs/face1_2.jpg

deleted

100644 → 0

135 KB

imgs/face1_3.jpg

deleted

100644 → 0

125 KB

imgs/face2_1.jpg

deleted

100644 → 0

129 KB

imgs/face2_2.jpg

deleted

100644 → 0

118 KB

imgs/face2_3.jpg

deleted

100644 → 0

164 KB

imgs/face_short.gif

deleted

100644 → 0

1.43 MB

imgs/teaser_720.gif

deleted

100644 → 0

7.99 MB

8.84 MB

465 KB

imgs/teaser_ours.jpg

deleted

100644 → 0

657 KB

pix2pixHD_README.md

deleted

100644 → 0