"src/TransferBench.cpp" did not exist on "ff2a96c9f7bc17d420788bfd9a45667976f6e49c"

Initial commit

Showing

Dockerfile

0 → 100644

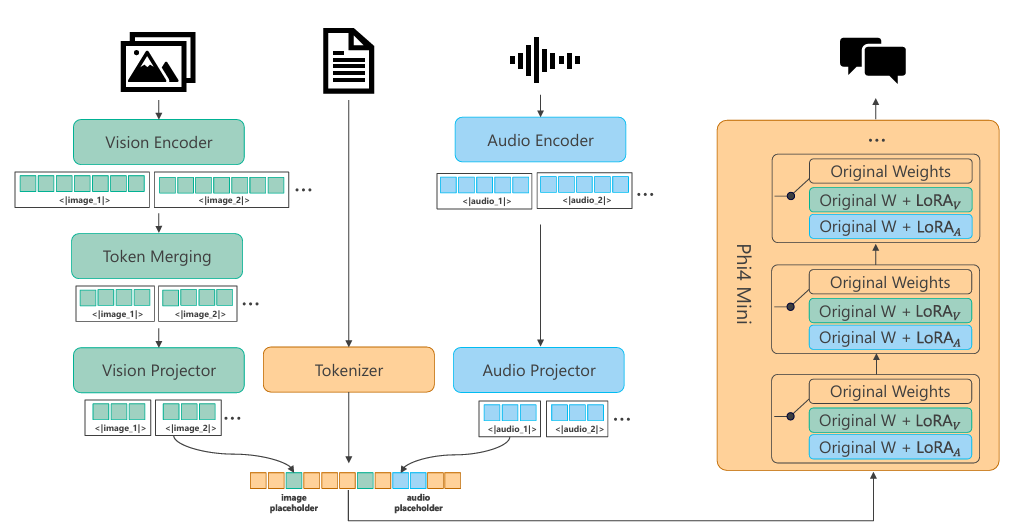

Pic/arch.png

0 → 100644

58.1 KB

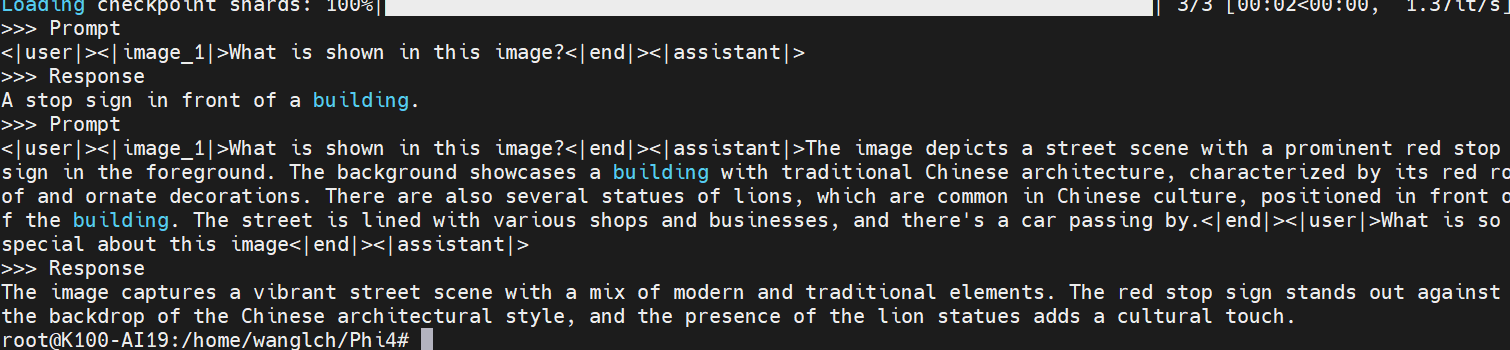

Pic/image.png

0 → 100644

45.6 KB

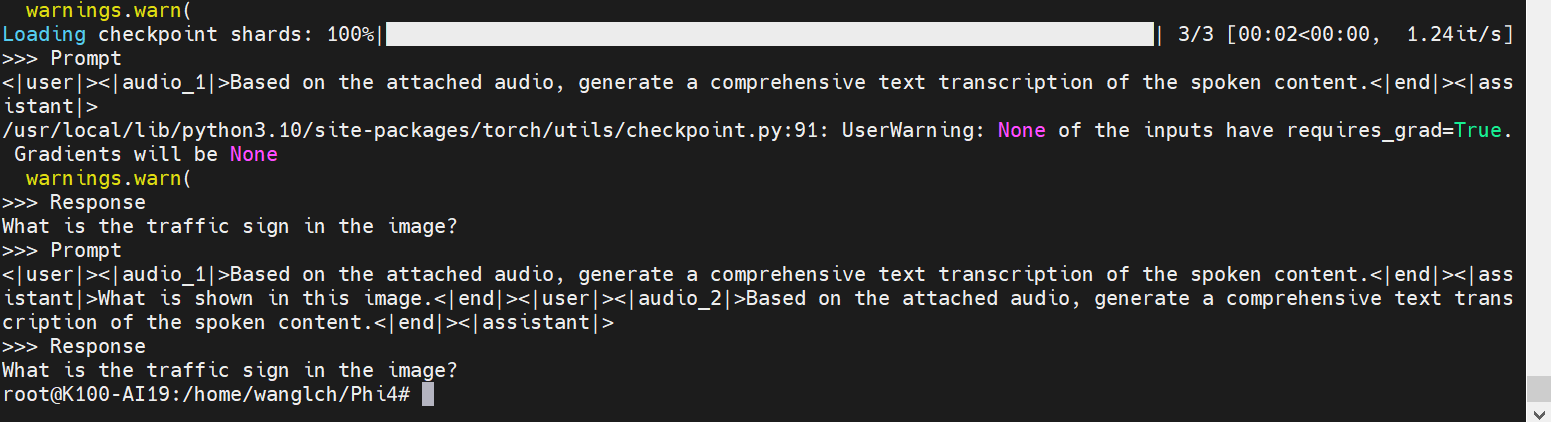

Pic/speech.png

0 → 100644

44.3 KB

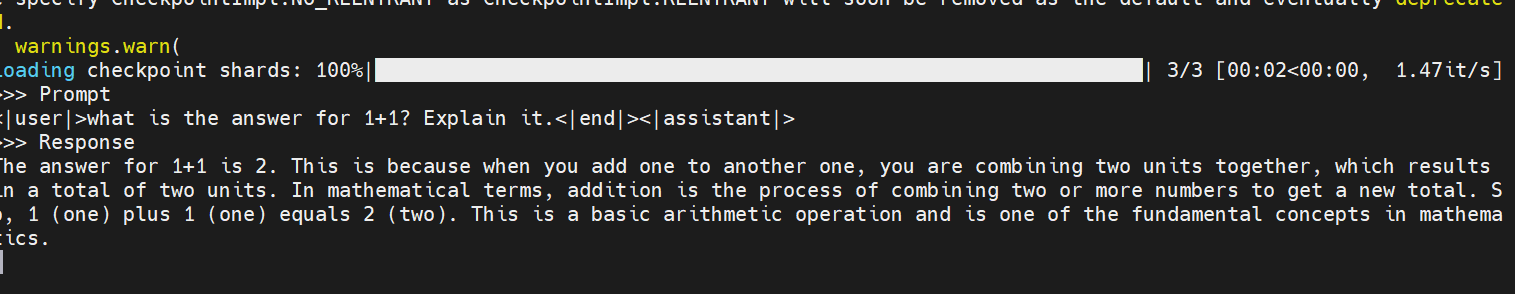

Pic/text.png

0 → 100644

28.3 KB

Pic/theory.png

0 → 100644

156 KB

README.md

0 → 100644

File added

File added

icon.png

0 → 100644

53.8 KB

model.properties

0 → 100644

phi4_finetune.sh

0 → 100644

phi4_speech_inference.py

0 → 100644

phi4_text_inference.py

0 → 100644

phi4_vision_inference.py

0 → 100644

requirements.txt

0 → 100644