"Initial commit"

.DS_Store

0 → 100644

File added

.gitignore

0 → 100644

LICENSE

0 → 100644

README.md

0 → 100644

README_ori.md

0 → 100644

doc/.DS_Store

0 → 100644

File added

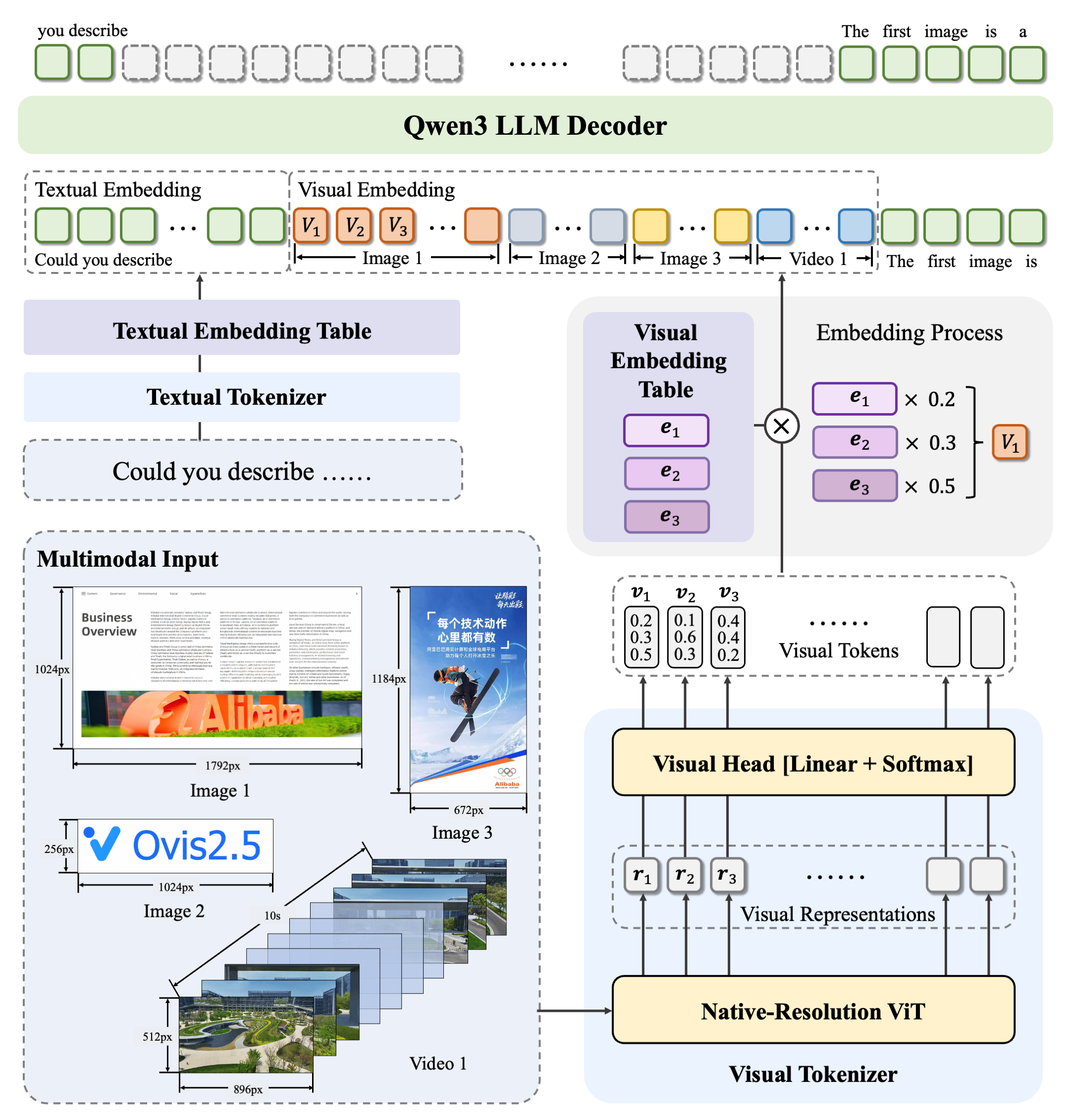

doc/Ovis25_arch.png

0 → 100644

1.24 MB

doc/Ovis2_5_Tech_Report.pdf

0 → 100644

File added

767 KB

doc/license/LLAMA3_LICENSE

0 → 100644

doc/license/QWEN_LICENSE

0 → 100644

doc/ovis_logo.png

0 → 100644

15.9 KB

449 KB

256 KB

415 KB

254 KB

312 KB

207 KB

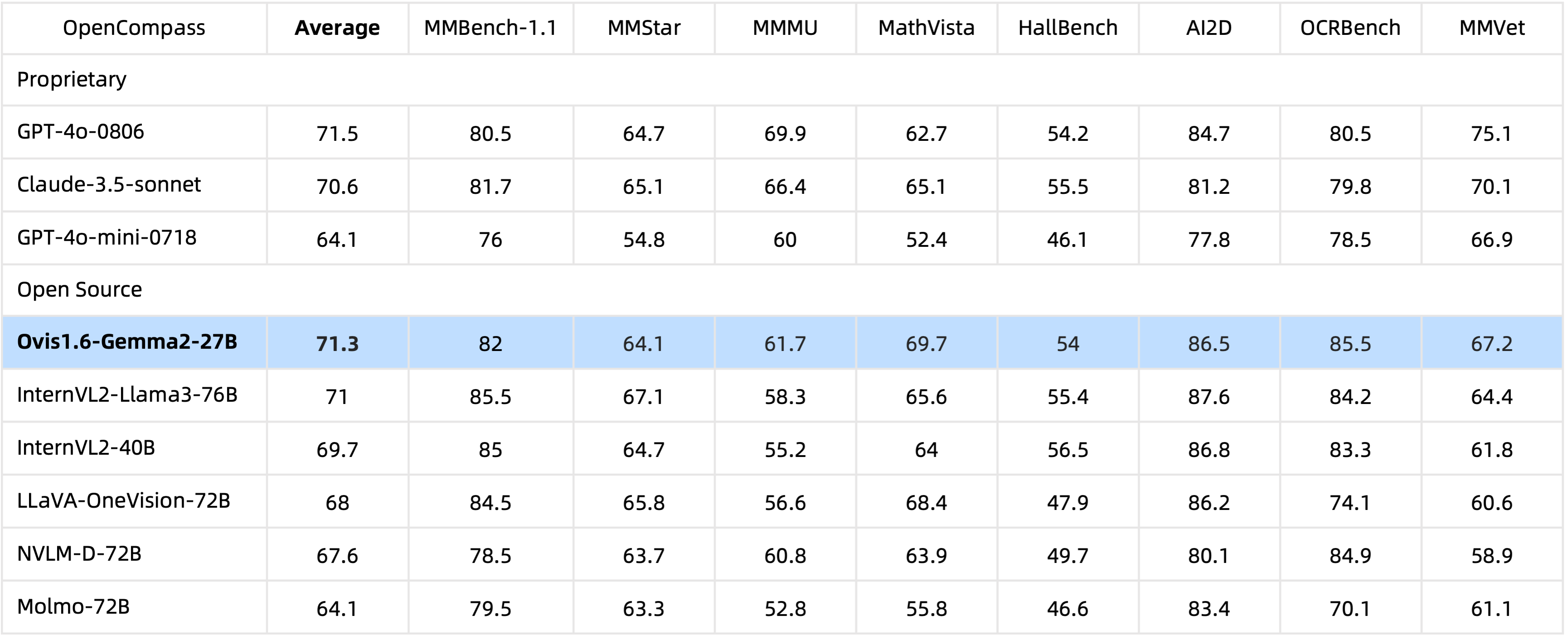

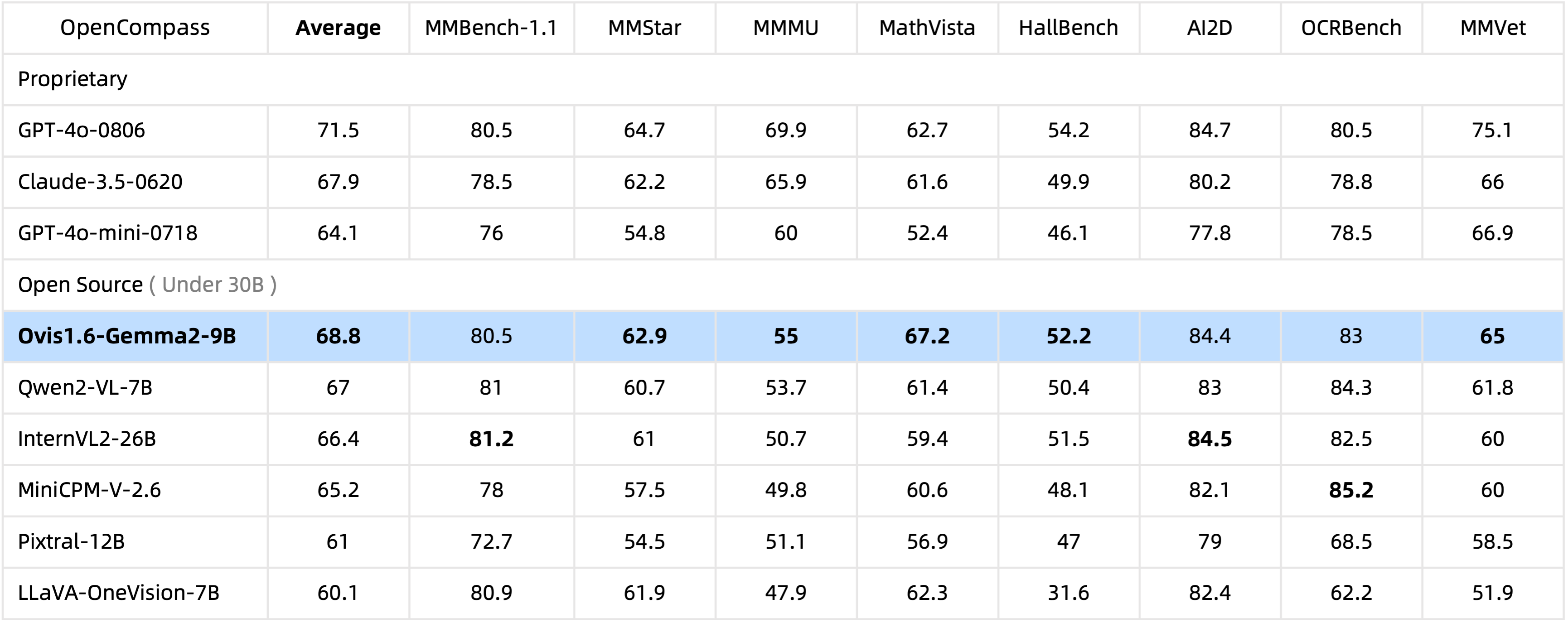

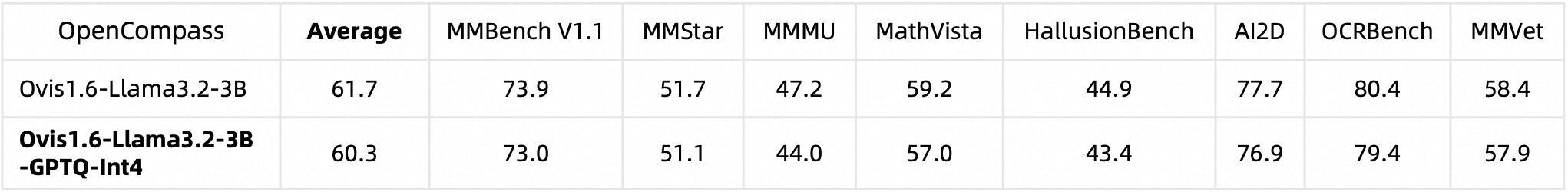

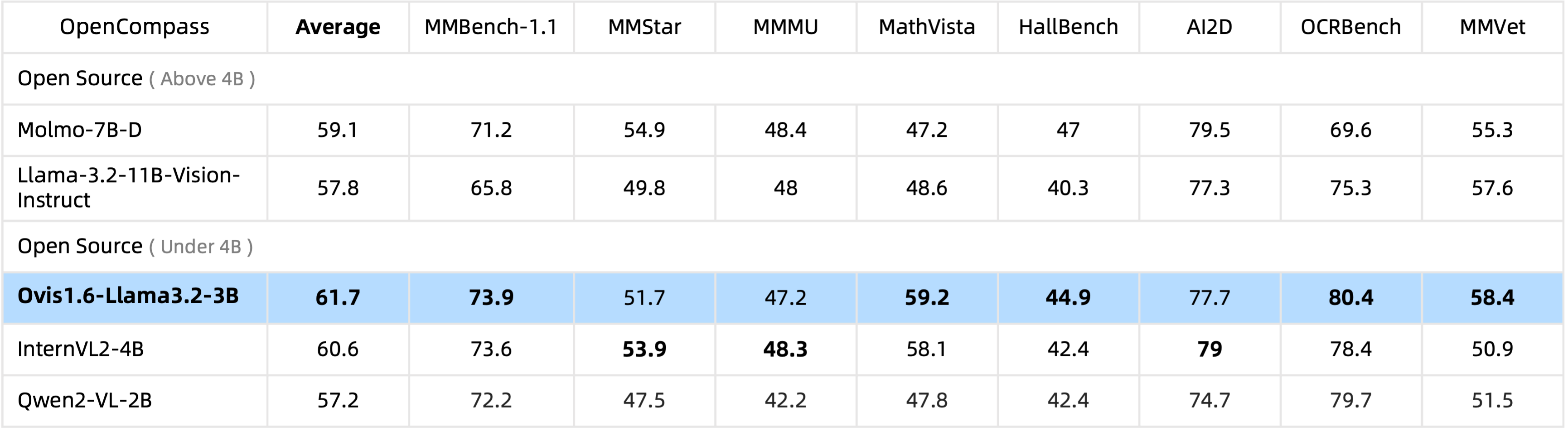

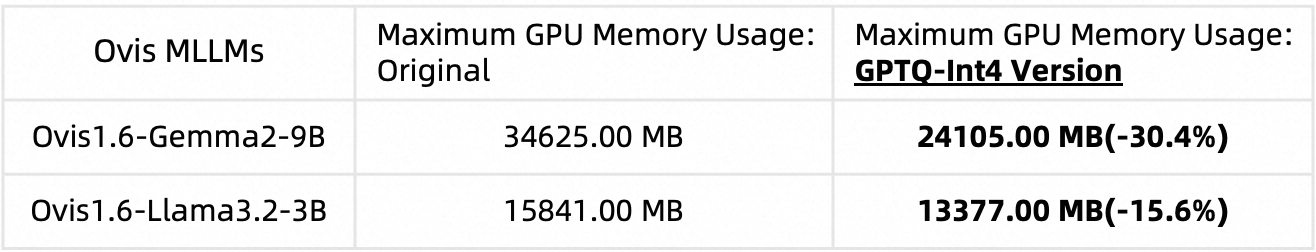

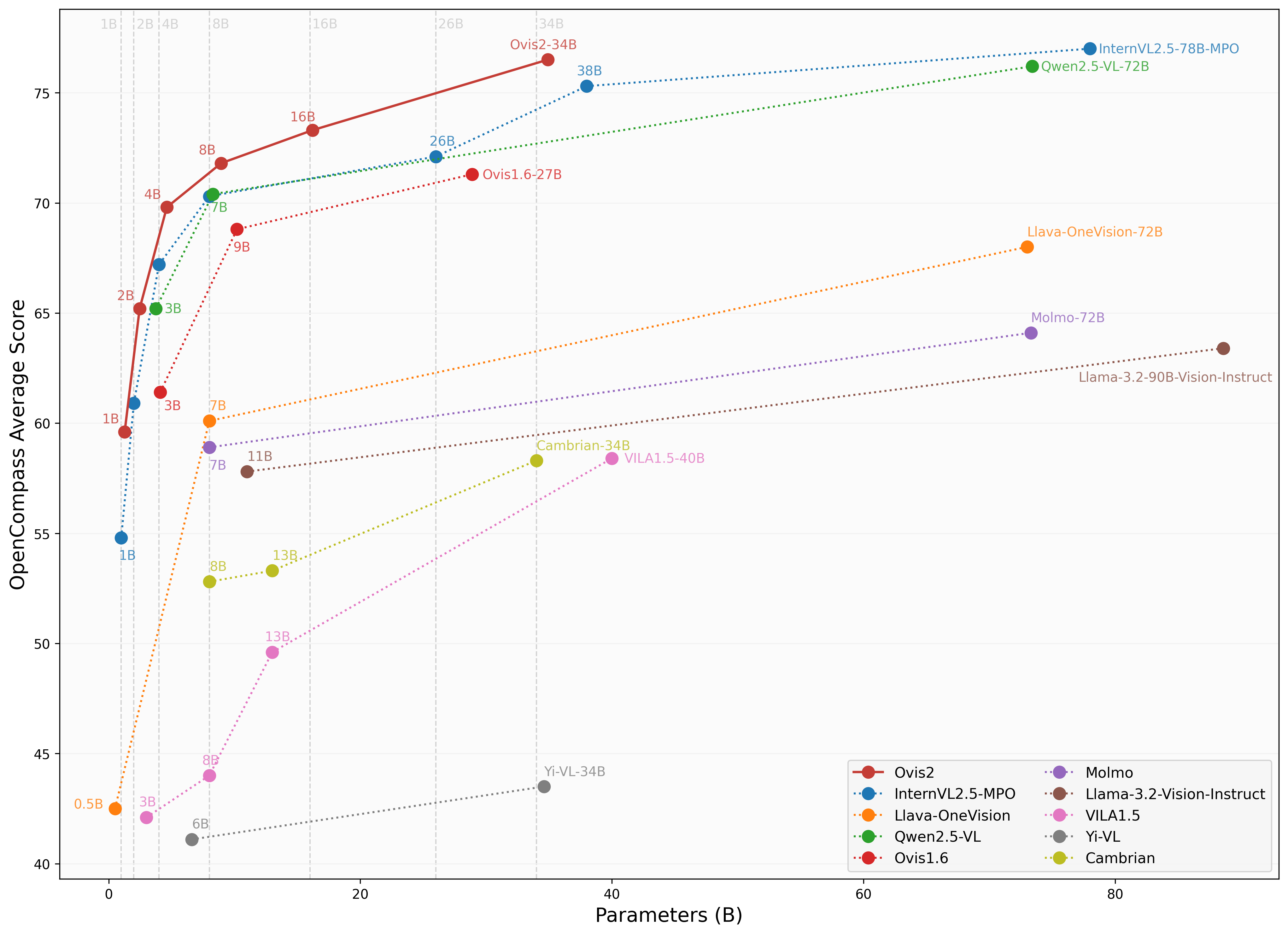

doc/performance/Ovis2.png

0 → 100644

584 KB