v2.0

Showing

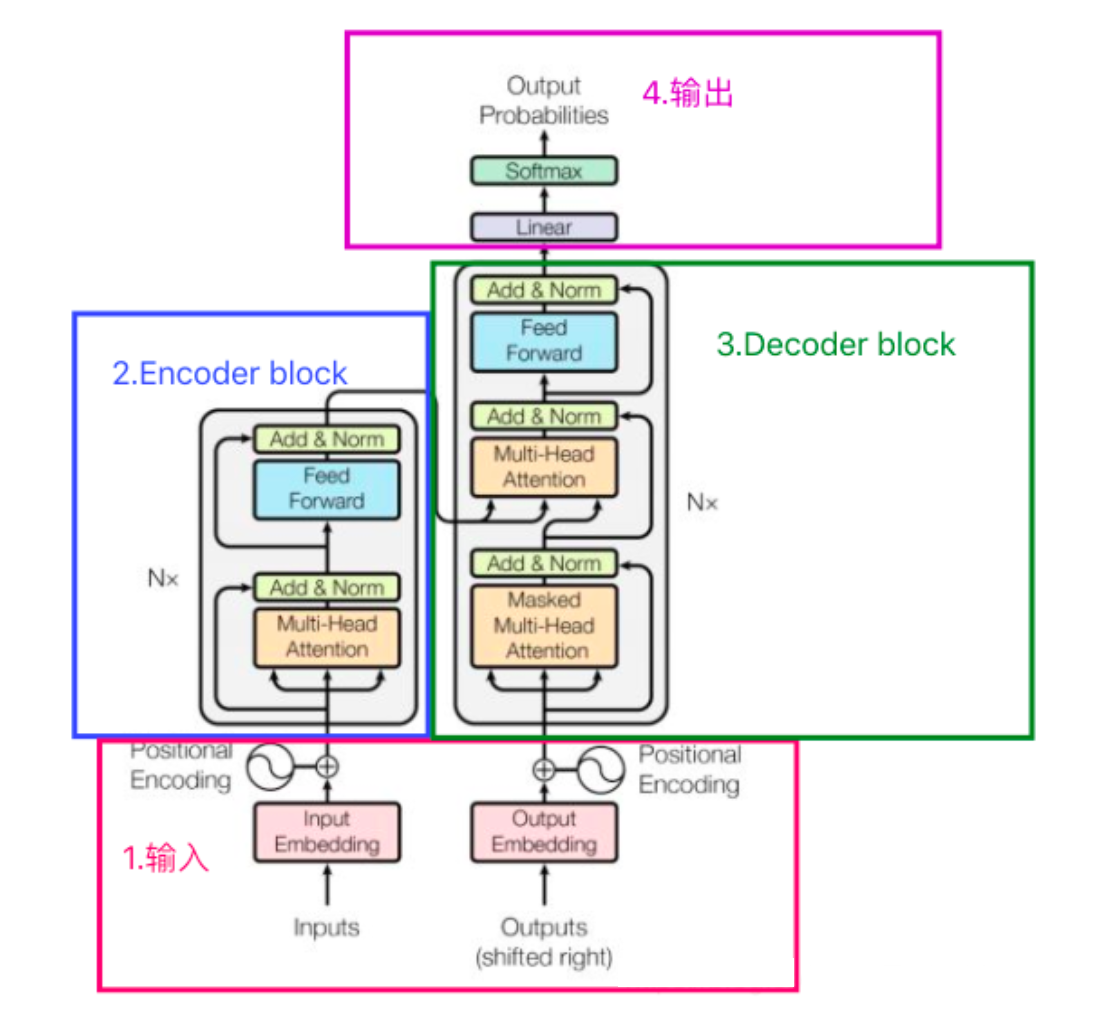

doc/transformer.png

deleted

100644 → 0

396 KB

docker_start.sh

0 → 100644

infer_fastllm.py

0 → 100644

log.txt

0 → 100644

This diff is collapsed.

No preview for this file type