v1.0

New files (mode 0 → 100644):

LICENSE

0 → 100644

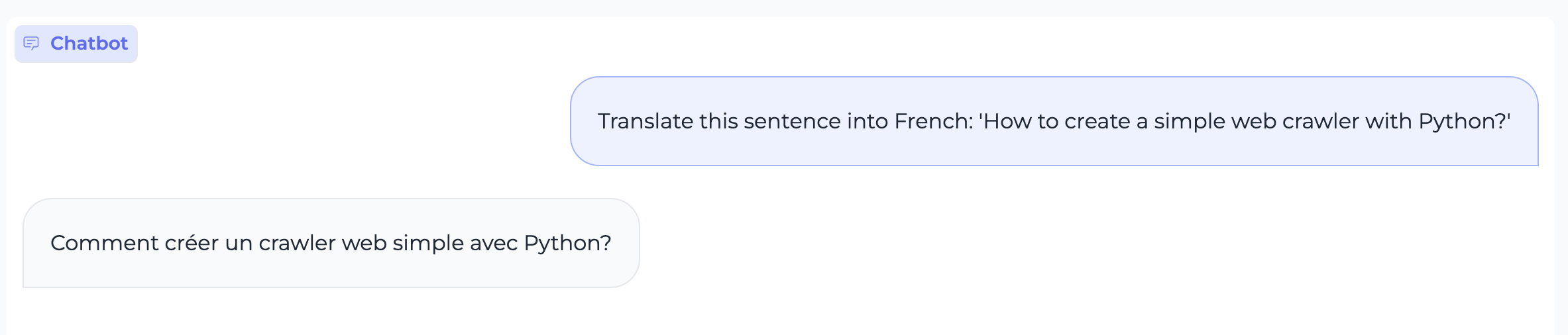

README-en.md

0 → 100644

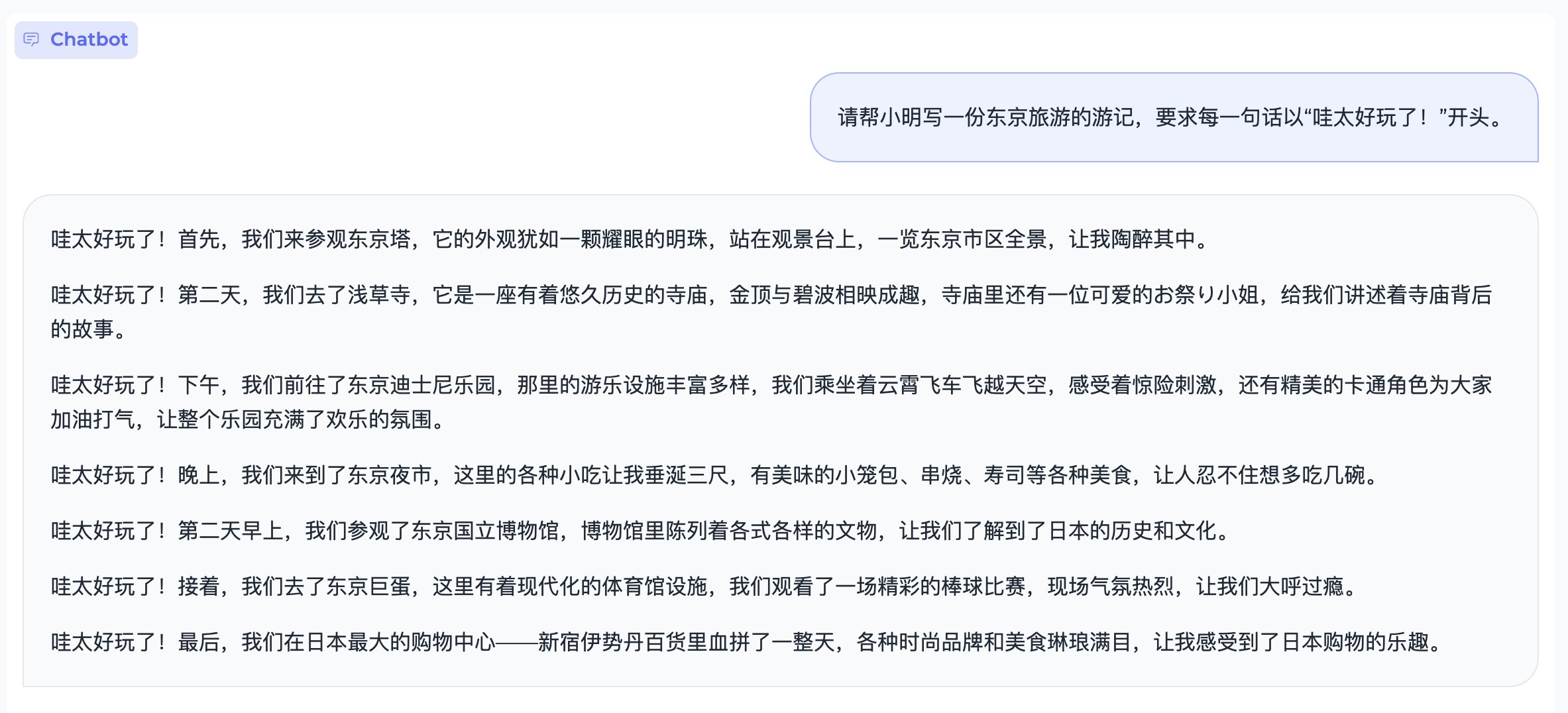

README.md

README_origin.md

0 → 100644

188 KB

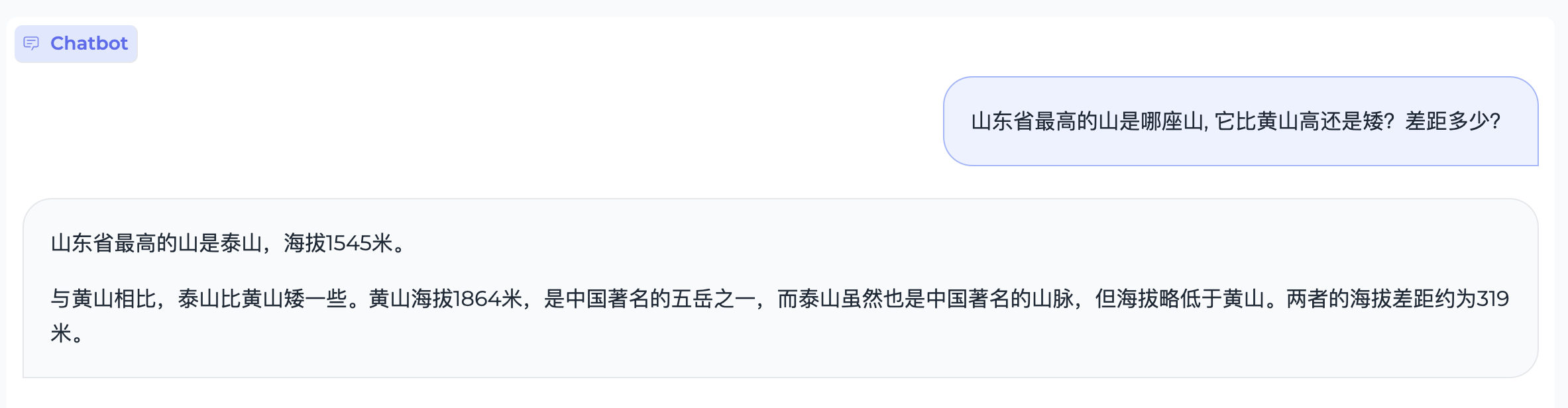

assets/code.case1.gif (4.56 MB)

assets/code.case2.gif

0 → 100644

This image diff could not be displayed because it is too large. You can view the blob instead.

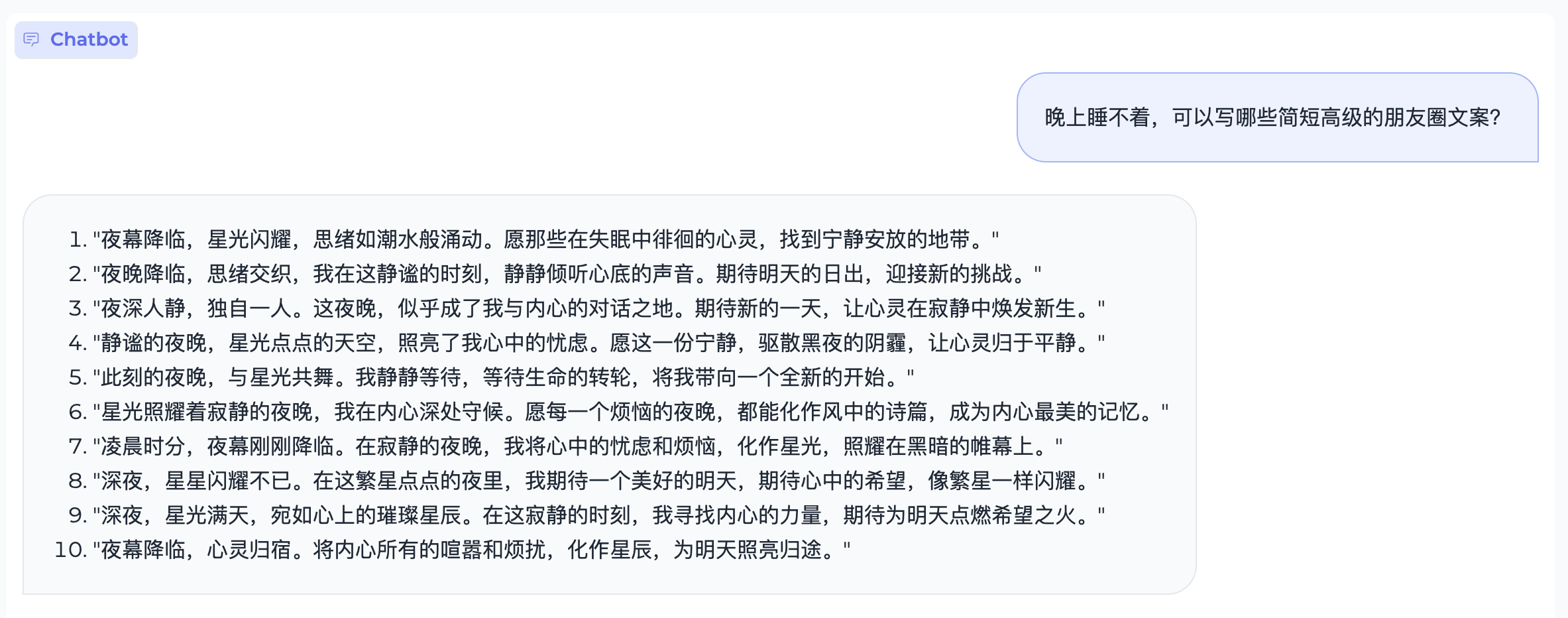

assets/creation.case1.png (111 KB)

assets/creation.case2.png

0 → 100644

373 KB

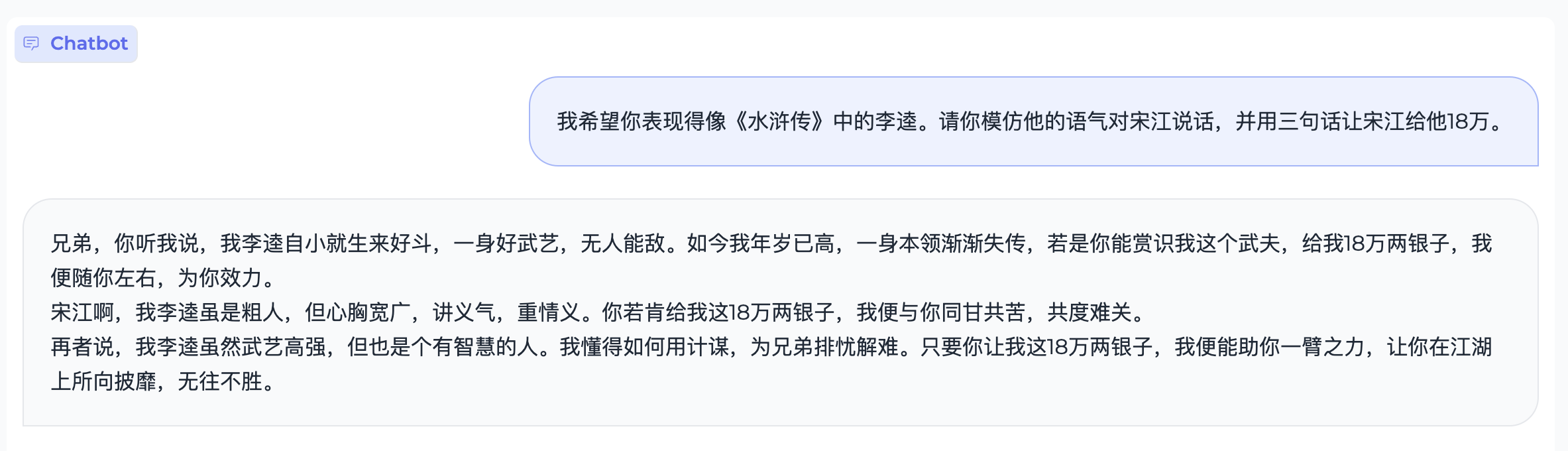

assets/creation.case3.png (204 KB)

assets/en.code.case1.gif (4.93 MB)

assets/en.creation.case1.png (304 KB)

assets/en.creation.case2.png (557 KB)

assets/en.math.case1.png (163 KB)

assets/en.math.case2.png (68.5 KB)
